Gestures To Control Computer Applications

Mohd Talha Zeeshan1, Mohd Ishthyaq Hussain2, B Karthik Raj3

1Student, Dept. of Electronics and Communication Engineering, SNIST, Ghatkesar, Hyderabad, Telangana, India

2Student, Dept. of Electronics and Communication Engineering, SNIST, Ghatkesar, Hyderabad, Telangana, India

3Student, Dept. of Electronics and Communication Engineering, SNIST, Ghatkesar, Hyderabad, Telangana, India

Abstract - Using gestures to control computer applications means that we use gestures to control our computer system. In this project, we have used hand gestures to control two main computer applications, the VLC media player and presentations, and we have also tried to use our eyes as a cursor. This type of application is very helpful in the Covid era: as we know, many people were infected with Covid simply by touching objects, so instead of using a joystick, keyboard, and mouse, we can interact with the computer without any direct contact. Like every application, it has pros and cons. It is also helpful for people who suffer from physical disabilities; many people lose fingers or hands in accidents, and such people can also access the computer easily. Using hand gestures, we can play or pause a video, increase and decrease the volume, and forward and rewind a video. In a presentation, we can use a finger as a pointer, draw or write on the slides, erase whatever we have written or drawn on a slide, and move the slides forward and backward. Here we use only Python and its libraries with the PyCharm IDE; the same project could also be built using an Arduino or other similar devices.

Key Words: Gestures, Python, VLC Media Player, Libraries, Pycharm IDE.

1. INTRODUCTION

A computing method called gesture recognition attempts to identify gestures and analyse them using mathematical algorithms. Gesture recognition is not restricted to hand motions; it can be applied to any part of the body. There is a global conference devoted to gesture and facial recognition, which is a burgeoning area of computer science, and the possibilities for using the field will expand along with the field itself. Gesture recognition software can be used with touch screens, cameras, or other peripheral devices, among other methods, to improve human-computer interaction.

For many individuals today, recognising touch screen gestures has become second nature. Although certain computers and operating systems provide individualised gesture recognition, the majority of people are now familiar with the pinch-to-zoom feature on touch screens, which lets them take a closer look at anything. Nearly all user interfaces, including those on smartphones and desktop computers, are compatible with this particular gesture. Touch screens make it relatively simple for people to interact with computers.

A camera and motion sensor are used in vision-based gesture recognition technology to monitor and translate human motions in real time. Monitoring depth data is also possible with more recent cameras and software, which helps enhance gesture recognition. Thanks to real-time image processing, users can quickly engage with the application to get the required results. As an illustration, the Xbox Kinect used a camera to interpret player gestures in various games.

Deep learning algorithms have also been evaluated in studies where a camera was used to watch a person walk in order to estimate their risk of falling and provide advice on how to reduce that risk.

Devices that employ specialised cameras and programs focused on hand tracking, such as those from Leap Motion, have been developed to enhance the results of motion tracking. Such applications can improve accuracy by concentrating solely on hand gesture detection, enabling users to interface with their systems conveniently and entirely hands-free.

2. LITERATURE SURVEY

The first approach to communicating with a computer using hand gestures was proposed by Myron W. Krueger in 1970 [1]. The aim of that approach was achieved, and mouse cursor control was accomplished using an external webcam (Genius FaceCam 320) and a software package that interpreted hand gestures and turned the recognised gestures into OS commands that handled mouse operations on the computer's display screen [2]. Selecting hand gestures as an interface in HCI permits the implementation of a wide range of applications without any physical contact with the computing environment [3]. Nowadays, the majority of HCI relies on devices such as the keyboard or mouse; however, a category of techniques based on computer vision has gained increasing significance because of its ability to recognise human gestures in a natural manner [4]. The primary aim of gesture recognition is to spot a specific human gesture and convey information to the computer. The general objective is to make the computer recognise human gestures so that a wide variety of devices can be controlled remotely with hand poses [5]. Automated vision-based recognition of hand gestures for controlling devices such as digital TVs and game consoles, and for sign language, has lately been considered a significant research topic. However, these works share common problems, such as complicated and cluttered environments, variation in skin tone, and the variety of static and dynamic hand gestures. Hand gesture recognition for TV control is proposed in [6]. In this system, only one gesture is used to control the TV with the user's hand; on the display, a hand icon appears that follows the user's hand. In [7], a real-time HCI system that recognises gestures using only a monocular camera was developed and extended to the HRI (human-robot interaction) case. The system relies on a Convolutional Neural Network classifier to learn features and recognise gestures. The Hidden Markov Model (HMM) serves as a crucial tool for recognising dynamic gestures in real time. The HMM-based method works in real time and is built to operate in static environments. The approach uses the LRB (left-right banded) topology of the HMM together with the Baum-Welch algorithm for training, and the Forward and Viterbi algorithms for testing, evaluating the input observation sequences and producing the best attainable state sequence for pattern recognition [8]. In [9], the system is designed to be easy to use compared with the latest systems or command-based systems; however, it is less powerful at detection and recognition.

The system needs to be upgraded with a more robust algorithm for both detection and recognition that copes with a cluttered background and normal lighting. It also needs to be extended to additional gesture categories, as it was built for just six classes. Nevertheless, this system can be used to control applications such as PowerPoint presentations, games, media players, and Windows Picture Manager. In [10], a hand-gesture-controlled laptop uses an Arduino Uno, ultrasonic sensors, and a laptop to perform activities such as controlling media playback and volume, with Python used for the serial connection. This type of technology can be employed in the classroom for easier and more interactive learning, immersive gaming, and interacting with virtual objects on screen.

3. PROPOSED METHODOLOGY

This project made use of a number of computer vision methods. These include colour segmentation, morphological filtering, feature extraction, contours, and the convex hull, together with controlling the media player and presentation using pyautogui.

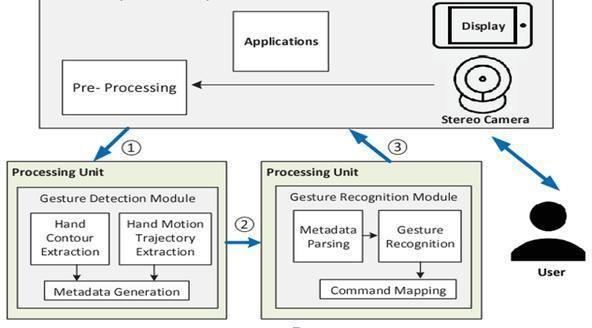

3.1.1 Data Flow Diagram


The data flow diagram essentially shows how data and control flow from one module to another. Unless the input filenames are given correctly, the program cannot proceed to the next module. Once the user gives the correct input filenames, parsing is done individually for each file. The required information is taken during parsing, and an adjacency matrix is generated for it. From the adjacency matrix, a lookup table is generated that gives the paths for blocks.

In addition, the final sequence is computed with the lookup table and the final required code is generated in an output file. In the case of multiple input files, the code for each is generated and combined.

3.1.2 Component Diagram

The component diagram for the gesture detection system includes the various units for input and output operations. Our design has mainly two processes: one captures the image through the camera, which is done by invoking OpenCV, and the other is the pre-processing done by the system. The processing includes two units that process the image captured by the camera. First, the pre-processing unit detects the metadata of the image and its trajectory, that is, the orientation in which the hand's fingers were raised; the result is then sent for further pre-processing.

In further pre-processing, the system uses a feature-extraction algorithm to recognise the raised fingers. The features are extracted using the metadata and information from the previous pre-processing steps. It is interesting to note that the whole sequence of activities takes place via this module itself, i.e. the parsing and the process of computing the final sequence. The parsing redirects across the other modules until the final code is generated.

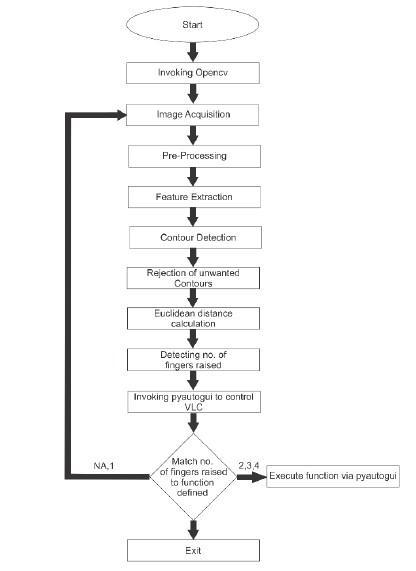

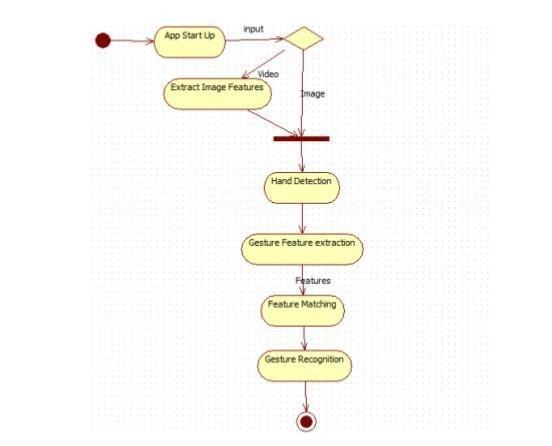

3.1.3 Activity Schematic

An activity diagram displays the order in which the many phases of a complicated process must occur. An activity is represented by a circle holding the operation's name. A transition caused by the completion of an activity is denoted by an outgoing solid arrow connected to the end of the activity symbol.

Activity diagrams are visual depictions of processes, with choice, iteration, and concurrency supported by activities and actions. Activity diagrams in the Unified Modelling Language are meant to represent both organisational and computational operations (i.e. workflows). Activity diagrams display the entire control flow and are made up of a small number of arrow-connected shapes. The most significant shapes include:

Rounded rectangles stand for actions, diamonds for choices, bars for the beginning or end of concurrent activities, a black circle for the workflow's initial state, and an encircled black circle for its conclusion (final state).

Activity diagrams serve the same fundamental goals as the other four diagrams: they capture the system's dynamic behaviour. The other four diagrams depict the message flow from one object to another, whereas the activity diagram depicts the flow from one activity to another.

An activity is a specific system function. In addition to helping visualise a system's dynamic nature, activity diagrams are used to build the executable system using forward and reverse engineering methodologies.

Recognition of hand gestures involves several activities. As shown in figure 5.5, the first activity is to start the camera to capture the image. This activity automatically invokes the camera using the OpenCV library in Python as soon as the program starts. Then, from the captured image, the gesture information is extracted. This information is used to extract features such as contours, the convex hull, and the defect points, from which the number of fingers in front of the camera is recognised. Once the finger information is extracted, the application automatically performs an action such as play, pause, seek forward, or seek backward on the VLC Media Player, based on the number of fingers raised.

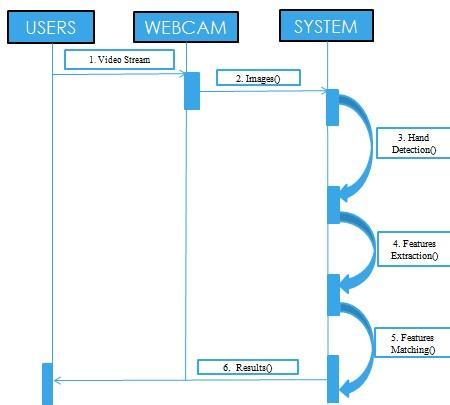

3.1.4 Sequence Diagram

Sequence diagrams are an easy and intuitive way of describing the behaviour of a system by viewing the interaction between the system and its environment. A sequence diagram shows an interaction arranged in a time sequence. It has two dimensions: the vertical dimension represents time, and the horizontal dimension represents the objects that exist during the interaction.

The sequence diagram shown explains the flow of the program. As this system is based on human-computer interaction, it includes the user, the computer, and the medium that connects both digitally, that is, the web camera. When the program starts, it first invokes the web camera to take an RGB image of the hand. The image is then segmented and filtered to reduce noise. After noise removal, the hand gesture is detected, i.e. the raised fingers are pre-processed and features are extracted from them. After feature extraction, the features are matched using conditional statements in the program. When a feature set matches, the program automatically controls the media player and gives the required result.

3.2 Obtaining Data

The first step is to collect the picture from the camera and designate a region of interest in the frame. This is vital because the image may include many variables that can have unintended effects, and restricting processing to the region of interest greatly reduces the amount of data that has to be processed. A webcam, which continually records frames, is used to take the picture and provides the raw data needed for processing. The input image in this case is of type uint8. The RGB picture obtained must first be pre-processed before the components are separated and recognition is performed.

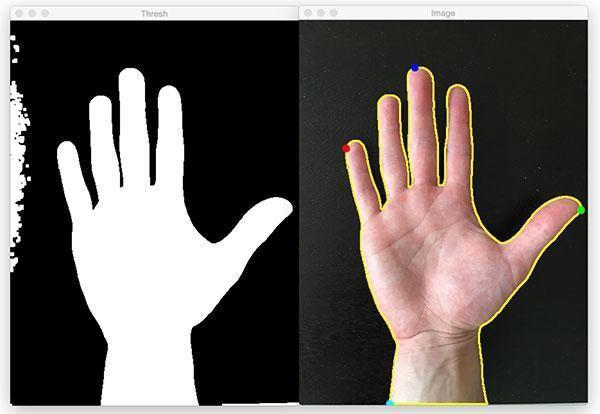

3.2.1 Threshold-Based Colour Segmentation

Identification of certain areas within a picture is known as segmentation. The following steps illustrate the algorithm used for threshold-based colour segmentation:

• Use the camera to record a picture of the gesture.

• Establish the range of HSV values for skin tone that will be used as thresholds.

• Convert the image's colour space from RGB to HSV.

• Change all pixels that fall inside the threshold values to white.

• Change all remaining pixels to black.

• Save the segmented image as an image file.
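The segmentation steps above can be sketched in pure Python to make the per-pixel logic explicit. This is a minimal illustration, not the project's code: the HSV bounds `LOWER_HSV`/`UPPER_HSV` are assumed values (the paper does not state its thresholds), and the helper names are hypothetical. In an OpenCV pipeline the same steps are usually done with `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)` followed by `cv2.inRange(hsv, lower, upper)`, and the mask can be saved with `cv2.imwrite`.

```python
import colorsys

# Illustrative skin-tone bounds in OpenCV-style HSV units
# (H: 0-179, S: 0-255, V: 0-255). These are assumptions, not the paper's values.
LOWER_HSV = (0, 48, 80)
UPPER_HSV = (20, 255, 255)


def rgb_to_hsv_cv(r, g, b):
    """Convert one 8-bit RGB pixel to OpenCV-style HSV (H 0-179, S and V 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # colorsys returns values in [0, 1]; OpenCV stores hue as degrees / 2.
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def segment_skin(image, lower=LOWER_HSV, upper=UPPER_HSV):
    """Return a binary mask: 255 (white) where the pixel lies inside the HSV range, else 0 (black).

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            hsv = rgb_to_hsv_cv(r, g, b)
            inside = all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))
            mask_row.append(255 if inside else 0)
        mask.append(mask_row)
    return mask


# Usage: a 2x2 "frame" with one skin-like pixel.
frame = [[(224, 172, 140), (0, 0, 255)],
         [(0, 0, 0), (0, 255, 0)]]
print(segment_skin(frame))  # [[255, 0], [0, 0]]
```

Working per pixel keeps the thresholding rule visible; a real implementation would vectorise this with OpenCV or NumPy.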

3.2.2 Morphological Filtering

Morphological image processing is a collection of non-linear operations related to the shape or morphology of features in an image. According to Wikipedia, morphological operations rely only on the relative ordering of pixel values, not on their numerical values, and are therefore especially suited to the processing of binary images. Morphological operations can also be applied to greyscale images whose light transfer functions are unknown and whose absolute pixel values are therefore of no or minor interest.

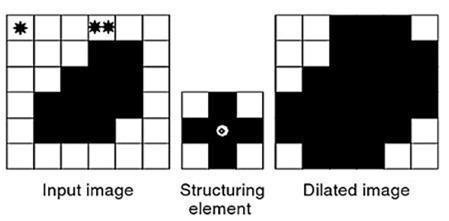

3.2.3 Dilation

During dilation, the binary image is expanded from its original shape. The structuring element determines how the binary image is enlarged. This structuring element is small compared with the image itself; its typical size is 3 × 3.

Let B be the structuring element and X the reference image. The dilation operation is defined by the equation:

$$X \oplus B = \{\, z \mid (\hat{B})_z \cap X \neq \emptyset \,\}$$

The structuring element is then moved to the right. One of the black squares of B is found to overlap or intersect a black square of X at that location; hence, black is inserted into the square at that position. The structuring element B is translated from left to right and from top to bottom over the image X to yield the dilated image.
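The dilation rule can be written directly from its definition as a small pure-Python sketch. It assumes a binary image stored as a list of 0/1 rows and a 3 × 3 square structuring element; pixels outside the image are treated as background. The names `SE_3X3` and `dilate` are hypothetical (OpenCV's equivalent is `cv2.dilate`).

```python
# 3x3 square structuring element B, stored as (dy, dx) offsets from its centre.
# It is symmetric, so its reflection B^ is the same set of offsets.
SE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def dilate(image, se=SE_3X3):
    """Binary dilation X (+) B: pixel z becomes 1 if (B^)_z overlaps any 1 in X.

    `image` is a list of rows of 0/1 values; pixels outside the image count as 0.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in se:
                # (B^)_z = {z - b : b in B}: visit the reflected offset.
                yy, xx = y - dy, x - dx
                if 0 <= yy < h and 0 <= xx < w and image[yy][xx] == 1:
                    out[y][x] = 1
                    break  # one overlapping pixel is enough
    return out


# Usage: a single foreground pixel grows into a 3x3 block.
img = [[0] * 5 for _ in range(5)]
img[2][2] = 1
for row in dilate(img):
    print(row)
```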

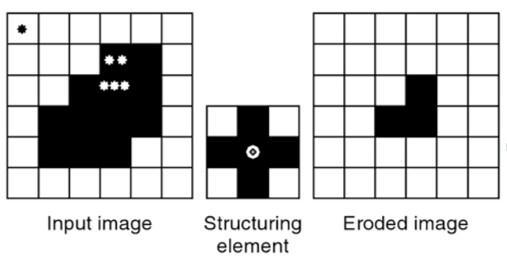

3.2.4 Erosion

Dilation's opposite process is erosion. Whereas dilation enlarges an image, erosion reduces it. The structuring element determines how the image is reduced; at 3 × 3, the structuring element is usually much smaller than the picture.

Compared with larger structuring elements, this guarantees faster computation. The erosion process shifts the structuring element from left to right and from top to bottom, almost identically to the dilation process.

$$X \ominus B = \{\, z \mid (B)_z \subseteq X \,\}$$

According to the equation, a position is kept only when the structuring element placed there is a subset of, or equal to, the binary image X. Fig. 6.3 illustrates this procedure. Again, the white square denotes the number 0, while the black square denotes the number 1. The erosion process begins at point •.

Because there isn't a complete overlap in this instance, the pixel at location • will stay white.

The same condition is then seen when the structuring element is moved to the right: because there isn't total overlap at position u, white is applied to the black square marked by the asterisk (*).

The structuring element is then moved once more until its centre is at the location indicated by the asterisk (••).
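The erosion walk-through above corresponds to the following pure-Python sketch, assuming the same list-of-0/1-rows image format and 3 × 3 square structuring element as before. Pixels outside the image are treated as background (0), so foreground touching the border also erodes; that boundary choice is an assumption of this sketch. The function name is hypothetical (OpenCV's equivalent is `cv2.erode`).

```python
SE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def erode(image, se=SE_3X3):
    """Binary erosion X (-) B: pixel z stays 1 only if (B)_z fits entirely inside X.

    Pixels outside the image count as 0 (background), so the border erodes too.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # (B)_z = {z + b : b in B}; require every covered pixel to be 1.
            fits = all(
                0 <= y + dy < h and 0 <= x + dx < w and image[y + dy][x + dx] == 1
                for dy, dx in se
            )
            out[y][x] = 1 if fits else 0
    return out


# Usage: a 3x3 block of 1s shrinks to its single centre pixel.
img = [[0] * 5 for _ in range(5)]
for y in range(1, 4):
    for x in range(1, 4):
        img[y][x] = 1
print(sum(map(sum, erode(img))))  # 1
```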

There may be some "1s" in the background if the segmentation is not continuous; this is known as background noise. There is also a chance that the system recognises a motion incorrectly; this is known as gesture noise. These errors should be eliminated to achieve accurate gesture contour detection. To obtain a smooth, closed, and complete hand shape, a morphological filtering strategy that combines dilation (enlargement) and erosion (shrinking) is used.
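The paragraph above names dilation and erosion but not how they are combined. A common way, assumed here rather than taken from the paper, is an opening (erosion then dilation), which removes isolated background "1s", and a closing (dilation then erosion), which fills small holes in the hand. The sketch is self-contained pure Python with hypothetical function names; OpenCV offers the same operations through `cv2.morphologyEx` with `cv2.MORPH_OPEN` and `cv2.MORPH_CLOSE`.

```python
SE_3X3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _inside(image, y, x):
    """True if (y, x) is within the image and holds a 1; outside counts as 0."""
    return 0 <= y < len(image) and 0 <= x < len(image[0]) and image[y][x] == 1


def dilate(image, se=SE_3X3):
    """Pixel becomes 1 if any reflected SE offset lands on a 1."""
    return [[int(any(_inside(image, y - dy, x - dx) for dy, dx in se))
             for x in range(len(image[0]))] for y in range(len(image))]


def erode(image, se=SE_3X3):
    """Pixel stays 1 only if every SE offset lands on a 1."""
    return [[int(all(_inside(image, y + dy, x + dx) for dy, dx in se))
             for x in range(len(image[0]))] for y in range(len(image))]


def opening(image):
    """Erosion then dilation: removes specks smaller than the SE."""
    return dilate(erode(image))


def closing(image):
    """Dilation then erosion: fills holes and gaps smaller than the SE."""
    return erode(dilate(image))


# Usage 1: a 3x3 "hand" block plus one isolated noise pixel.
noisy = [[0] * 7 for _ in range(7)]
for y in range(1, 4):
    for x in range(1, 4):
        noisy[y][x] = 1
noisy[5][5] = 1
cleaned = opening(noisy)
print(cleaned[5][5], sum(map(sum, cleaned)))  # 0 9

# Usage 2: a 3x3 block with a hole in its centre.
holed = [row[:5] for row in noisy[:5]]
holed[2][2] = 0
filled = closing(holed)
print(filled[2][2], sum(map(sum, filled)))  # 1 9
```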

3.2.5 Extraction of Features

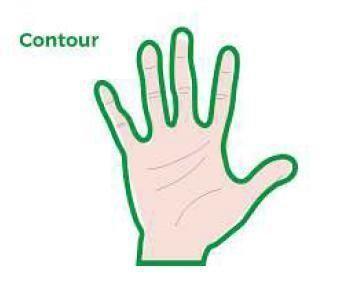

Itispossibletouseapre-madeorpre-processedphotograph,butthefinalimagelosesitsuniquehighlights.Thefollowing aretheattributesthatmayberetrieved:LocatingContoursii.

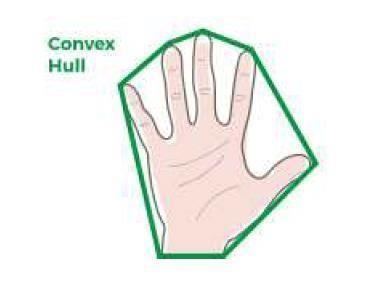

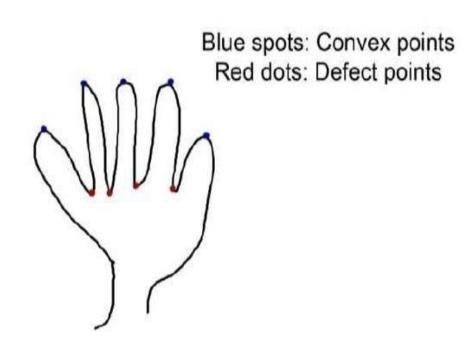

Locatingandfixingconvexhulliii.Operationsin Mathematics

1. Contours: this refers to the hand's orientation, i.e. whether the hand is placed vertically or horizontally. Assuming that the hand is vertical and the bounding box is ten lengths long, we first attempt to determine the orientation from the length-to-breadth ratio of the bounding box. For a vertical hand the bounding box is taller than it is wide; if the hand is positioned horizontally, so that the bounding box's width is greater than its height, the relationship is reversed.

2. Identifying and correcting convex hulls: a hand posture may be identified by its orientation and the number of visible fingers. To obtain the total number of fingers shown in a hand motion, we need to process only the finger portion of the previously processed hand, by determining and evaluating the centroid.

3. Mathematical operations: the angle between two fingers may be determined with the law of cosines, angle = math.acos((b**2 + c**2 - a**2) / (2 * b * c)) * 180 / math.pi, where a is the distance between the two fingertips and b and c are their distances to the point between the fingers. This formula calculates the angle between two fingers in order to distinguish between the various fingers and identify each one individually. To extract the right number of raised fingers in the image, we may additionally calculate the length of each raised or folded finger from its coordinate points, using the centroid as the reference point.
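The three feature steps above can be sketched in pure Python, under stated assumptions: the hand contour is a list of (x, y) points; the convex hull is computed with Andrew's monotone chain algorithm, a stand-in for OpenCV's `cv2.convexHull`; and each convexity defect is given as a (start, end, far) point triple, similar to what `cv2.convexityDefects` reports as contour indices. The 90° cut-off for a finger gap is a common heuristic, not a value stated in this paper, and all function names are hypothetical. The conversion to degrees uses `math.degrees`, i.e. exactly 180/π ≈ 57.3.

```python
import math


def orientation(contour):
    """'vertical' if the bounding box is at least as tall as it is wide, else 'horizontal'."""
    xs = [p[0] for p in contour]
    ys = [p[1] for p in contour]
    width, height = max(xs) - min(xs), max(ys) - min(ys)
    return "vertical" if height >= width else "horizontal"


def convex_hull(points):
    """Andrew's monotone chain; hull vertices counter-clockwise (in y-up coordinates)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left turn.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # the two chains share endpoints


def defect_angle(start, end, far):
    """Angle in degrees at the valley point `far`, by the law of cosines."""
    a = math.dist(start, end)  # side opposite the angle: fingertip to fingertip
    b = math.dist(start, far)
    c = math.dist(end, far)
    cos_angle = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))  # clamp rounding error


def count_finger_gaps(defects, max_angle=90.0):
    """Count defects sharp enough to be the gap between two raised fingers.

    n such gaps usually means n + 1 raised fingers (heuristic assumption).
    """
    return sum(1 for s, e, f in defects if defect_angle(s, e, f) < max_angle)


# Usage
print(convex_hull([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1)]))  # [(0, 0), (2, 0), (2, 2), (0, 2)]
print(orientation([(0, 0), (1, 5)]))                          # vertical
print(round(defect_angle((0, 0), (2, 0), (1, 1))))            # 90
```

Monotone chain was chosen only because it is short and dependency-free; with OpenCV installed, the library functions named above would normally be used instead.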

The input video is captured and split into frames. The test results are displayed below:

1. The RGB picture obtained has to be pre-processed, as illustrated in figure 7.1, before the components are separated and recognition is performed.

2. We used Otsu's thresholding method in this research.

Otsu's method carries out clustering-based thresholding automatically: a single threshold is chosen that divides the image's pixel histogram into two classes (foreground and background) by maximising the variance between them.
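Otsu's threshold selection can be sketched in pure Python to show what "automatic" means here: every candidate threshold is tried, and the one that best separates the two histogram classes wins. This is an illustrative implementation with hypothetical names, operating on a flat list of 8-bit grey values; in OpenCV the usual call is `cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)`.

```python
def otsu_threshold(pixels):
    """Return the threshold t (0-255) that maximises the between-class variance.

    Pixels <= t form one class and pixels > t the other.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * n for i, n in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = sum0 = 0  # pixel count and intensity sum of the lower class
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarise(pixels, t):
    """Map pixels above the threshold to 255 (white) and the rest to 0 (black)."""
    return [255 if p > t else 0 for p in pixels]


# Usage: a bimodal "image" of dark background (10) and bright hand (200).
pixels = [10] * 50 + [200] * 50
t = otsu_threshold(pixels)
print(binarise([10, 200], t))  # [0, 255]
```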

Contours are continuous curves joining the points along a boundary that have the same colour or intensity. Contours are also an excellent tool for object detection and recognition.

4. RESULTS

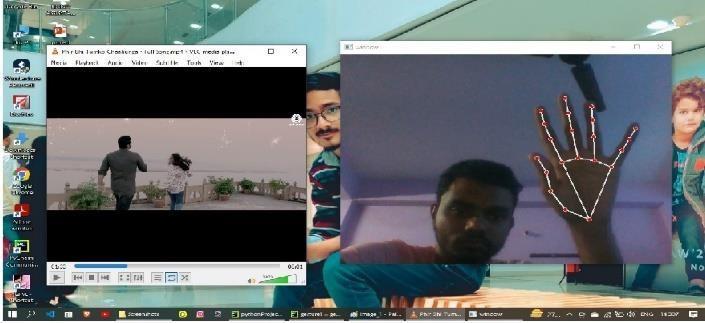

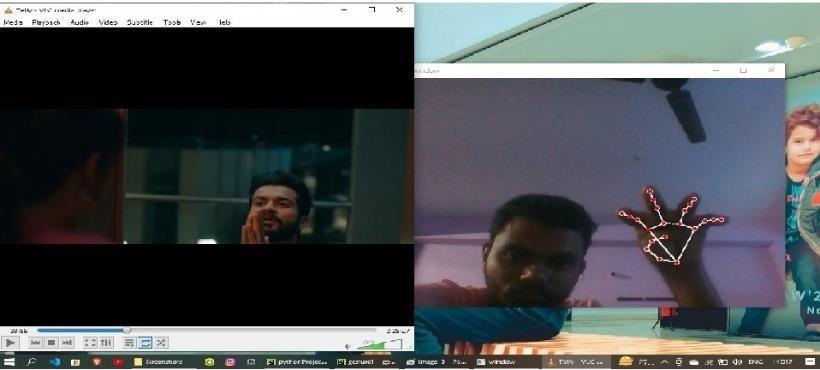

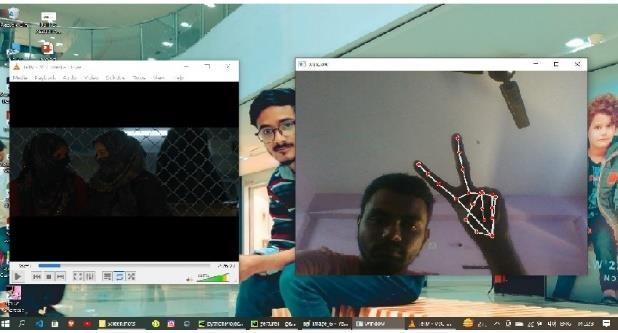

We can get the output by running the Python code in any integrated development environment; here we use PyCharm. With it, we are able to control a video player.

The proposed system performs different operations based on hand gestures, namely the number of raised fingers in the region of interest. A hand gesture recognition system, as seen in the figure, identifies shapes and/or orientations in order to assign the system a task.

4.1 Results for controlling a video player

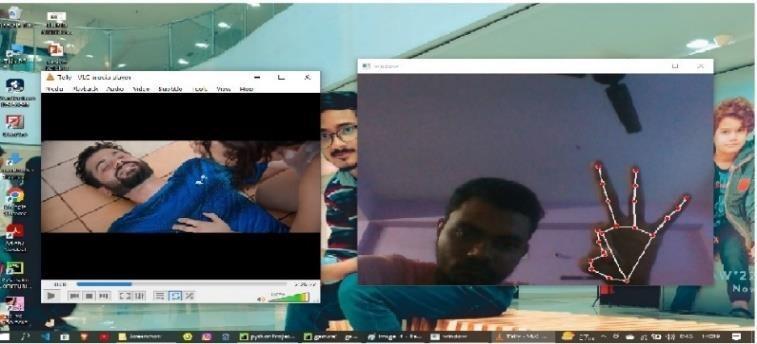

The figure below shows hand landmark detection based on MediaPipe.
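The gestures that follow depend on knowing which fingers are raised. A minimal sketch of that step, assuming MediaPipe Hands' 21-landmark layout (indices 4, 8, 12, 16 and 20 are the fingertips) and normalised coordinates with y growing downwards: a finger counts as raised when its tip is above its PIP joint. With the real library the pairs would come from `results.multi_hand_landmarks[0].landmark[i].x` and `.y`. The thumb rule and the `thumb_points_left` switch are simplifying assumptions, and the function name is hypothetical.

```python
# MediaPipe Hands landmark indices (0 = wrist; 4, 8, 12, 16, 20 = fingertips).
TIP_IDS = (4, 8, 12, 16, 20)    # thumb, index, middle, ring, little
JOINT_IDS = (3, 6, 10, 14, 18)  # thumb IP joint, then each finger's PIP joint


def fingers_up(landmarks, thumb_points_left=True):
    """Return 0/1 flags (thumb, index, middle, ring, little) for one hand.

    `landmarks` is a sequence of 21 (x, y) pairs in normalised image
    coordinates with y growing downwards, as MediaPipe reports them.
    """
    states = []
    # Thumb: it folds sideways, so compare x. Which direction counts as
    # "extended" depends on the hand and on whether the frame is mirrored,
    # hence the (assumed) `thumb_points_left` switch.
    tip_x, joint_x = landmarks[TIP_IDS[0]][0], landmarks[JOINT_IDS[0]][0]
    extended = tip_x < joint_x if thumb_points_left else tip_x > joint_x
    states.append(int(extended))
    # Other fingers: raised when the tip is above (smaller y than) its PIP joint.
    for tip, joint in zip(TIP_IDS[1:], JOINT_IDS[1:]):
        states.append(int(landmarks[tip][1] < landmarks[joint][1]))
    return tuple(states)


# Usage with synthetic landmarks: everything at the centre, then raise the index finger.
hand = [(0.5, 0.5)] * 21
hand[8] = (0.5, 0.2)  # index tip above its PIP joint (landmark 6)
print(fingers_up(hand))  # (0, 1, 0, 0, 0)
```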

1.Whenwegiveourhandasaninputthevideoiscaptuedandlandmarksaredetected

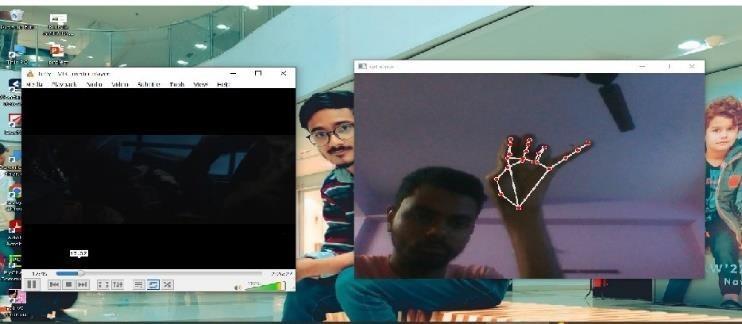

2.Whenwefoldourhandthevideoplayerispaused

3.whenweopenorstretchourfivefingersvideostartsplaying.

4.Whenweraiseourmiddlefinger,ringfingerandlittlefingerthevolumeisraisedorincreases.

5.Whenwelowerourfourfingersleavingthumbfingervolumedecrease.

6.Usethesmallfingertotomoveforwardthevideobyten seconds

7.Raisetwofingerstorewindthevideo
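The "conditional statements" that match a gesture to a media-player action can be expressed as a lookup table over finger-state tuples (thumb, index, middle, ring, little). This sketch encodes the gestures listed above; which two fingers mean "rewind" is not stated, so index plus middle is an assumption, and the key combinations in `ASSUMED_HOTKEYS` are assumed VLC defaults that should be checked in VLC's hotkey preferences. The key sender is injected so the sketch runs without pyautogui; in a live setup it could be `lambda keys: pyautogui.hotkey(*keys)`. All names are hypothetical.

```python
# Finger states are (thumb, index, middle, ring, little); 1 = raised.
VLC_GESTURES = {
    (0, 0, 0, 0, 0): "pause",        # fist
    (1, 1, 1, 1, 1): "play",         # open hand
    (0, 0, 1, 1, 1): "volume_up",    # middle, ring and little fingers
    (1, 0, 0, 0, 0): "volume_down",  # thumb only
    (0, 0, 0, 0, 1): "forward_10s",  # little finger only
    (0, 1, 1, 0, 0): "rewind",       # two fingers (assumed: index + middle)
}

# Assumed VLC hotkeys; verify them in VLC's Hotkeys preferences before use.
ASSUMED_HOTKEYS = {
    "pause": ("space",),
    "play": ("space",),
    "volume_up": ("ctrl", "up"),
    "volume_down": ("ctrl", "down"),
    "forward_10s": ("alt", "right"),
    "rewind": ("alt", "left"),
}


class GestureDispatcher:
    """Send a hotkey only when the recognised gesture changes.

    Holding a pose for many frames therefore triggers the action once,
    which matters because Space toggles play/pause in VLC.
    """

    def __init__(self, send):
        self.send = send  # e.g. lambda keys: pyautogui.hotkey(*keys)
        self.last = None

    def update(self, states):
        action = VLC_GESTURES.get(tuple(states))
        if action is not None and action != self.last:
            self.send(ASSUMED_HOTKEYS[action])
        self.last = action
        return action


# Usage: record the key combinations instead of pressing them.
sent = []
dispatcher = GestureDispatcher(sent.append)
for frame_states in [(0, 0, 0, 0, 0), (0, 0, 0, 0, 0), (0, 0, 1, 1, 1)]:
    dispatcher.update(frame_states)
print(sent)  # [('space',), ('ctrl', 'up')]
```

Acting only on a change of gesture is a design choice of this sketch; without it, a held fist would toggle playback on every frame.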

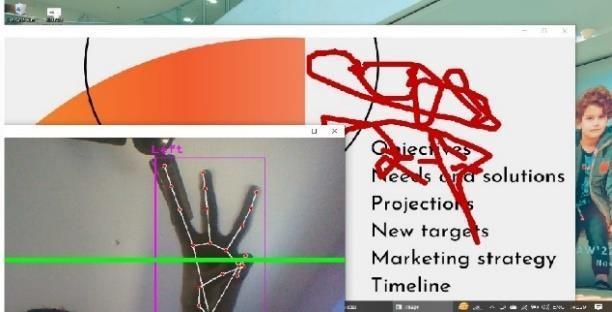

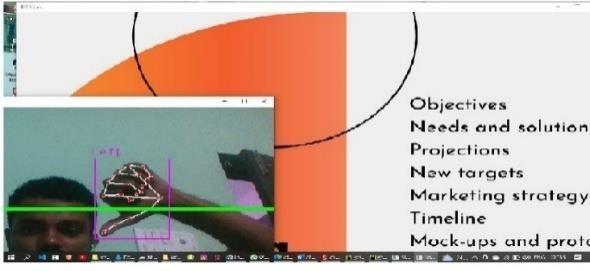

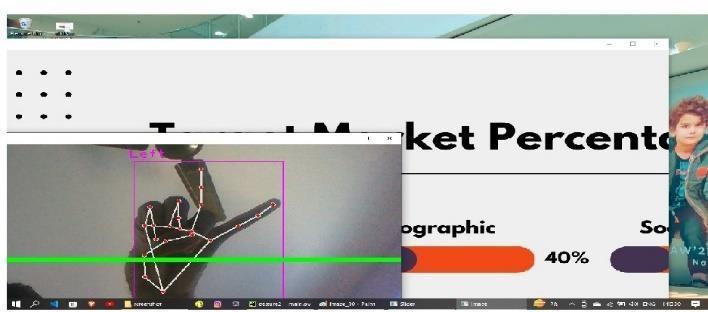

4.2 Results for controlling a presentation

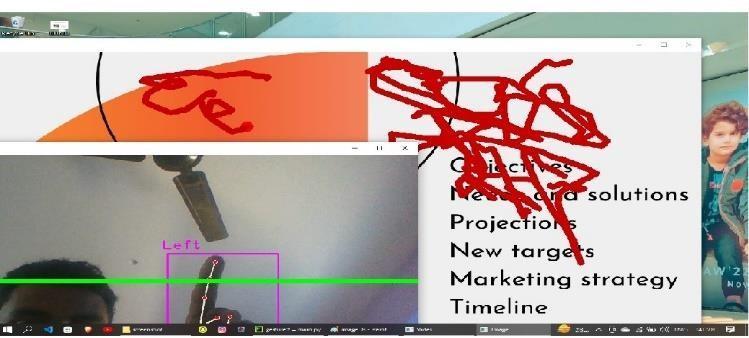

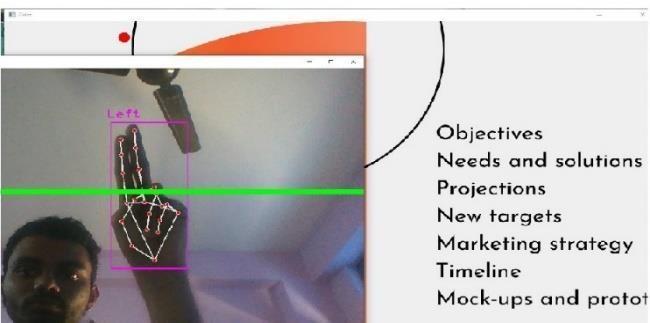

8. To get a pointer on a slide, join the index and middle fingers; a pointer then appears on the screen.

9. To draw or write on a slide, use the index finger alone.

10. To erase the drawing or written text, raise the index, middle, and ring fingers.

11. To move to the next slide, use the little finger.

12. To go to the previous slide, move your thumb upwards.

13. When we move our eyes, they act as a cursor.
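The presentation gestures listed above can be sketched the same way, as a lookup from finger states to actions plus a helper that maps a normalised point (a fingertip, or a gaze estimate for the eye-controlled cursor) to screen pixels. The "joined fingers" test via `tip_gap` and its `join_threshold` value are assumptions, as are all names; a live version might move the cursor with `pyautogui.moveTo(x, y)`.

```python
PRESENTATION_GESTURES = {
    (0, 1, 1, 0, 0): "pointer",         # index + middle joined
    (0, 1, 0, 0, 0): "draw",            # index finger only
    (0, 1, 1, 1, 0): "erase",           # index, middle and ring
    (0, 0, 0, 0, 1): "next_slide",      # little finger
    (1, 0, 0, 0, 0): "previous_slide",  # thumb
}


def classify(states, tip_gap=None, join_threshold=0.05):
    """Map finger states (thumb, index, middle, ring, little) to a presentation action.

    `tip_gap` is the normalised distance between the index and middle
    fingertips; the pointer needs the two fingers joined (gap below the
    assumed `join_threshold`).
    """
    action = PRESENTATION_GESTURES.get(tuple(states))
    if action == "pointer" and (tip_gap is None or tip_gap > join_threshold):
        return None
    return action


def to_screen(x_norm, y_norm, screen_w, screen_h):
    """Convert a normalised (0-1) point to pixel coordinates, clamped to the screen."""
    x = min(max(int(x_norm * screen_w), 0), screen_w - 1)
    y = min(max(int(y_norm * screen_h), 0), screen_h - 1)
    return x, y


# Usage
print(classify((0, 1, 1, 0, 0), tip_gap=0.02))  # pointer
print(to_screen(0.5, 0.5, 1920, 1080))          # (960, 540)
```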

5. CONCLUSION

In this project, the hand gesture detection system for controlling UI, a standalone application for manipulating different user interface controls and/or applications such as VLC Media Player, was conceived, designed, and built. During the analysis phase, we gathered data on the numerous gesture recognition systems now in use: their methodologies, algorithms, and success/failure rates. As a result, we carefully compared various solutions and evaluated their effectiveness. We created the system architecture diagrams and the data flow diagram of the system.

When converting a greyscale image to a binary image, thresholding is used to locate outlines. For instance, the lighting over the photograph of the hand may be uneven, so contours may be drawn around dark areas rather than around the hand itself. This should be prevented by adjusting the threshold. For an accurate analysis of gesture recognition, the background of the images should be clear. Additional periodic checks are useful for ensuring that the shapes in the layout picture and in the individual's picture match.

6. REFERENCES

[1] Oudah M, Al-Naji A, Chahl J. Hand Gesture Recognition Based on Computer Vision: A Review of Techniques. J Imaging. 2020 Jul 23;6(8):73. doi: 10.3390/jimaging6080073. PMID: 34460688; PMCID: PMC8321080.

[2] Karapinar Senturk, Z., & Bakay, M. S. (2021). Machine Learning Based Hand Gesture Recognition via EMG Data. ADCAIJ: Advances in Distributed Computing and Artificial Intelligence Journal, 10(2). https://doi.org/10.14201/ADCAIJ2021102123136

[3] Bakheet, S., Al-Hamadi, A. Robust hand gesture recognition using multiple shape-oriented visual cues. J Image Video Proc. 2021, 26 (2021). https://doi.org/10.1186/s13640-021-00567-1

[4] Abhilash Dayanandan, Akshay Chakkungal, Anooj Kommeri, Deepak Koppuliparambil, Dr. Prashant Nitnaware (May 2020). Gesture Controlled Media Player using TinyYoloV3. IRJET: International Research Journal of Engineering and … J.M. (2020). A wearable biosensing system with in-sensor adaptive machine learning for hand gesture recognition. Nature Electronics, 4, 54-63.

[7] Rubin Bose, S. and Sathiesh Kumar, V. 'In-situ Identification and Recognition of Multi-hand Gestures Using Optimized Deep Residual Network'. 1 Jan. 2021: 6983–6997.

[8] Verdadero, M. S., Martinez-Ojeda, C. O., & Cruz, J. C. D. (2018). Hand Gesture Recognition System as an Alternative Interface for Remote Controlled Home Appliances. In 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM) (pp. 1-5). IEEE.

[9] https://www.geeksforgeeks.org/find-and-draw-contours-using-opencv-python/

[10] https://learnopencv.com/contour-detection-using-opencv-python-c/

[11] https://learnopencv.com/convex-hull-using-opencv-in-python-and-c/

[12] https://theailearner.com/2020/11/09/convexity-defects-opencv/

[13] ResearchGate, Google.

[14] Cevikcan, Ustundag A. Industry 4.0: Managing The Digital Transformation. Springer Series in Advanced Manufacturing, Switzerland, 2018. DOI: 10.1007/978-3-319-57870-5.

[15] Pantic M, Nijholt A, Pentland A, Huang TS. Human Centered Intelligent Human-Computer Interaction (HCI²): How Far Are We From Attaining It? International Journal of Autonomous and Adaptive Communications Systems (IJAACS), vol. 1, no. 2, 2008, pp. 168-187. DOI: 10.1504/IJAACS.2008.019799.

[16] Hamed Al-Saedi A.K., Hassin Al-Asadi A. Survey of Hand Gesture Recognition System. IOP Conference Series: Journal of Physics: Conference Series 1294 042003, 2019.