Binarization of Degraded Text Documents and Palm Leaf Manuscripts

Abstract - Degraded document image processing is a specialized field that has recently drawn an influx of researchers. The first step in preparing a document for further processing is binarization. Depending on the degree of degradation of the original document, global or local thresholding methods are preferred. Thresholding is a useful technique for identifying the cluster of pixels most likely associated with background information while simultaneously separating out the object information. In this paper, we propose a technique based on the complement of the original image. This technique is applied to ancient text documents and palm leaf manuscripts, and the quality of the resulting images is assessed using various quantitative metrics.

Key Words: Binarization, Complement of the image, Image documentation, Threshold, Local method

1. INTRODUCTION

Degraded document image analysis poses numerous challenges for researchers. The degraded condition of historical documents (e.g., bleed-through, ink stains, torn pages) has prompted researchers to develop binarization and enhancement algorithms suited to these challenges. Binarization is the first stage of pre-processing in document image processing and analysis systems. The majority of cultural heritage document collections consist of digitised images. These priceless documents are available for manual annotation on the Internet in order to make their content more accessible. Light, particularly the ultraviolet light present in daylight, can damage documents. Having original documents at home or visiting a local archive or history centre allows us to handle old material with historical evidence, but this comes at a cost. Frequent handling of these original documents causes physical wear and tear, eventually leading to document loss. Furthermore, changes in environment and light can harm the documents. In this context, image analysis for removing background noise and improving document readability requires distinguishing between ancient and modern documents and processing them accordingly.

Binarization techniques based on a global or local threshold are straightforward and practical. A global threshold specifies a single value for all pixel intensities in an image in order to classify them as text object or background [1]. This method fails to remove image noise that is not distributed uniformly. In contrast, a local threshold provides an adaptive solution for images with varying background intensities, with the threshold varying according to the properties of the local region [6,8]. There is a plethora of general-purpose binarization methods available that can handle document images with complex backgrounds. These methods are categorized as either local or adaptive thresholding. Bernsen [2] proposed a neighborhood-based local threshold, Niblack evaluated the threshold at each pixel using the local mean and standard deviation, and Sauvola used two algorithms [4] to calculate a different threshold for each pixel. The authors of [9] proposed a fast entropy-based segmentation method for producing high-quality binarized images of documents with back-to-front interference.

Xiao et al. proposed an entropic thresholding algorithm based on gray-level spatial correlation (GLSC) histograms [12]. They revised and extended the algorithm of Kapur et al. Syed Saqib Bukhari et al. [11] proposed an adaptation of a local binarization method that uses two distinct sets of free parameter values for the foreground and background regions. They show how to estimate foreground regions in a document image using ridge detection. This information is then used to calculate an appropriate threshold, with a different set of free parameter values for the foreground and background regions. Chien-Hsing Chou et al. [13] proposed a method for segmenting an image into regions and determining how to binarize each region. The decision rules are the result of a learning process that starts with training images. Rachid Hedjam et al. [14] proposed an adaptive method based on maximum likelihood (ML) classification that uses a priori information and spatial relationships on the image domain, in addition to the main data, to recover weak text and strokes. Mehmet Sezgin et al. [5] presented a comprehensive assessment of existing local as well as global thresholding methods.
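As a rough illustration of the local thresholds attributed above to Niblack and Sauvola, the sketch below computes both for a single pixel neighbourhood using their commonly quoted forms, T = m + k·s and T = m·(1 + k·(s/R − 1)), where m and s are the local mean and standard deviation. The function names, the defaults k = -0.2 and k = 0.5, and R = 0.5 (half the dynamic range of an image scaled to [0, 1]) are assumptions chosen for illustration, not values taken from this paper.

```python
from statistics import fmean, pstdev


def niblack_threshold(window, k=-0.2):
    """Niblack-style threshold: local mean plus k times local std. dev."""
    return fmean(window) + k * pstdev(window)


def sauvola_threshold(window, k=0.5, r=0.5):
    """Sauvola-style threshold; r is the assumed maximum std. dev."""
    mean = fmean(window)
    return mean * (1.0 + k * (pstdev(window) / r - 1.0))


# Hypothetical 5 x 5 neighbourhood, flattened: bright paper with a few ink pixels.
window = [0.8] * 20 + [0.1] * 5
t_niblack = niblack_threshold(window)
t_sauvola = sauvola_threshold(window)

# A pixel is labelled text when its intensity falls below the local threshold.
ink_is_text = 0.1 < t_sauvola
paper_is_text = 0.8 < t_sauvola
```

In a flat background window the standard deviation is close to zero, so the Sauvola form pulls the threshold well below the local mean, while the Niblack form stays at the mean. That difference is one reason Niblack-style rules tend to mark noise in empty regions as text.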
Mitianoudis and Papamarkos [18] proposed a three-stage approach to document image binarization. The background was removed in the first stage using an iterative median filter, then misclassified pixels were separated in the second stage using local co-occurrence mapping (LCM) and Gaussian mixture clustering, and finally, morphological operators were used to identify and suppress misclassified pixels for better classification of text and background image pixels. Khan and Mollah [19] proposed a binarization technique that involved first removing background noise and improving document quality, then using a variant of Sauvola's binarization method, and finally performing post-processing to find small connected components in the image and remove the unnecessary ones.

A more recent study [20] used a hierarchical deep supervised network (DSN) to binarize document images. The network architecture is split into two sections. The first section distinguishes between foreground and noisy background using high-level features, while the second section preserves foreground (text) information while dealing with noisy background. To accomplish this, the network is designed so that features at different levels are used to preserve fine details of the foreground. The proposed network is made up of three distinct DSNs, the first of which has a small number of convolution layers to generate the low-level feature maps. The second DSN is a slightly deeper structure for producing mid-level feature maps, while the final DSN is a deep structure for producing high-level feature maps. The experimental results on three public datasets show that the proposed model outperforms state-of-the-art binarization algorithms. Westphal et al. [21] proposed document image binarization using a recurrent neural network. To incorporate contextual information into each step of the binarization process, they used Grid LSTM cells to handle multidimensional input. They accomplished this by dividing the input image into non-overlapping blocks of 64 × 64 pixels. These blocks serve as the input sequence for the RNN model. The outputs of four distinct Grid LSTM layers are combined and aligned to produce the mid-level feature sequence (L1). The mid-level features are then fed into two different Grid LSTM layers (L2), which are combined with a bi-directional LSTM to produce the high-level feature map. After that, a fully connected layer (L3) is applied to the high-level feature map to produce the binarization result. The experimental results show that the quality of binarization improved significantly.
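The block-splitting step described for [21] can be pictured with a short sketch. It only illustrates cutting an image into non-overlapping tiles in row-major order; the function name is hypothetical, and rejecting images whose sides are not multiples of the block size is an assumption, since the handling of partial blocks is not described here.

```python
def split_into_blocks(image, size=64):
    """Split a 2-D image (list of rows) into non-overlapping size x size blocks."""
    height, width = len(image), len(image[0])
    if height % size or width % size:
        # Assumption: partial blocks are rejected rather than padded or cropped.
        raise ValueError("image sides must be multiples of the block size")
    blocks = []
    for top in range(0, height, size):
        for left in range(0, width, size):
            blocks.append([row[left:left + size] for row in image[top:top + size]])
    return blocks


# Tiny stand-in for a scanned page: 4 x 6 pixels cut into 2 x 2 blocks.
page = [[r * 6 + c for c in range(6)] for r in range(4)]
sequence = split_into_blocks(page, size=2)
```

The resulting list plays the role of the input sequence: each element is one block, ordered left to right and then top to bottom.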

This paper proposes a general technique for cleaning degraded documents in which the average value of the image is used as the threshold. One way to distinguish object information from background noise is to compute a global intensity threshold that separates the two clusters. We use an algorithm based on the average value of the image. It works best with documents with a non-uniform noise distribution.

The paper has four sections. The first section gives the introduction, literature review, and problem definition. The second section describes the algorithm for cleaning noisy documents. The third section discusses the experimental results. Section four discusses the findings and the scope of future work.

2. METHODOLOGY

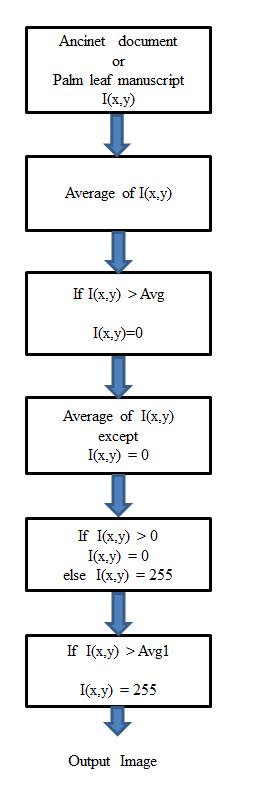

We present (Fig. 1) a technique for binarizing ancient degraded documents and palm leaf manuscripts that belongs to the class of methods that cluster an image's pixels into background and foreground. In this class of technique, grey-level data is subjected to a clustering analysis with the number of clusters always set to two. These two clusters correspond to the two peaks of the histogram. The method computes the average pixel value, which is then used as a threshold. The flowchart of the proposed model, shown in Fig. 1, gives the details of the algorithm.

Fig. 1. Flowchart of the proposed model

The traditional approach in a global thresholding technique [15] is to find a unique threshold that eliminates all pixels representing image background while preserving the others as image foreground. Many real-world images have complicated backgrounds or poor image foregrounds (some foreground pixels have grey values very close to those of some background pixels). In such cases it is difficult to find a single threshold that completely separates the object information from the background. The same is true for local thresholding, which determines threshold values locally, such as pixel by pixel or region by region. In the proposed algorithm, image equalisation is performed after each thresholding operation while evaluating the relative importance of each pixel intensity toward the background. A new threshold is then computed to perform background elimination. This procedure is carried out until the sensitive threshold is reached.
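For comparison with the global-threshold idea discussed above, here is a minimal sketch of a classical histogram-based global threshold in the style of Otsu's method [1], which picks the grey level that maximizes the between-class variance. It assumes intensities scaled to [0, 1] and a 256-bin histogram; the function name and the tie-breaking rule (first maximum wins) are illustrative choices, and this is not the algorithm proposed in this paper.

```python
def otsu_threshold(pixels, bins=256):
    """Return a threshold in (0, 1]; values below it form the dark class."""
    hist = [0] * bins
    for p in pixels:
        hist[min(int(p * bins), bins - 1)] += 1

    total = len(pixels)
    sum_all = sum(i * count for i, count in enumerate(hist))
    best_bin, best_score = 0, -1.0
    weight_dark, sum_dark = 0, 0

    for t in range(bins - 1):
        weight_dark += hist[t]
        sum_dark += t * hist[t]
        weight_bright = total - weight_dark
        if weight_dark == 0 or weight_bright == 0:
            continue  # one class is empty, so no split at this level
        mean_dark = sum_dark / weight_dark
        mean_bright = (sum_all - sum_dark) / weight_bright
        # Between-class variance, up to a constant factor of 1 / total**2.
        score = weight_dark * weight_bright * (mean_dark - mean_bright) ** 2
        if score > best_score:
            best_bin, best_score = t, score
    return (best_bin + 1) / bins


# Hypothetical flattened page: 20 ink pixels at 0.1 and 80 paper pixels at 0.8.
pixels = [0.1] * 20 + [0.8] * 80
threshold = otsu_threshold(pixels)
dark_count = sum(p < threshold for p in pixels)
```

With two clean peaks any level between them separates the classes equally well. The difficulty described above arises when the peaks overlap and no single level does.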

2.1. BINARIZATION ALGORITHM

The Binarization algorithm suitable for noisy documents requires a series of sequential steps, which are as follows:

1. Extract the document image I(x, y) in its degraded (noisy) state.

2. Determine the average intensity Avg of the degraded document I(x, y).

3. Using the average value Avg as a threshold, set the pixels above it to black.

4. Calculate the degraded document's average value Avg1, excluding the black pixels.

5. Using the image's second average (Avg1) as a threshold, set the pixels above it to white.

I(x, y) stands for the given image, where x and y are the horizontal and vertical coordinates of the image, respectively. I(x, y) can take any value between 0 and 1, with I = 1 representing white and I = 0 representing black. The proposed algorithm shifts the image's intermediate tones to the background. In general, any document image contains few useful (foreground) pixels in comparison to the image size (foreground + background). Object information is typically less than 10% of the total pixels in the document. Taking advantage of this property, it is assumed that the background determines the average value of the pixels, even if the document is quite clear.
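The five steps can be read in more than one way. The sketch below follows one possible reading, in which pixels brighter than Avg are blacked out as background, the remaining pixels are complemented so that ink becomes bright, and Avg1 is taken over those remaining pixels. The function name, the use of plain Python lists, and the decision to send pixels below Avg1 to black are assumptions of this sketch, not details confirmed by the paper.

```python
from statistics import fmean


def binarize_two_pass_mean(image):
    """Return a 0/1 mask (1 = text) for rows of grey values in [0, 1]."""
    pixels = [p for row in image for p in row]
    avg = fmean(pixels)  # step 2: first average, Avg

    # Step 3, read in the complemented domain: pixels brighter than Avg are
    # taken as background and set to black (0); the rest are inverted so
    # that dark ink becomes bright.
    complemented = [[0.0 if p > avg else 1.0 - p for p in row] for row in image]

    # Step 4: second average, Avg1, over the pixels that were not blacked out.
    kept = [1.0 - p for p in pixels if p <= avg]
    avg1 = fmean(kept) if kept else 1.0

    # Step 5: complemented values above Avg1 become white (1). Sending the
    # remaining pixels to black (0) is an assumption of this sketch.
    return [[1 if c > avg1 else 0 for c in row] for row in complemented]


# Hypothetical 10 x 10 page: paper at 0.8, a stain at 0.5, ink at 0.1.
page = [[0.8] * 10 for _ in range(10)]
page[0] = [0.5] * 10
page[1][0] = page[1][1] = 0.5
page[5][1:9] = [0.1] * 8
mask = binarize_two_pass_mean(page)
```

In this toy page the stain survives the first pass but falls below Avg1 in the complemented domain, so only the eight ink pixels end up white in `mask`. Inverting the mask gives black text on a white page.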

There is no proper differentiation between foregroundandbackgroundbecausethepixelvaluesinthe degradeddocumentsarenotuniform.Somepixelsfromthe foregroundandbackgroundwilloccupythesameregionin theimagehistogram.Asaresult,theproposedalgorithmwill distinguishpixelsbetweentheforegroundandbackground regions.Thethresholdvalueoftheimageiscriticalinthis segregation. In this case, the average value serves as the thresholdfordistinguishingtheobjectinformationfromthe backgroundinformationinthedocument.So,tobegin,we'll compute the average value of the degraded document. Values that exceed the threshold value are set to black, which equals zero. Recreate the image using the complementarythresholdvaluetotheoriginalimage.Again, we must compute the average of the image while disregarding the black pixels. This will allow for proper separationoftheimage'sforegroundandbackground.Apply thissecondaveragetotheimageasathresholdvalue;values abovethethresholdvaluearesettowhite,indicatingone.As

3. RESULTS AND DISCUSSIONS

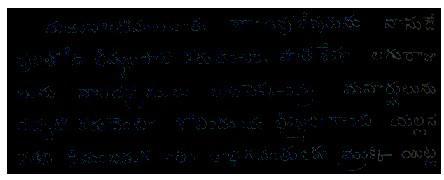

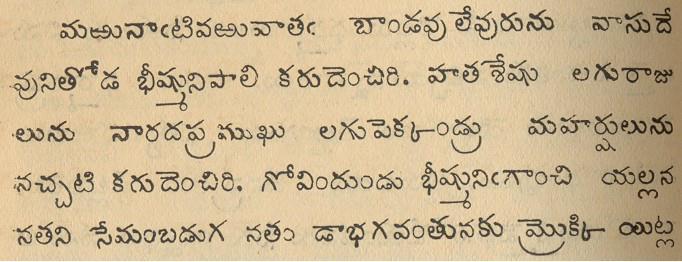

This algorithm is tested on a set of 30 document images. Theyare50to60yearsoldandgatheredfromtheInternet (a Telugu old book titled "Thiagarajaswami Krithis" was publishedin1933atKesariPrintingPressinChennapuri) and scanned copies of old story books (a Telugu old book titled "vydula kathalu" was published in 1942 at Madras Printing Press). Figure 2(a) shows an example of a noisy document with non-uniformly distributed noise. The backgroundofthenoisyimageisgoldenbrownincolour.As shown in Fig.2, it is first converted into a complementary image, which means the background is black and the foreground is white (b). As shown in Fig. 2, the complementedimageisthentransformedintoanoise-free image.

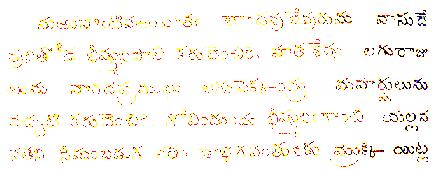

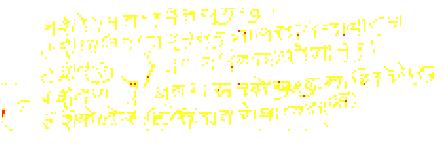

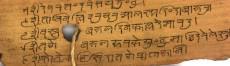

Thealgorithmisthenappliedtoover100-year-oldpalmleaf manuscriptscollectedfromtheInternetinthesecondphase. Nearly30palmleafsamplesaretestedusingthisalgorithm. Figure 3 shows an example of a palm leaf manuscript (a). Thisisthetypicalcasefortestingpalmleafmanuscriptswith thisalgorithmbecausethenoiseconcentrationvariesfrom

aresultofthisaction,thenoiseintheimage'sbackgroundis removed..(a) (b) (c) Fig2.(a)DegradedTelugutextsample (b) Complementaryofimage(a)(c)Resultantimage

one area to another within the sample. As a result, it is difficulttodistinguishthebackgroundfromtheforeground. Inthiscase,theimage'sbackgroundisalsogoldenbrown. Figure 3 shows the complement to the original image (b). Afterapplyingthedefinedalgorithmtothecomplemented image,theresultantimageisdisplayedinFig3.(c).

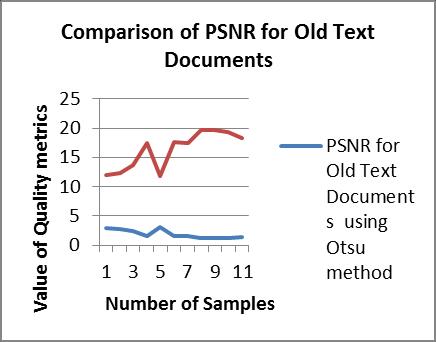

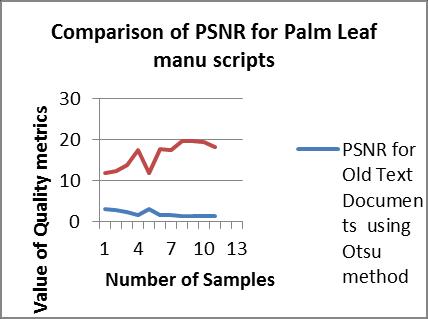

Three metrics are used to assess the algorithm's performance: Peak Signal to Noise Ratio (PSNR), Mean Square Error, and Average Difference. These parameters are evaluated in order to compare the proposed algorithm's performance to that of Otsu's method on both palm leaf manuscripts and antique text documents. When compared to Otsu's method, the proposed algorithm's metrics show a significant improvement. The PSNR increases in both graphs (Figs. 4 and 5). This algorithm works best with older text documents. It needs to be improved if palm leaf manuscripts are to produce better results.
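The paper does not spell out its formulas for these metrics, so the sketch below uses the usual textbook definitions, assuming a reference image and a processed image of the same size, both flattened, with intensities in [0, 1]. Which image serves as the reference, the function names, and the sign convention of the average difference (reference minus processed) are assumptions.

```python
import math


def mean_square_error(reference, processed):
    """Mean of the squared pixel differences."""
    return sum((r - p) ** 2 for r, p in zip(reference, processed)) / len(reference)


def peak_signal_to_noise_ratio(reference, processed, peak=1.0):
    """PSNR in decibels; infinite when the two images are identical."""
    error = mean_square_error(reference, processed)
    return math.inf if error == 0 else 10.0 * math.log10(peak ** 2 / error)


def average_difference(reference, processed):
    """Mean of the signed pixel differences (reference minus processed)."""
    return sum(r - p for r, p in zip(reference, processed)) / len(reference)


# Hypothetical 2 x 2 images, flattened; one pixel differs.
reference = [1.0, 1.0, 0.0, 0.0]
processed = [1.0, 0.0, 0.0, 0.0]
mse_value = mean_square_error(reference, processed)
psnr_value = peak_signal_to_noise_ratio(reference, processed)
ad_value = average_difference(reference, processed)
```

A lower mean square error corresponds to a higher PSNR, so the two metrics move together. The average difference additionally shows whether the processed image is on balance darker or brighter than the reference.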

5. CONCLUSION

The current work proposes using the average value of the image as a threshold. The image of the document under test is binarized using the average value of the image. The first average value of the image is used in this algorithm to complement the image and avoid unnecessary background information. The second average removes any background noise left over after the first average has been applied. During the cleaning process, some text information is removed along with the background noise. This algorithm has been shown to be effective on low-noise historical document images as well as palm leaf manuscripts. However, the results on palm leaf manuscripts require further improvement.

6. FUTURE SCOPE OF THE WORK

The procedure described above could be extended to noise-free samples that are manually contaminated with various noises at various levels, such as pepper noise, Gaussian noise, and so on. The noisy documents would then be cleaned using the defined algorithm and other methods, and quantitative metrics for identifying information loss during the cleaning process would be established.
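A minimal sketch of how such controlled contamination might be set up is given below, assuming flattened images with intensities in [0, 1]. The function names, the noise levels, and the fixed random seed are hypothetical choices for illustration only.

```python
import random


def add_pepper_noise(pixels, fraction, rng):
    """Return a copy with the given fraction of pixels set to black (0.0)."""
    noisy = list(pixels)
    count = round(fraction * len(noisy))
    for index in rng.sample(range(len(noisy)), count):
        noisy[index] = 0.0
    return noisy


def add_gaussian_noise(pixels, sigma, rng):
    """Return a copy with zero-mean Gaussian noise added, clipped to [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


rng = random.Random(0)   # fixed seed so the contamination is repeatable
clean = [1.0] * 100      # hypothetical noise-free, all-white sample
peppered = add_pepper_noise(clean, fraction=0.10, rng=rng)
gaussian = add_gaussian_noise(clean, sigma=0.05, rng=rng)
```

Because the clean sample is known, the cleaned output can be compared against it directly, which is what makes measuring information loss possible.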

REFERENCES

[1] N. Otsu, "A threshold selection method based on grey level histograms," IEEE Transactions on Systems, Man, and Cybernet., 9(1), 1979, pp. 62-66.

[2] W. Niblack, "An Introduction to Digital Image Processing," Prentice Hall, 1986, pp. 115-116.

[3] J. Sauvola, M. Pietikainen, "Adaptive Document Image Binarization," Pattern Recognition, 33, 2000, pp. 225-236.

[4] Mehmet Sezgin, Bulent Sankur, "Survey over image thresholding techniques and quantitative performance evaluation," Journal of Electronic Imaging, vol. 13(1), January 2004, p. 146.

[5] E. Kavallieratou, "A Binarization Algorithm Specialized on document images and photos," 8th Int. Conf. on Document Analysis and Recognition, 2005, pp. 463-467.

[6] George D. C. Cavalcanti, Eduardo F. A. Silva, "A Heuristic Binarization Algorithm for Documents with Complex Background," 1-4244-0481, IEEE, 2006.

[7] B. Gatos, I. Pratikakis, and S. J. Perantonis, "Adaptive degraded document image binarization," Pattern Recognition, vol. 39, pp. 317-327, 2006.

[8] João Marcelo Monte da Silva, Rafael Dueire Lins, Fernando Mário Junqueira Martins, Rosita Wachenchauzer, "A New and Efficient Algorithm to Binarize Document Images Removing Back-to-Front Interference," Journal of Universal Computer Science, vol. 14, no. 2 (2008), 299-313.

[9] Y. Xiao, Z. G. Cao, and Zhang, "Entropic thresholding based on gray-level spatial correlation histogram," Proc. 19th Int. Conf. on Pattern Recognition (ICPR 2008), Tampa, FL, USA, 8-11 December 2008, pp. 1-4.

[10] Syed Saqib Bukhari, Faisal Shafait, Thomas M. Breuel, "Adaptive Binarization of Unconstrained Hand-Held Camera-Captured Document Images," Journal of Universal Computer Science, vol. 15, no. 18 (2009), 3343-3363.

[11] A. V. S. Rao, Tinnati Sreenivasu, N. V. Rao, T. S. K. Prabhu, A. S. C. S. Sastry, L. P. Reddy, "Binarization of Documents with complex Backgrounds," Proceedings of International Conference on Machine Vision, 978-0-7695-3944-7/10, 2010.

[12] Chien-Hsing Chou, Wen-Hsiung Lin, Fu Chang, "A binarization method with learning-built rules for document images produced by cameras," Pattern Recognition 43 (2010) 1518-1530.

[13] Rachid Hedjam, Reza Farrahi Moghaddam, Mohamed Cheriet, "A spatially adaptive statistical method for the binarization of historical manuscripts and degraded document images," Pattern Recognition (Elsevier), 2011.

[14] Maythapolnun Athimethphat, "A Review on Global Binarization Algorithms for Degraded Document Images," AU J.T. 14(3): 188-195 (Jan. 2011).

[15] K. Ntirogiannis, B. Gatos, I. Pratikakis, "Performance Evaluation Methodology for Historical Document Image Binarization," IEEE Transactions on Image Processing, vol. 22, no. 2, pp. 595-609, 2013.

[16] Sehad, Abdenour, "Ancient degraded document image binarization based on texture features," 8th International Symposium on Image and Signal Processing and Analysis (ISPA), pp. 189-193, 4-6 September 2013.

[17] N. Mitianoudis, N. Papamarkos, "Document image binarization using local features and Gaussian mixture modeling," Image Vis. Comput., 2015; 38: 33-51.

[18] I. Pratikakis, K. Zagoris, G. Barlas, B. Gatos, "ICFHR2016 handwritten document image binarization contest (H-DIBCO 2016)," Proceedings of the 15th International Conference on Frontiers in Handwriting Recognition (ICFHR), Shenzhen, China, 23-26 October 2016, pp. 619-623.

[19] Q. N. Vo, S. H. Kim, H. J. Yang, G. Lee, "Binarization of degraded document images based on hierarchical deep supervised network," Pattern Recognition, 2018; 74: 568-586.

[20] F. Westphal, N. Lavesson, H. Grahn, "Document image binarization using recurrent neural networks," Proceedings of the 2018 13th IAPR International Workshop on Document Analysis Systems (DAS), Vienna, Austria, 24-27 April 2018, pp. 263-268.