SLIDE PRESENTATION BY HAND GESTURE RECOGNITION USING MACHINE LEARNING

G. Reethika*1, P. Anuhya*2, M. Bhargavi*3

*1 JNTU, ECE, Sreenidhi Institute of Science and Technology, Hyderabad, Telangana, India

(Dr. SN Chandra Shekar, Department of ECE, Sreenidhi Institute of Science and Technology, Hyderabad, Telangana, India)

***

ABSTRACT

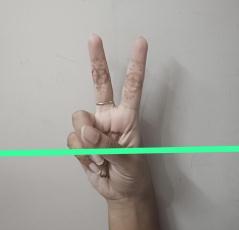

In recent years, research in Human Computer Interaction (HCI) has focused on natural interaction techniques. Real-time hand gesture recognition has been implemented in many settings where we interface with computers. The detection of hand motions depends on a camera, and the primary mode of interaction is a virtual HCI device built around a web camera. In this work, the hand motions employed in contemporary vision-based Human Computer Interaction techniques are examined. The project is helpful in situations where users cannot use or touch input devices: with gesture recognition, a user can perform a specific action without physical access to input devices such as a mouse or keypad. The user can draw with the index finger and use a pointer by raising two fingers, the index and middle fingers. The user can undo a drawing with three fingers, the index, middle, and ring fingers. The user can move to the next file with the little finger and to the previous file with the thumb pointing to the left.

Keywords: Hand Posture, Hand Gesture, Human Computer Interaction (HCI), Segmentation, Feature Extraction, Classification Tools, Neural Networks.

I. INTRODUCTION

Hand gestures are a vital part of nonverbal communication and form an indispensable part of our interactions with the environment. Gesture recognition and classification systems can aid in translating these gestures. Moreover, hand gesture classification is a crucial tool in human-computer interaction. These gestures may be used to control equipment in the workplace and to replace traditional input devices such as a mouse or keyboard in virtual reality applications. There are two fundamental approaches to the classification of hand gestures. The first is the vision-based approach, which uses cameras to capture the pose and movement of the hand and algorithms to process the recorded images. Although this approach is popular, it is very computationally intensive, as images must undergo substantial preprocessing to segment features such as the image's colour, pixel values, and the shape of the hand. Moreover, the current geopolitical climate limits the wide application of this technique, because users are less willing to accept cameras in their private space, particularly in applications that require constant monitoring of the hands.

II. LITERATURE REVIEW MATERIALS AND METHODOLOGY

Hand gesture recognition is one of the most viable and popular solutions for improving human-computer interaction.

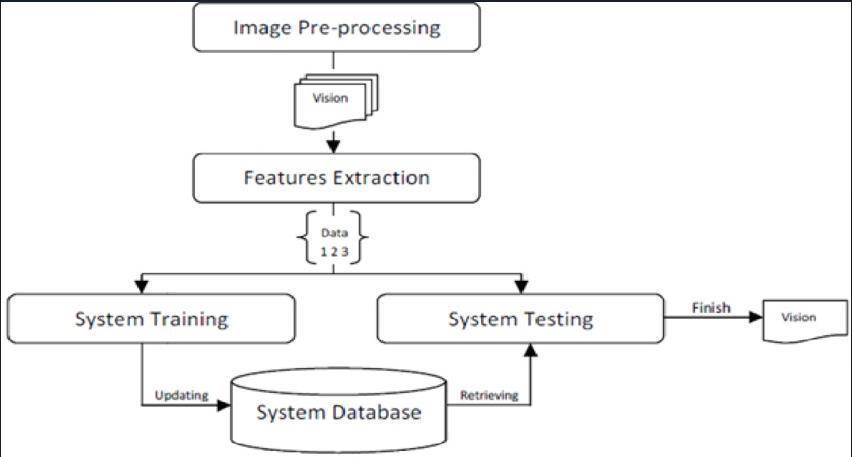

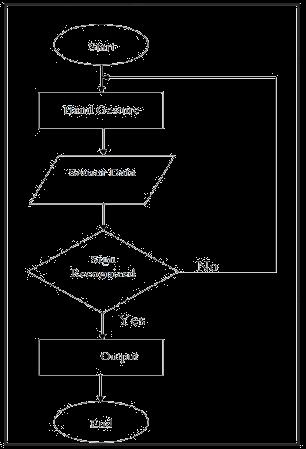

There are five main processes in a system that recognises hand gestures using vision: input image acquisition, pre-processing, feature extraction, gesture classification, and development of an appropriate command for the system.
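The five stages can be sketched as a chain of small functions. This is a minimal illustration, not the implementation used in this work: the toy frame, the threshold value, the area feature, and the gesture and command labels are all assumptions made for the sketch.

```python
from typing import List

Frame = List[List[int]]  # hypothetical grey-scale image: rows of 0-255 values


def acquire() -> Frame:
    """Stage 1: image acquisition (a fixed toy frame stands in for a webcam)."""
    return [
        [0, 0, 0, 0],
        [0, 200, 220, 0],
        [0, 210, 0, 0],
        [0, 0, 0, 0],
    ]


def preprocess(frame: Frame, threshold: int = 128) -> Frame:
    """Stage 2: pre-processing, here a simple binary threshold (assumed)."""
    return [[1 if px >= threshold else 0 for px in row] for row in frame]


def extract_features(mask: Frame) -> dict:
    """Stage 3: feature extraction, here only the foreground area (assumed)."""
    return {"area": sum(sum(row) for row in mask)}


def classify(features: dict) -> str:
    """Stage 4: gesture classification with a made-up area rule."""
    return "open_hand" if features["area"] >= 3 else "no_gesture"


def to_command(gesture: str) -> str:
    """Stage 5: map the gesture to an application command (labels assumed)."""
    return {"open_hand": "NEXT_SLIDE"}.get(gesture, "NOOP")


def run_pipeline() -> str:
    """Run all five stages in order on the toy frame."""
    return to_command(classify(extract_features(preprocess(acquire()))))
```

Keeping each stage behind its own function means a real camera reader or a trained classifier could replace the toy version without touching the other stages.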

The pre-processing and feature extraction stages of a vision-based, gesturally controllable HCI system use a variety of techniques. A major source of inspiration for HCI researchers has been the creation of an intelligent and effective human-computer interface that allows users to interact with computers in brand-new ways beyond the conventional limitations of the keyboard and mouse. The most prevalent uses of visual sensors today are in robotics, visual surveillance, industry, and medicine. Applications include industrial robot control, vision-based hand gesture recognition, translation of sign language, intelligent surveillance, lie detection, manipulation of the visual environment, and rehabilitation of people with physical disabilities of the upper extremities.

As an essential input method, vision-based hand gesture recognition algorithms have the benefit of being discreet and can be a natural way of interacting with machines. Accurate gesture detection at various angles is a difficult element of this method. The primary objective of this paper is to examine some of the most recent developments in vision-based hand gesture recognition systems for HCI. It also aims to determine whether it is feasible to identify human activity using hand gesture analysis, to compile information on best practices in the design and development of a vision-based hand gesture recognition system for human-computer interaction, and to research design issues and challenges in the field. Here, the various vision-based hand gesture detection systems for human-computer interaction are analysed qualitatively to determine their advantages and disadvantages. A suggestion is also given regarding potential future developments for these systems.

The primary issues raised in this study can be summarised as follows:

• Identify camera-vision strategies for hand gestures in human-computer interaction.

• Compile and evaluate the methods employed for developing vision-based hand gesture recognition systems for human-computer interaction.

• Describe the advantages and disadvantages of each system.

• Infer potential augmentations and enrichments.

The main goal of this study is to control any application based on computer vision algorithms using the two most significant modes of interaction, the head and the hand. The video input stream is segmented, and the appropriate gesture is identified from the shape and pattern of the hand's motion. The head movement is represented by a hidden Markov model. Pre-processing for recognising hand and head gestures proceeds as follows. First, a picture is taken with the camera. Second, the Viola-Jones algorithm is used to detect hands and faces. An artificial neural network is used to train classifiers to recognise hands and faces in photographs. The region of the head can be determined using face detection. The method for recognising head gestures is as follows.

First, all optical flows estimated with the gradient approach within the extracted head region are taken as values denoting head movement. Second, the outcomes of the head motions are used for recognition with finite state automata.
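The second step, recognition with a finite state automaton, can be illustrated with a small sketch. It assumes the optical flow inside the head region has already been averaged into one (dx, dy) vector per frame. The magnitude threshold and the definitions of "nod" and "shake" are hypothetical choices for illustration, not taken from the cited work.

```python
from typing import Iterable, Tuple


def quantise(flow: Tuple[float, float], min_mag: float = 1.0) -> str:
    """Turn a mean optical-flow vector (dx, dy) into a direction symbol."""
    dx, dy = flow
    if max(abs(dx), abs(dy)) < min_mag:
        return "-"  # too small to count as motion
    if abs(dx) >= abs(dy):
        return "R" if dx > 0 else "L"
    return "D" if dy > 0 else "U"  # image y axis points downwards


def recognise(flows: Iterable[Tuple[float, float]]) -> str:
    """Finite state automaton over direction symbols.

    Hypothetical gesture definitions: a "nod" is a vertical move followed by
    the opposite vertical move; a "shake" is the horizontal equivalent.
    """
    opposite = {"U": "D", "D": "U", "L": "R", "R": "L"}
    state = "idle"  # either "idle" or the last direction seen
    for symbol in map(quantise, flows):
        if symbol == "-":
            continue  # ignore still frames, keep the current state
        if state != "idle" and symbol == opposite[state]:
            return "nod" if symbol in "UD" else "shake"
        state = symbol
    return "none"
```

For example, a downward flow followed by an upward flow, such as `recognise([(0.1, 2.0), (0.0, -2.5)])`, is accepted as a nod under these assumed definitions.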

The main goal of static hand gesture recognition is to classify the given hand gesture data, which is represented by various attributes, into a preset, finite number of gestures. The primary goal of this work is to investigate the use of two feature extraction techniques, namely hand contour and complex moments, to address the problem of hand gesture detection by highlighting the key benefits and drawbacks of each technique. An artificial neural network is created using the back-propagation learning technique for classification.

Three steps are applied to the hand gesture image: pre-processing, feature extraction, and classification. To prepare the hand gesture image for the feature extraction stage, certain techniques are used in the pre-processing stage to separate the hand gesture from its background. The hand contour is the feature employed in the first strategy to address the scaling and translation problems. Computing the Inverse Discrete Fourier Transform (IDFT) allows the complex moments algorithm to be reconstructed. This method uses an affine transformation at a specific angle to make input gestures captured from different angles roughly equal to input gestures made at 0 degrees. As a result, the suggested approach can be regarded as a successful one for multi-angle gesture detection. The system's performance can be deemed adequate and usable.
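To make the scaling, translation, and angle issues concrete, the sketch below normalises a hand contour given as a list of (x, y) points. It is an illustration under stated assumptions rather than the cited method. A plain in-plane rotation stands in for the general affine transformation, the viewing angle is assumed to be known in advance, and `normalise_contour` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def normalise_contour(points: List[Point], angle_deg: float = 0.0) -> List[Point]:
    """Centre, rotate by -angle_deg, and rescale a contour to unit radius."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centred = [(x - cx, y - cy) for x, y in points]  # removes translation

    theta = math.radians(-angle_deg)  # undo the assumed viewing angle
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rotated = [(x * cos_t - y * sin_t, x * sin_t + y * cos_t) for x, y in centred]

    # Fall back to 1.0 so a degenerate single-point contour does not divide by zero.
    radius = max(math.hypot(x, y) for x, y in rotated) or 1.0
    return [(x / radius, y / radius) for x, y in rotated]  # removes scale
```

After normalisation, the same hand shape seen at a different position, size, or known in-plane angle produces approximately the same point list, which simplifies the job of the classifier.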

To make the interaction more effective and dependable, a vision-based system to control different mouse actions, such as left and right clicking, is presented. In this study, a vision-based interface for controlling a computer mouse with 2D hand movements is described. Hand movements are detected with a camera-based colour detection technique. This technique primarily focuses on using a web camera to create a virtual HCI device in a practical way. The centroid of each input image is located. Because the centroid moves with the hand, it serves as the fundamental sensor for moving the cursor on the computer screen.
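A minimal sketch of the centroid idea follows. It assumes the colour detection step has already produced a binary mask, represented here as a list of rows. The function names and the linear frame-to-screen mapping are illustrative assumptions, not details from the cited system.

```python
from typing import List, Optional, Tuple


def mask_centroid(mask: List[List[int]]) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of non-zero pixels, or None if empty."""
    total = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                total += 1
                sum_x += x
                sum_y += y
    if total == 0:
        return None
    return sum_x / total, sum_y / total


def to_screen(
    centroid: Tuple[float, float],
    frame_size: Tuple[int, int],
    screen_size: Tuple[int, int],
) -> Tuple[int, int]:
    """Linearly scale frame coordinates to screen pixel coordinates.

    Sizes are (width, height); the frame must be at least 2 pixels each way.
    """
    (cx, cy), (fw, fh), (sw, sh) = centroid, frame_size, screen_size
    return round(cx / (fw - 1) * (sw - 1)), round(cy / (fh - 1) * (sh - 1))
```

In a live system the returned coordinates would be passed to whatever cursor API the platform provides. Smoothing the centroid over a few frames is a common way to reduce jitter.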

III. MODELING AND ANALYSIS

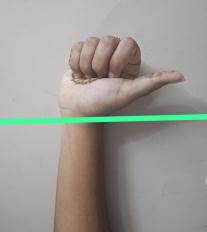

INDEX FINGER AND MIDDLE FINGER ARE USED AS A POINTER
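The gesture vocabulary listed in the abstract can be written down as a lookup table. The sketch below assumes that an earlier stage reports which fingers are raised as a five-element tuple. That encoding, the action names, and the helper `action_for` are hypothetical, and the sketch does not check the direction in which the thumb points.

```python
from typing import Tuple

# Finger order: (thumb, index, middle, ring, little); 1 = raised, 0 = folded.
FingerState = Tuple[int, int, int, int, int]

GESTURE_ACTIONS = {
    (0, 1, 0, 0, 0): "draw",      # index finger only
    (0, 1, 1, 0, 0): "pointer",   # index and middle fingers
    (0, 1, 1, 1, 0): "undo",      # index, middle, and ring fingers
    (0, 0, 0, 0, 1): "next",      # little finger only
    (1, 0, 0, 0, 0): "previous",  # thumb only (pointing left in the paper)
}


def action_for(fingers: FingerState) -> str:
    """Look up the slide action for a finger state; unknown states do nothing."""
    return GESTURE_ACTIONS.get(fingers, "none")
```

For instance, `action_for((0, 1, 1, 0, 0))` selects the pointer, matching the figure above.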

V. CONCLUSION

• The gesture recognition algorithm is relatively robust and accurate.

• Convolution can be slow, so there is a trade-off between speed and accuracy.

• In the future, we will investigate other methods of extracting feature vectors without performing expensive convolution operations.

ACKNOWLEDGEMENTS

We would like to express our special gratitude to our guide, Dr. SN Chandra Shekar, and our coordinator, Dr. T. Rama Swamy, who gave us the golden opportunity to do this wonderful project. It led us to do a lot of research and learn new things, for which we are truly thankful. In addition, we would like to thank our friends, who helped us a lot in finalizing this project within the limited time frame.

VI. REFERENCES

A. Agrawal, R. Raj and S. Porwal, "Vision-based multimodal human-computer interaction using hand and head gestures," 2013 IEEE Conference on Information & Communication Technologies, Thuckalay, Tamil Nadu, India, 2013, pp. 1288-1292.

Haitham Badi, "Recent methods in vision-based hand gesture recognition", Proceedings of 2013 IEEE Conference on Information and Communication Technologies (ICT 2013), Thuckalay, Tamil Nadu, India, Vol. 31, Issue 4, pp. 123-141, 2013.

Veluchamy, L. R. Karlmarx and J. J. Sudha, "Vision based gesturally controllable human computer interaction system," 2015 International Conference on Smart Technologies and Management for Computing, Communication, Controls, Energy and Materials (ICSTM), Chennai, 2015, pp. 8-1.

S. Koceski and N. Koceska, "Vision-based gesture recognition for human-computer interaction and mobile robot's freight ramp control," Proceedings of the ITI 2010, 32nd International Conference on Information Technology Interfaces, Cavtat, 2010, pp. 289-294.

G. Baruah, A. K. Talukdar and K. K. Sarma, "A robust viewing angle independent hand gesture recognition system," 2015 International Conference on Computing and Network Communications (CoCoNet), Trivandrum, 2015, pp. 842-847.

S. Thakur, R. Mehra and B. Prakash, "Vision based computer mouse control using hand gestures," 2015 International Conference on Soft Computing Techniques and Implementations (ICSCTI), Faridabad, 2015, pp. 85-89.

S. Song, D. Yan and Y. Xie, "Design of control system based on hand gesture recognition," 2018 IEEE 15th International Conference on Networking, Sensing and Control (ICNSC), Zhuhai, 2018, pp. 1-4.

G. R. S. Murthy, R. S. Jadon. (2009). "A Review of Vision Based Hand Gestures Recognition," International Journal of Information Technology and Knowledge Management, vol. 2(2), pp. 405-410.

P. Garg, N. Aggarwal and S. Sofat. (2009). "Vision Based Hand Gesture Recognition," World Academy of Science, Engineering and Technology, Vol. 49, pp. 972-977.

Fakhreddine Karray, Milad Alemzadeh, Jamil Abou Saleh, Mo Nours Arab. (2008). "Human-Computer Interaction: Overview on State of the Art", International Journal on Smart Sensing and Intelligent Systems, Vol. 1(1).

Wikipedia Website.

Mokhtar M. Hasan, Pramoud K. Misra. (2011). "Brightness Factor Matching For Gesture Recognition System Using Scaled Normalization", International Journal of Computer Science & Information Technology (IJCSIT), Vol. 3(2).

Xingyan Li. (2003). "Gesture Recognition Based on Fuzzy C-Means Clustering Algorithm", Department of Computer Science, The University of Tennessee Knoxville.

S. Mitra and T. Acharya. (2007). "Gesture Recognition: A Survey", IEEE Transactions on Systems, Man and Cybernetics, Part C: Applications and Reviews, vol. 37(3), pp. 311-324, doi:10.1109/TSMCC.2007.893280.

Simei G. Wysoski, Marcus V. Lamar, Susumu Kuroyanagi, Akira Iwata. (2002). "A Rotation Invariant Approach On Static-Gesture Recognition Using Boundary Histograms And Neural International Journal of Artificial Intelligence & Applications (IJAIA), Vol. 3, No. 4, July 2012