International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 10 Issue: 01 | Jan 2023 www.irjet.net p-ISSN:2395-0072


Usha

Abstract - The partial or complete loss of vision can create many difficulties in movement and other daily activities. People with such problems need to recognize objects around them to support navigation and avoid obstacles. One solution is to build a system that recognizes objects and provides voice responses for accessibility. The simplest way to do this is to combine object localization and classification. The deep-learning-based approach employs a CNN to perform end-to-end object detection. The proposed system aims to serve as an object detector (thereby detecting obstacles) and a currency detector. The system adds tags to objects in the camera's line of sight and provides voice feedback for them. The detection model is built using TensorFlow and Keras on the pre-trained MobileNet network.

Key Words: CNN, MobileNet, TensorFlow, Transfer Learning, Detection

The biggest challenge for a blind person, especially one with complete loss of vision, is to navigate around places. Visually impaired people are those who have a reduced ability to see or are completely unable to see. Object detection is a field related to computer vision and image processing that finds real-world objects in images or in real time. Computer vision uses a combination of machine learning algorithms and image processing algorithms to mimic the way the human brain interprets what it sees. Object detection for the visually impaired is the need of the hour, as they need to recognize day-to-day objects around them, protect themselves from obstacles and navigate better. Visually impaired people can then lead a better and more independent life. Braille (pattern-based translation), canes and tactile aids are some of the accessibility equipment currently available. This system uses convolutional neural networks for object detection. The main aim of object detection is to locate objects in the image and classify them using the labelled classes. Here it works better than its simple image-processing counterpart, as it describes the scene under the camera instead of returning a processed image. This system will use the mobile camera to capture the scene in real time while simultaneously detecting objects in the frame and providing voice output. This system will be used for obstacle detection as well as currency detection.

***

Researchers have used different approaches for object detection and currency detection.

Phadnis, Mishra and Bendale [1] use Google's TensorFlow Object Detection API, a framework for deep learning, and train it on their custom dataset. SSD MobileNet, a predefined model offered by TensorFlow, is used as the base and fine-tuned to improve the accuracy and the range of objects that can be detected. The downside of this tracking project was privacy invasion. Semary et al. [2], in their case study, demonstrate simple image processing techniques for currency detection. In contrast, Zhang and Yan [3] use deep learning techniques for currency detection: four different models were tested using the Single Shot Detector (SSD) algorithm, and the model with the best accuracy was picked for deployment.

In "Object Detection with Deep Learning: A Review" [4], deep-learning-based object detection frameworks that handle different sub-problems, with different degrees of modification to R-CNN, are reviewed. The review starts with generic object detection pipelines, which provide base architectures for other related tasks. Then three other common tasks, namely salient object detection, face detection and pedestrian detection, are also briefly reviewed. Through [1], we are introduced to a class of efficient models for mobile applications called MobileNets. MobileNets [5] are based on a streamlined architecture that uses depthwise separable convolutions to build lightweight deep neural networks. That paper presents extensive experiments showing the strong performance of MobileNet compared to other popular models on ImageNet classification.
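The computational saving that [5] attributes to depthwise separable convolutions can be written out explicitly. Using the notation of the MobileNets paper, with a $D_K \times D_K$ kernel, $M$ input channels, $N$ output channels and a $D_F \times D_F$ output feature map, the multiply-add costs and their ratio are:

$$
\text{standard: } D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F
$$

$$
\text{depthwise separable: } D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F
$$

$$
\frac{D_K^2\, M\, D_F^2 + M\, N\, D_F^2}{D_K^2\, M\, N\, D_F^2} = \frac{1}{N} + \frac{1}{D_K^2}
$$

With $3 \times 3$ kernels ($D_K = 3$) and $N$ in the hundreds, the ratio is close to $1/9$, which is where the "8 to 9 times less computation" reported in [5] comes from.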

Existing accessibility applications for the visually impaired typically provide object detection and currency recognition as separate applications. This means multiple apps have to be downloaded for different purposes. This project combines the two modules into one application for easier use. It uses two neural networks for detection: one for objects and obstacles and the other for currency. Both networks run in a single Android application, which provides voice output for alerts and recognitions. The system hardware is a smartphone with a rear camera for real-time capture of frames. The models are trained and tested on Google Colaboratory with a GPU using Python.

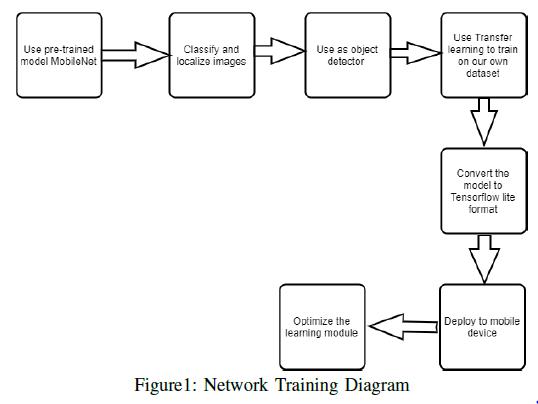

This system uses two neural networks, both based on MobileNet. MobileNet uses depthwise separable convolutions: instead of filtering and combining all input channels in a single step, it first applies a single filter to each input channel separately (a depthwise convolution) and then combines the filtered channels with a 1×1 pointwise convolution [5]. This factorization greatly reduces computation and model size, so the network runs quickly. Other models such as ResNet, AlexNet or DarkNet could also serve as the pre-trained network, but MobileNet, being lightweight, is very quick to run on smartphones and hence apt for this project. Figure 1 describes the network training plan used for building the system.
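To make the two-step structure concrete, the following is a minimal NumPy sketch of a depthwise separable convolution. It assumes stride 1, no padding, and omits the biases, batch normalization and activations that real MobileNet layers include; the function names are hypothetical and this is not how TensorFlow implements the layer. `param_counts` compares the weight count against a standard convolution of the same shape.

```python
import numpy as np

def depthwise_conv2d(x, dw_kernels):
    """Depthwise step: filter each input channel with its own k x k kernel.

    x: (H, W, M) feature map; dw_kernels: (k, k, M), one kernel per channel.
    Uses stride 1 and no padding, so the output is (H-k+1, W-k+1, M).
    Channels are never mixed in this step.
    """
    h, w, m = x.shape
    k = dw_kernels.shape[0]
    out = np.zeros((h - k + 1, w - k + 1, m))
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch = x[i:i + k, j:j + k, :]                     # (k, k, M)
            out[i, j, :] = np.sum(patch * dw_kernels, axis=(0, 1))
    return out

def pointwise_conv2d(x, pw_kernels):
    """Pointwise step: a 1x1 convolution that mixes M channels into N outputs.

    x: (H, W, M); pw_kernels: (M, N). Returns (H, W, N).
    """
    return x @ pw_kernels          # matrix product over the channel axis

def param_counts(k, m, n):
    """Weights (no biases) of a standard k x k conv vs. a depthwise separable one."""
    standard = k * k * m * n
    separable = k * k * m + m * n  # depthwise kernels + 1x1 pointwise kernels
    return standard, separable

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 3))     # e.g. a tiny 3-channel (RGB) input
y = pointwise_conv2d(depthwise_conv2d(x, rng.standard_normal((3, 3, 3))),
                     rng.standard_normal((3, 16)))
print(y.shape)                  # (6, 6, 16)
print(param_counts(3, 32, 64))  # (18432, 2336)
```

For 32 input and 64 output channels the separable layer needs 2336 weights instead of 18432, roughly 7.9 times fewer, which is consistent with the reduction reported in [5].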

The medium to run the neural networks will be a mobile application. When the app is opened and the camera is turned on, the object detection network will locate objects in the camera's line of sight, draw bounding boxes around them and label each box with the identified class. The other network will detect currency denominations using classification only. Both networks will use Google's Text-to-Speech to convert their results into voice output.

The proposed system detects objects through the mobile camera, provides voice output for them, warns against obstacles and identifies currency denominations. The project thus addresses basic problems faced by the visually impaired, i.e. recognizing objects around them, supporting navigation and avoiding bumping into obstacles.

The first network uses Google's TensorFlow Object Detection API. The detection module is built on a pre-trained MobileNet using the SSD [8] algorithm on the COCO dataset; it is therefore trained on 90 classes. This network will be used for object and obstacle detection. The model is picked from the TensorFlow Model Zoo, which contains several models built with different algorithms. Single Shot means that the tasks of object localization and classification are done in a single forward pass of the network. MultiBox is the name of a technique for bounding box regression developed by Szegedy et al. [8]. The network is thus an object detector that also classifies the objects it detects.

The second neural network is the same MobileNet, trained on ImageNet, which has 1000 classes. This network will be retrained on a currency dataset for currency detection using transfer learning. Keras, a neural network library capable of running on TensorFlow, is used. Transfer learning adapts the MobileNet architecture by training it on a collected currency dataset of different denominations: the base layers are frozen and new layers are added on top. The training data is loaded through the ImageDataGenerator, which feeds it to training in batches. The model is then compiled and trained for 10 epochs on a GPU using the Adam [6] optimizer, after which the trained model can be used for predictions. The number of epochs is a hyperparameter defined for training; one epoch means that the entire training dataset is passed forward and backward through the neural network once.
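The currency training steps above (frozen base, newly added trainable layer, mini-batches, epochs, Adam) can be illustrated without TensorFlow. The NumPy sketch below is a simplified stand-in, not the authors' Keras code: a fixed random projection with ReLU plays the role of the frozen MobileNet base, a single softmax layer is the new head, and synthetic clusters stand in for denomination images. All names (`frozen_base`, `train_head`, etc.) are hypothetical, and every hyperparameter except the 10 epochs and the choice of Adam is an assumption.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical stand-in for the frozen MobileNet base: a fixed random projection
# followed by ReLU. These weights are created once and never updated below.
IN_DIM, FEAT_DIM = 20, 64
W_FROZEN = rng.normal(0.0, 1.0 / np.sqrt(IN_DIM), size=(IN_DIM, FEAT_DIM))

def frozen_base(x):
    return np.maximum(x @ W_FROZEN, 0.0)

def batches(x, y, batch_size, rng):
    """Yield shuffled mini-batches; one full pass over them is one epoch."""
    idx = rng.permutation(len(x))
    for start in range(0, len(x), batch_size):
        sel = idx[start:start + batch_size]
        yield x[sel], y[sel]

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)        # for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_head(x, y, num_classes, epochs=10, batch_size=32, lr=0.01,
               beta1=0.9, beta2=0.999, eps=1e-8, seed=0):
    """Train only a new softmax layer on frozen features, using Adam."""
    rng = np.random.default_rng(seed)
    feats = frozen_base(x)        # base is frozen, so its features never change
    params = {"W": np.zeros((FEAT_DIM, num_classes)), "b": np.zeros(num_classes)}
    m = {k: np.zeros_like(p) for k, p in params.items()}   # 1st-moment estimates
    v = {k: np.zeros_like(p) for k, p in params.items()}   # 2nd-moment estimates
    t = 0
    for _ in range(epochs):
        for xb, yb in batches(feats, y, batch_size, rng):
            grad_logits = softmax(xb @ params["W"] + params["b"])
            grad_logits[np.arange(len(yb)), yb] -= 1.0     # softmax cross-entropy gradient
            grads = {"W": xb.T @ grad_logits / len(yb), "b": grad_logits.mean(axis=0)}
            t += 1
            for k in params:      # Adam step with bias-corrected moments
                m[k] = beta1 * m[k] + (1 - beta1) * grads[k]
                v[k] = beta2 * v[k] + (1 - beta2) * grads[k] ** 2
                m_hat = m[k] / (1 - beta1 ** t)
                v_hat = v[k] / (1 - beta2 ** t)
                params[k] -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return params

def predict(params, x):
    return np.argmax(frozen_base(x) @ params["W"] + params["b"], axis=1)

# Synthetic data: three hypothetical "denominations" as well-separated clusters.
means = rng.normal(0.0, 3.0, size=(3, IN_DIM))
y = rng.integers(0, 3, size=300)
x = means[y] + rng.normal(size=(300, IN_DIM))
params = train_head(x, y, num_classes=3, epochs=10)
print(f"training accuracy: {(predict(params, x) == y).mean():.2f}")
```

In a Keras setup the same structure would roughly correspond to setting the MobileNet base's `trainable` attribute to `False`, adding new layers on top, compiling with an Adam optimizer and fitting for 10 epochs. Precomputing the features once, as done here, is only valid because the base is frozen.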

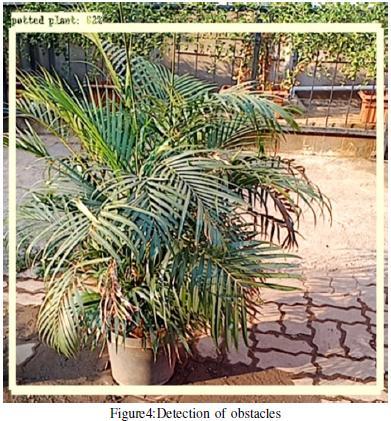

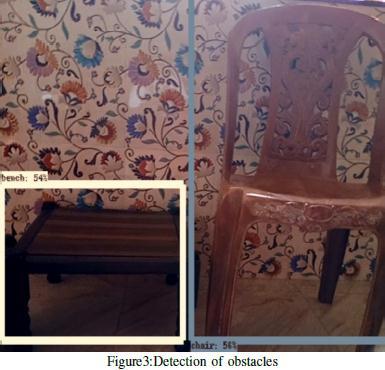

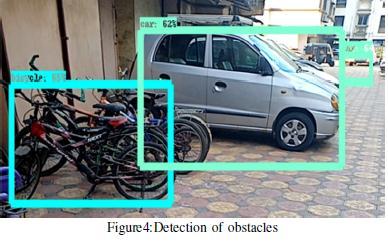

The system creates bounding boxes with the appropriate class labels and confidence scores. The confidence score here is the probability, expressed as a percentage, that a box contains the object it is labelled with. Figures 2 and 3 depict indoor objects and obstacles such as a bottle, a chair and a remote.
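Labels with percentage scores like these come out of the detector's post-processing. SSD-style detectors typically discard low-confidence boxes and apply non-maximum suppression (NMS) so that each object is reported once; models exported from the TensorFlow Object Detection API usually perform this step internally. The plain-Python sketch below illustrates the idea under stated assumptions: the 0.5 thresholds, the dictionary layout and the `label: NN%` strings meant for a text-to-speech engine are hypothetical, not the system's actual code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def postprocess(detections, score_threshold=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes, then apply greedy per-class NMS.

    Boxes are visited from highest to lowest score; a box is kept unless it
    overlaps an already-kept box of the same class by iou_threshold or more.
    """
    candidates = sorted((d for d in detections if d["score"] >= score_threshold),
                        key=lambda d: d["score"], reverse=True)
    kept = []
    for det in candidates:
        if all(k["label"] != det["label"] or iou(k["box"], det["box"]) < iou_threshold
               for k in kept):
            kept.append(det)
    return kept

def describe(detections):
    """Turn detections into short 'label: NN%' phrases, e.g. to pass to text-to-speech."""
    return [f'{d["label"]}: {round(d["score"] * 100)}%' for d in detections]

# Hypothetical raw output for one frame (pixel coordinates).
raw = [
    {"label": "chair", "score": 0.91, "box": (10, 10, 110, 210)},
    {"label": "chair", "score": 0.62, "box": (15, 12, 112, 205)},    # duplicate of the first chair
    {"label": "bottle", "score": 0.78, "box": (300, 50, 340, 150)},
    {"label": "remote", "score": 0.30, "box": (200, 200, 260, 230)},  # below threshold
]
print(describe(postprocess(raw)))   # ['chair: 91%', 'bottle: 78%']
```

Suppression is done per class here, so a chair and a bottle that overlap are both kept; a class-agnostic variant would simply drop the label comparison.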


This application will help visually impaired people, both indoors and outdoors, to protect themselves from harm. It will also eliminate the need to carry any extra equipment (stick, cane, etc.) and minimize the cost of purchase; the only device required is a basic smartphone. This project can be extended to self-driving cars, object tracking, pedestrian detection, anomaly detection, people counting and face recognition.

[1] R. Phadnis, J. Mishra and S. Bendale, "Objects Talk - Object Detection and Pattern Tracking Using TensorFlow," 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, 2018, pp. 1216-1219.

[2] N. A. Semary, S. M. Fadl, M. S. Essa and A. F. Gad, "Currency recognition system for visually impaired: Egyptian banknote as a study case," 2015 5th International Conference on Information Communication Technology and Accessibility (ICTA), Marrakech, 2015, pp. 1-6.

[3] Q. Zhang and W. Q. Yan, "Currency Detection and Recognition Based on Deep Learning," 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 2018, pp. 1-6.

[4] Z.-Q. Zhao, P. Zheng, S.-T. Xu and X. Wu, "Object Detection with Deep Learning: A Review."

[5] A. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto and H. Adam, "MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications."

[6] Y. Sun et al., "Convolutional Neural Network Based Models for Improving Super-Resolution Imaging," IEEE Access, vol. 7, pp. 43042-43051, 2019.

[7] S. Mane and M. Mangale, "Moving object detection and tracking using Convolutional Neural Networks."

[8] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C. Fu and A. C. Berg, "SSD: Single shot multibox detector," European Conference on Computer Vision, Springer, 2016, pp. 21-37.

[9] A. Nishajith, J. Nivedha, S. S. Nair and J. Mohammed Shaffi, "Smart Cap - Wearable Visual Guidance System for Blind," 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, 2018, pp. 275-278.

[10] https://towardsdatascience.com/transfer-learningusing-mobilenet-andkeras-c75daf7ff299