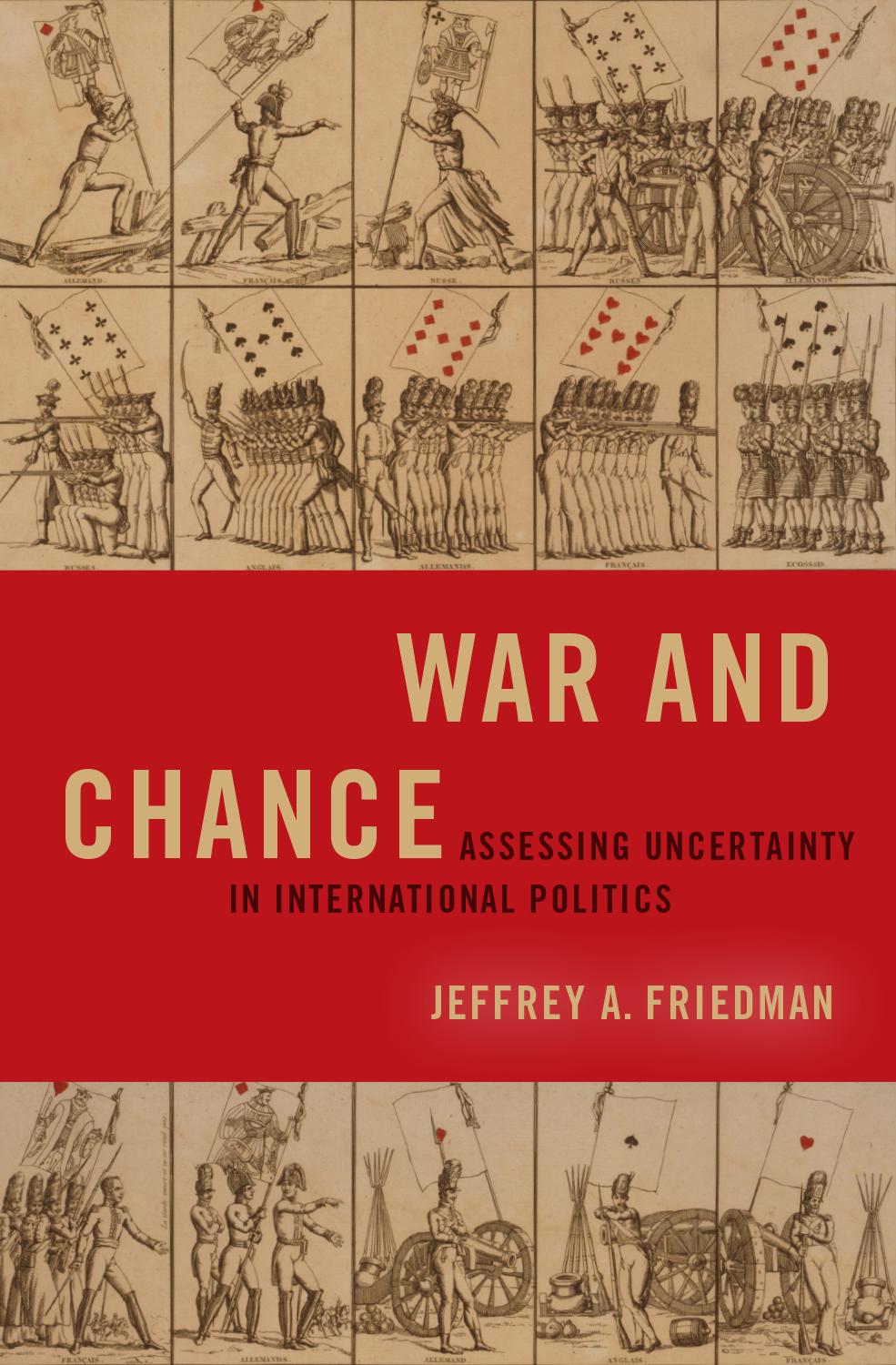

CONTENTS

Acknowledgments

Introduction: “One of the Things You Learn as President Is That You’re Always Dealing with Probabilities”

1. Pathologies of Probability Assessment

2. Subjective Probability and International Politics

3. The Value of Precision in Probability Assessment

4. Dispelling Illusions of Rigor

5. The Politics of Uncertainty and Blame

6. Analysis and Decision

7. Placing Probability Front and Center in Foreign Policy Discourse

Appendix: Supporting Information for Chapters 3–5

Index

ACKNOWLEDGMENTS

I am delighted to have so many people to thank for their input, guidance, and support.

Stephen Walt, Monica Toft, and Robert Bates were my dissertation advisers at Harvard. In addition to providing careful feedback on my research over many years, each of them has served as a role model for producing scholarship that is interesting, rigorous, and policy-relevant. I am also especially grateful for the mentorship of Richard Zeckhauser, who inspired my interest in decision analysis, and Stephen Biddle, who played a crucial role in launching my academic career.

Dartmouth has provided an ideal environment in which to study international politics. It has been wonderful to be surrounded by so many outstanding faculty who work in this area, including Dan Benjamin, Steve Brooks, Udi Greenberg, Brian Greenhill, Jenny Lind, Mike Mastanduno, Ed Miller, Jennie Miller, Nick Miller, Katy Powers, Daryl Press, Ben Valentino, and Bill Wohlforth. I was lucky to arrive at Dartmouth along with a cohort of brilliant, supportive junior scholars, especially David Cottrell, Derek Epp, Jeremy Ferwerda, Herschel Nachlis, Paul Novosad, Julie Rose, Na’ama Shenhav, Sean Westwood, and Thomas Youle. Dartmouth’s undergraduates have also served as a valuable sounding board for many of the ideas presented in this book. It has been a pleasure to work with them and to learn from their insights.

While conducting this research, I held fellowships at the Weatherhead Center for International Affairs, the Belfer Center for Science and International Affairs, the Tobin Project, the Dickey Center for International Understanding, and the Institute for Advanced Study in Toulouse, France. Each institution provided a stimulating environment for research and writing, particularly given the interdisciplinary nature of my work. The U.S. Department of Homeland Security funded portions of this research via the University of Southern California’s Center for Risk and Economic Analysis of Terrorism Events. That funding was especially important in ensuring the ethical compensation of survey respondents.

I presented previous versions of the book’s content to seminars at Brown University, George Washington University, Hamilton College, Harvard University, Mercyhurst University, Middlebury College, MIT, the University of Pennsylvania, the University of Pittsburgh, Princeton University, the University of Virginia, and Yale University. Just as importantly, I received invaluable feedback as a result of presenting this material to practitioners at the U.S. National War College, the U.S. National Intelligence Council, the U.S. Department of Homeland Security’s Science and Technology Directorate, the Military Operations Research Society, the Canadian Forces College, and the Norwegian Defense Intelligence School. I owe particular thanks to Colonel Mark Bucknam for the vital role he played in facilitating practitioners’ engagement with my work.

Portions of chapters 3 and 4 present research that I coauthored with Joshua Baker, Jennifer Lerner, Barbara Mellers, Philip Tetlock, and Richard Zeckhauser. I am grateful for their permission to use that analysis in the book, but more importantly for the opportunity to benefit from their collaboration and insight. Oxford University Press and Cambridge University Press have kindly allowed me to reprint that material. David McBride, Emily Mackenzie, and James Goldgeier played the key roles in steering the book through Oxford’s review and editing process. It is a particular privilege to publish this work through Oxford’s Bridging the Gap book series, a collection that reflects the highest standard of policy-relevant scholarship.

Charles Glaser and Robert Jervis chaired a workshop that reviewed an early version of the manuscript and led to several important revisions to the book’s framing and content. Countless other scholars provided thoughtful comments on different portions of this research over the years, including Dan Altman, Ivan Arreguin-Toft, Alan Barnes, Dick Betts, David Budescu, Welton Chang, Walt Cooper, Greg Daddis, Derek Grossman, Michael Herron, Yusaku Horiuchi, Loch Johnson, Josh Kertzer, Joowon Kim, Matt Kocher, Jack Levy, Mark Lowenthal, David Mandel, Stephen Marrin, Jason Matheny, Peter Mattis, James McAllister, Rose McDermott, Victor McFarland, Rich Nielsen, Joe Nye, Brendan Nyhan, Randy Pherson, Paul Pillar, Mike Poznansky, Dan Reiter, Steve Rieber, Steve Rosen, Josh Rovner, Brandon Stewart, Greg Treverton, Tom Wallsten, Kris Wheaton, Justin Wolfers, Keren Yarhi-Milo, Yuri Zhukov, and two anonymous reviewers at Oxford University Press. Charlotte Bacon provided tremendous help with articulating the book’s core arguments. Thanks also to Jeff, Jill, and Monte Odel, Jacquelyn and Pierre Vuarchex, Nelson Kasfir, and Anne Sa’adah for their advice and encouragement over many years.

I owe the greatest debts to my family: my parents, Barbara and Ben; my brother, John; my sister-in-law, Hilary; my uncle, Art; and my partner, Kathryn. Each of you has set a magnificent example for me to follow, both personally and professionally. I love you and will always be grateful for your support.

Introduction

“One of the Things You Learn as President Is That You’re Always Dealing with Probabilities”

Over the past two decades, the most serious problems in U.S. foreign policy have revolved around the challenge of assessing uncertainty. Leaders underestimated the risk of terrorism before 2001, overestimated the chances that Saddam Hussein was pursuing weapons of mass destruction in 2003, and did not fully appreciate the dangers of pursuing regime change in Iraq, Afghanistan, or Libya. Many of this generation’s most consequential events, such as the 2008 financial crisis, the Arab Spring, the rise of ISIS, and Brexit, were outcomes that experts either confidently predicted would not take place or failed to anticipate entirely. Those experiences provide harsh reminders that scholars and practitioners of international politics are far less clairvoyant than we would like them to be.

The central difficulty with assessing uncertainty in international politics is that the most important judgments also tend to be the most subjective. No known methodology can reliably predict the outbreak of wars, forecast economic recessions, project the results of military operations, anticipate terrorist attacks, or estimate the chances of countless other events that shape foreign policy decisions.1 Many scholars and practitioners therefore believe that it is better to keep foreign policy debates focused on the facts, and that it is, at best, a waste of time to debate uncertain judgments that will often prove to be wrong.

1 On how irreducible uncertainty surrounds most major foreign policy decisions, see Robert Jervis, System Effects: Complexity in Political and Social Life (Princeton, N.J.: Princeton University Press, 1997); Richard K. Betts, Enemies of Intelligence: Knowledge and Power in American National Security (New York: Columbia University Press, 2006). On the limitations of even the most state-of-the-art methods for predicting international politics, see Gerald Schneider, Nils Petter Gleditsch, and Sabine Carey, “Forecasting in International Relations,” Conflict Management and Peace Science, Vol. 20, No. 1 (2011), pp. 5–14; Michael D. Ward, “Can We Predict Politics?” Journal of Global Security Studies, Vol. 1, No. 1 (2016), pp. 80–91.

This skepticism raises fundamental questions about the nature and limits of foreign policy analysis. How is it possible to draw coherent conclusions about something as complicated as the probability that a military operation will succeed? If these judgments are subjective, then how can they be useful? To what extent can fallible people make sense of these judgments, particularly given the psychological constraints and political pressures that surround foreign policy decision making? These questions apply to virtually every element of foreign policy discourse, and they are the subject of this book.

The book has two main goals. The first of these goals is to show how foreign policy officials often try to avoid the challenge of probabilistic reasoning. The book’s second goal is to demonstrate that assessments of uncertainty in international politics are more valuable than the conventional wisdom expects. From a theoretical standpoint, we will see that foreign policy analysts can assess uncertainty in clear and structured ways; that foreign policy decision makers can use those judgments to evaluate high-stakes choices; and that, in some cases, it is nearly impossible to make sound foreign policy decisions without assessing subjective probabilities in detail. The book’s empirical chapters then demonstrate that real people are remarkably capable of putting those concepts into practice. We will see that assessments of uncertainty convey meaningful information about international politics; that presenting this information explicitly encourages decision makers to be more cautious when they place lives and resources at risk; and that, even if foreign policy analysts often receive unfair criticism, that does not necessarily distort analysts’ incentives to provide clear and honest judgments.

Altogether, the book thus explains how foreign policy analysts can assess uncertainty in a manner that is theoretically coherent, empirically meaningful, politically defensible, practically useful, and sometimes logically necessary for making sound choices.2 Each of these claims contradicts widespread skepticism about the value of probabilistic reasoning in international politics, and shows that placing greater emphasis on this subject can improve nearly any foreign policy debate. The book substantiates these claims by examining critical episodes in the history of U.S. national security policy and by drawing on a diverse range of quantitative evidence, including a database that contains nearly one million geopolitical forecasts and experimental studies involving hundreds of national security professionals.

The clearest benefit of improving assessments of uncertainty in international politics is that it can help to prevent policymakers from taking risks that they do not fully understand. Prior to authorizing the Bay of Pigs invasion in April 1961, for example, President Kennedy asked his Joint Chiefs of Staff to evaluate the plan’s feasibility. The Joint Chiefs submitted a report that detailed the operation’s strengths and weaknesses, and concluded that “this plan has a fair chance of ultimate success.”3 In the weeks that followed, high-ranking officials repeatedly referenced the Joint Chiefs’ judgment when debating whether or not to set the Bay of Pigs invasion in motion. The problem was that no one had a clear idea of what that judgment actually meant.

2 Here and throughout the book, I use the term foreign policy analysts to describe anyone who seeks to inform foreign policy debates, both in and out of government.

The officer who wrote the Joint Chiefs’ report on the Bay of Pigs invasion later said that the “fair chance” phrase was supposed to be a warning, much like a letter grade of C indicates “fair performance” on a test.4 That is also how Secretary of Defense Robert McNamara recalled interpreting the Joint Chiefs’ views.5 But other leaders read the report differently. When Marine Corps Commandant David Shoup was later asked to say how he had interpreted the “fair chance” phrase, he replied that “the plan they had should have accomplished the mission.”6 Proponents of the invasion repeatedly cited the “fair chance” assessment in briefing materials.7 President Kennedy came to believe that the Joint Chiefs had endorsed the plan. After the invasion collapsed, he wondered why no one had warned him that the mission might fail.8

3 “Memorandum from the Joint Chiefs of Staff to Secretary McNamara,” Foreign Relations of the United States [FRUS] 1961-1963, Vol. X, Doc 35 (3 February 1961). Chapter 5 describes this document in greater detail.

4 When the historian Peter Wyden interviewed the officer fifteen years later, he found that “[then-Brigadier General David] Gray was still severely troubled about his failure to have insisted that figures [i.e., numeric percentages] be used. He felt that one of the key misunderstandings in the entire project was the misinterpretation of the word ‘fair.’” Gray told Wyden that the Joint Chiefs believed that the odds of the invasion succeeding were roughly three in ten. Peter Wyden, Bay of Pigs: The Untold Story (New York: Simon and Schuster, 1979), pp. 88–90.

5 In an after-action review conducted shortly after the Bay of Pigs invasion collapsed, McNamara said that he knew the Joint Chiefs thought the plan was unlikely to work, but that he had still believed it was the best opportunity the United States would get to overthrow the Castro regime. “Memorandum for the Record,” FRUS 1961-1963, Vol. X, Doc 199 (3 May 1961).

6 “Memorandum for the Record,” FRUS 1961-1963, Vol. X, Doc 209 (8 May 1961).

7 James Rasenberger, The Brilliant Disaster: JFK, Castro, and America’s Doomed Invasion of the Bay of Pigs (New York: Scribner, 2011), p. 119, explains that “the entire report, on balance, came off as an endorsement of the CIA’s plan.” On the CIA’s subsequent use of the “fair chance” statement to support the invasion, see “Paper Prepared in the Central Intelligence Agency,” FRUS 1961–1963, Vol. X, Doc 46 (17 February 1961). For post-mortem discussions of the invasion decision, see FRUS 1961–1963, Vol. X, Docs 199, 209, 210, and 221.

8 President Kennedy later complained to an aide that the Joint Chiefs “had just sat there nodding, saying it would work.” In a subsequent interview, Kennedy recalled that “five minutes after it began to fall in, we all looked at each other and asked, ‘How could we have been so stupid?’ When we saw the wide range of the failures we asked ourselves why it had not been apparent to somebody from the start.” Richard Reeves, President Kennedy: Profile of Power (New York: Simon and Schuster, 1993), p. 103; Hugh Sidey, “The Lesson John Kennedy Learned from the Bay of Pigs,” Time, Vol. 157, No. 15 (2001).

Regardless of the insight that the Joint Chiefs provided about the strengths and weaknesses of the Bay of Pigs invasion, their advice thus amounted to a Rorschach test: it allowed policymakers to adopt nearly any position they wanted, and to believe that their view had been endorsed by the military’s top brass. This is just one of many examples we will see throughout the book of how failing to assess uncertainty in clear and structured ways can undermine foreign policy decision making. Yet, as the next example shows, the challenge of assessing uncertainty in international politics runs deeper than semantics, and it cannot be solved through clear language alone.

In April 2011, almost exactly fifty years after the Bay of Pigs invasion, President Barack Obama convened his senior national security team to discuss reports that Osama bin Laden might be living in Abbottabad, Pakistan. Intelligence analysts had studied a suspicious compound in Abbottabad for months. They possessed clear evidence connecting this site to al Qaeda, and they knew that the compound housed a tall, reclusive man who never left the premises. Yet it was impossible to be certain about who that person was. If President Obama was going to act on this information, he would have to base his decision on probabilistic reasoning. To make that reasoning as rigorous as possible, President Obama asked his advisers to estimate the chances that bin Laden was living in the Abbottabad compound.9

Answers to the president’s question ranged widely. The leader of the bin Laden unit at the Central Intelligence Agency (CIA) said there was a ninety-five percent chance that they had found their man. CIA Deputy Director Michael Morell thought that those chances were more like sixty percent. Red Teams assigned to make skeptical arguments offered figures as low as thirty or forty percent. Other views reportedly clustered around seventy or eighty percent. While accounts of this meeting vary, all of them stress that participants did not know how to resolve their disagreement and that they did not find the discussion to be helpful.10 President Obama reportedly said at the time that the debate had provided “not more certainty but more confusion.” In a subsequent interview, he told a reporter that his advisers’ probability estimates had “disguised uncertainty as opposed to actually providing you with more useful information.”11

9 The following account is based on Michael Morell, The Great War of Our Time: The CIA’s Fight against Terrorism from Al Qa’ida to ISIS (New York: Twelve, 2014), ch. 7; along with David Sanger, Confront and Conceal: Obama’s Secret Wars and Surprising Use of American Power (New York: Crown, 2012); Peter Bergen, Manhunt: The Ten-Year Search for Bin Laden from 9/11 to Abbottabad (New York: Crown, 2012); and Mark Bowden, The Finish: The Killing of Osama bin Laden (New York: Atlantic, 2012).

10 Reflecting later on this debate, James Clapper, who was then the director of national intelligence, said, “We put a lot of discussion [into] percentages of confidence, which to me is not particularly meaningful. In the end it’s all subjective judgment anyway.” CNN, “The Axe Files,” Podcast Ep. 247 (31 May 2018).

Of course, President Obama’s decision to raid the Abbottabad compound ended more successfully than President Kennedy’s decision to invade the Bay of Pigs. Yet the confusion that President Obama and his advisers encountered when pursuing bin Laden was, in many ways, more troubling. The problem with the Joint Chiefs’ assessment of the Bay of Pigs invasion was a simple matter of semantics. By contrast, the Obama administration’s efforts to estimate the chances that bin Laden was living in Abbottabad revealed a deeper conceptual confusion: even when foreign policy officials attempted to debate the uncertainty that surrounded one of their seminal decisions, they still struggled to understand what those judgments meant and how they could be useful.

War and Chance seeks to dispel that confusion. The book describes the theoretical basis for assessing uncertainty in international politics, explains how those judgments provide crucial insight for evaluating foreign policy decisions, and shows that the conventional wisdom underestimates the extent to which these insights can aid foreign policy discourse. These arguments apply to virtually any kind of foreign policy analysis, from debates in the White House Situation Room to op-eds in the New York Times. As the following chapters explain, it is impossible to evaluate foreign policy choices without assessing uncertainty in some way, shape, or form.

To be clear, nothing in the book implies that assessing uncertainty in international politics should be easy or uncontroversial. The book’s main goal is, instead, to show that scholars and practitioners handle this challenge best when they confront it head-on, and to explain that there is no reason why these debates should seem to be intractable. Even small advances in understanding this subject matter could provide substantial benefit—for, as President Obama reflected when the bin Laden raid was over, “One of the things you learn as president is that you’re always dealing with probabilities.”12

Subjective Probability and Its Skeptics

The military theorist Carl von Clausewitz wrote in his famous book, On War, that “war is a matter of assessing probabilities” and that “no other human activity is so continuously or universally bound up with chance.”13 Clausewitz believed that assessing this uncertainty required considerable intellect, as “many of the decisions faced by the commander-in-chief resemble mathematical problems worthy of the gifts of a Newton or an Euler.” Yet Clausewitz argued elsewhere in On War that “logical reasoning often plays no part at all” in military decision making and that “absolute, so-called mathematical, factors never find a firm basis in military calculations.”14

11 Bowden, The Finish, pp. 160–161; Bergen, Manhunt, p. 198; Sanger, Confront and Conceal, p. 93.

12 Bowden, The Finish, p. 161.

13 Carl von Clausewitz, On War, tr. Michael Howard and Peter Paret (Princeton, N.J.: Princeton University Press, 1984), pp. 85–86. The U.S. Marine Corps’ capstone doctrine reflects this sentiment, stating on its opening page that “War is intrinsically unpredictable. At best, we can hope to determine possibilities and probabilities.” U.S. Marine Corps Doctrinal Publication 1, Warfighting, p. 7.

14 Clausewitz, On War, pp. 80, 86, 112, 184.

Though Clausewitz is famous for offering inscrutable insights about many aspects of military strategy, his views of assessing uncertainty are not contradictory, and they help to frame the analysis presented in this book. From a logical standpoint, it is impossible to support any foreign policy decision without believing that its chances of success are large enough to make expected benefits exceed expected costs. And that logic has rules. Probability and expected value are quantifiable concepts that obey mathematical axioms. Yet these axioms also have important limits. Rational choice theory can instruct decision makers about how to behave in a manner that is consistent with their personal beliefs, but it cannot tell decision makers how to form those beliefs in the first place, particularly not when they are dealing with subject matter that involves as much complexity, secrecy, and deception as world politics.15 The foundation for any foreign policy decision thus rests on individual, subjective judgment.

Many scholars and practitioners see little value in debating these subjective judgments, and the book describes a range of arguments to that effect. Broadly speaking, we can divide those arguments into three camps. The book will refer to these camps as the agnostics, the rejectionists, and the cynics.

The agnostics argue that assessments of uncertainty in international politics are too unreliable to be useful.16 Taken to its logical extreme, this argument suggests that foreign policy analysts can never make rigorous judgments about a policy’s likely outcomes. As stated by the former U.S. secretary of defense, James Mattis, “It is not scientifically possible to accurately predict the outcome of [a military] action. To suggest otherwise runs contrary to historical experience and the nature of war.”17

15 On how rational choice logic is contingent on personal beliefs, see Ken Binmore, Rational Decisions (Princeton, N.J.: Princeton University Press, 2009). Chapter 2 discusses this point in more detail. There is, for example, a large literature that describes how foreign policy analysts can use Bayesian mathematics to assess uncertainty, but Bayesian reasoning depends on subjective probability estimates.

16 This view is premised on the notion that international politics and armed conflicts involve indefinable levels of complexity. On complexity and international politics, see Richard K. Betts, “Is Strategy an Illusion?” International Security, Vol. 25, No. 2 (2000), pp. 5–50; Jervis, System Effects; Thomas J. Czerwinski, Coping with the Bounds: Speculations on Nonlinearity in Military Affairs (Washington, D.C.: National Defense University Press, 1998); and Ben Connable, Embracing the Fog of War (Santa Monica, Calif.: Rand, 2012).

The agnostic viewpoint carries sobering implications for foreign policy discourse. If it is impossible to predict the results of foreign policy decisions, then it is also impossible to say that one choice has a higher chance of succeeding than another. This stance would render most policy debates meaningless and it would undermine a vast range of international relations scholarship. If there is no rigorous way to evaluate foreign policy decisions on their merits, then there can also be no way to define rational behavior, because all choices could plausibly be characterized as leaders pursuing what they perceive to be sufficiently large chances of achieving sufficiently important objectives. Since this would make it impossible to prove that any decision did not promote the national interest, there would also be no purpose in arguing that any high-stakes decisions were driven by nonrational impulses.18
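To make the phrase “sufficiently large chances of achieving sufficiently important objectives” concrete, the following is a minimal sketch of the expected-value threshold it implies. The symbols $p$, $B$, and $L$ are illustrative assumptions introduced here, not notation from the text, and the numbers in the final comment are hypothetical.

```latex
% Assumed symbols (not from the text):
%   p : subjective probability that the policy succeeds, 0 <= p <= 1
%   B : benefit if the policy succeeds, B > 0
%   L : loss if the policy fails, L > 0
\[
  \mathbb{E}[\text{net value}] \;=\; pB - (1-p)L \;>\; 0
  \quad\Longleftrightarrow\quad
  p \;>\; p^{*} \;=\; \frac{L}{B+L}
\]
% Hypothetical illustration: B = 10 and L = 5 give p^{*} = 5/15 = 1/3.
```

Under this sketch, any choice can be defended after the fact by positing a large enough $B$ or a large enough $p$. If neither quantity can be assessed rigorously, there is no way to show that a given decision fell short of the threshold.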

A weaker and more plausible version of the agnostics’ thesis accepts that assessments of subjective probability provide some value, but only at broad levels of generality. As Aristotle put it, “The educated person seeks exactness in each area to the extent that the nature of the subject allows.”19 And perhaps that threshold of “allowable exactness” is extremely low when it comes to assessing uncertainty in international politics. Avoiding these judgments or leaving them vague could thus be seen as displaying appropriate humility rather than avoiding controversial issues.20 Chapters 2 and 3 explore the theoretical and empirical foundations of this argument in detail.

17 James N. Mattis, “USJFCOM Commander’s Guidance for Effects-based Operations,” Parameters, Vol. 38, No. 3 (2008), pp. 18–25. For similar questions about whether assessments of uncertainty provide a sound basis for decision making in international politics, see Alexander Wendt, “Driving with the Rearview Mirror: On the Rational Science of Institutional Design,” International Organization, Vol. 55, No. 4 (2001), pp. 1019–1049; Jonathan Kirshner, “Rationalist Explanations for War?” Security Studies, Vol. 10, No. 1 (2000), pp. 143–150; Alan Beyerchen, “Clausewitz, Nonlinearity, and the Unpredictability of War,” International Security, Vol. 17, No. 3 (1992/93), pp. 59–90; and Barry D. Watts, Clausewitzian Friction and Future War, revised edition (Washington, D.C.: National Defense University, 2004).

18 On how any international relations theory relies on a coherent standard of rational decision making, see Charles L. Glaser, Rational Theory of International Politics (Princeton, N.J.: Princeton University Press, 2010), pp. 2–3. On how assessments of uncertainty play a crucial role in nearly all international relations paradigms, see Brian C. Rathbun, “Uncertain about Uncertainty: Understanding the Multiple Meanings of a Crucial Concept in International Relations Theory,” International Studies Quarterly, Vol. 51, No. 3 (2007), pp. 533–557.

19 Aristotle, Nicomachean Ethics, tr. Terence Irwin (Indianapolis, Ind.: Hackett, 1985), p. 1094b.

20 For arguments exhorting foreign policy analysts to adopt such humility, see Stanley Hoffmann, Gulliver’s Troubles: Or, the Setting of American Foreign Policy (New York: McGraw-Hill, 1968), pp. 87–175; David Halberstam, The Best and the Brightest (New York: Random House, 1972).

The rejectionist viewpoint claims that assessing uncertainty in international politics is not just misguided, but also counterproductive. This argument is rooted in the fact that foreign policy is not made by rational automata, but rather by human beings who are susceptible to political pressures and cognitive biases.21 Chapter 4, for example, describes how scholars and practitioners often worry that probability assessments surround arbitrary opinions with illusions of rigor.22 Chapter 5 then examines common claims about how transparent probabilistic reasoning exposes foreign policy analysts to unjustified criticism, thereby undermining their credibility and creating incentives to warp key judgments.23

The rejectionists’ thesis is important because it implies that there is a major gap between what rigorous decision making entails in principle and what fallible individuals can achieve in practice when they confront high-stakes issues. That claim alone is unremarkable in light of the growing volume of scholarship that documents how heuristics and biases can undermine foreign policy decisions.24 Yet, in most cases, scholars believe that the best way to mitigate these cognitive flaws is to conduct clear, well-structured analysis.25 By contrast, the rejectionists suggest that attempts to clarify and structure probabilistic reasoning can backfire, exchanging one set of biases for another in a manner that would only make decisions worse. This argument raises fundamental questions about the extent to which traditional conceptions of analytic rigor provide viable foundations for foreign policy discourse.26

21 Robert Jervis, Perception and Misperception in International Politics (Princeton, N.J.: Princeton University Press, 1976); Rose McDermott, Risk-Taking in International Politics (Ann Arbor, Mich.: University of Michigan Press, 1998); Philip E. Tetlock, Expert Political Judgment: How Good Is It? How Can We Know? (Princeton, N.J.: Princeton University Press, 2005).

22 Mark M. Lowenthal, Intelligence: From Secrets to Policy, 3rd ed. (Washington, D.C.: CQ Press, 2006), p. 129; Yaakov Y. I. Vertzberger, Risk Taking and Decisionmaking: Foreign Military Intervention Decisions (Stanford, Calif.: Stanford University Press, 1998), pp. 27–28.

23 Chapter 5 explains that this impulse is not purely self-serving. If national security analysts lose the trust of their colleagues or the general public as a result of unjustified criticism, then this can undermine their effectiveness regardless of whether that loss of standing is deserved. If analysts seek to avoid probability assessment in order to escape justified criticism, then this would reflect the cynical viewpoint.

24 Jack S. Levy, “Psychology and Foreign Policy Decision-Making,” in Leonie Huddy, David O. Sears, and Jack S. Levy, eds., The Oxford Handbook of Political Psychology, 2nd ed. (New York: Oxford University Press, 2013); Emilie M. Hafner-Burton et al., “The Behavioral Revolution and the Study of International Relations,” International Organization, Vol. 71, No. S1 (April 2017), pp. S1–S31; Joshua D. Kertzer and Dustin Tingley, “Political Psychology in International Relations,” Annual Review of Political Science, Vol. 21 (2018), pp. 319–339.

25 Daniel Kahneman famously captured this insight with the distinction between “thinking fast” and “thinking slow,” where the latter is less prone to heuristics and biases. Daniel Kahneman, Thinking, Fast and Slow (New York: Farrar, Straus and Giroux, 2011). In national security specifically, see Richards Heuer, Jr., Psychology of Intelligence Analysis (Washington, D.C.: Center for the Study of Intelligence, 1999).

The cynics claim that foreign policy analysts and decision makers have self-interested motives to avoid assessing uncertainty. Political leaders may thus deliberately conceal doubts about their policy proposals in order to make tough choices seem “clearer than truth.”27 Marginalizing assessments of uncertainty may also allow foreign policy analysts to escape reasonable accountability for mistaken judgments.28

Having spoken with hundreds of practitioners while conducting my research, I do not believe that this cynical behavior is widespread. My impression is that this behavior is primarily concentrated at the highest levels of government and punditry, whereas most foreign policy analysts and decision makers are committed to doing their jobs as rigorously as possible.29 Yet the prospect of cynical behavior, whatever its prevalence, only makes it more important to scrutinize other objections to probabilistic reasoning. As chapter 7 explains, the best way to prevent leaders from marginalizing or manipulating assessments of uncertainty is to establish a norm that favors placing those judgments front and center in high-stakes policy debates. It is impossible to establish this kind of norm—or to say whether such a norm would even make sense—without dispelling other sources of skepticism about the value of assessing uncertainty in international politics.

Though I will argue that the skepticism described in this section is overblown, it is easy to understand how those views have emerged. As noted at the beginning of the chapter, the history of international politics is full of cases in

26 Wendt, “Driving with the Rearview Mirror”; Stanley A. Renshon and Deborah Welch Larson, eds., Good Judgment in Foreign Policy (Lanham, Md.: Rowman and Littlefield, 2003); and Peter Katzenstein and Lucia Seybert, “Protean Power and Uncertainty: Exploring the Unexpected in World Politics,” International Studies Quarterly, Vol. 62, No. 1 (2018), pp. 80–93.

27 Dean Acheson, Present at the Creation: My Years at the State Department (New York: Norton, 1969), p. 375; John M. Schuessler, Deceit on the Road to War (Ithaca, N.Y.: Cornell University Press, 2015); Uri Bar-Joseph and Rose McDermott, Intelligence Success and Failure: The Human Factor (New York: Oxford University Press, 2017). In other cases, decision makers may prefer to leave assessments of uncertainty vague, so as to maintain freedom of action. Joshua Rovner, Fixing the Facts: National Security and the Politics of Intelligence (Ithaca, N.Y.: Cornell University Press, 2011); Robert Jervis, “Why Intelligence and Policymakers Clash,” Political Science Quarterly, Vol. 125, No. 2 (Summer 2010), pp. 185–204.

28 H. R. McMaster, Dereliction of Duty (New York: HarperCollins, 1997); Philip E. Tetlock, “Reading Tarot on K Street,” The National Interest, No. 103 (2009), pp. 57–67; Christopher Hood, The Blame Game: Spin, Bureaucracy, and Self-Preservation in Government (Princeton, N.J.: Princeton University Press, 2011). As mentioned in note 23, the desire to avoid unjustified blame falls within the rejectionists’ viewpoint.

29 This is consistent with the (much more informed) views of Robert Jervis, “Politics and Political Science,” Annual Review of Political Science, Vol. 21 (2018), p. 17.

which scholars and practitioners misperceived or failed to recognize important elements of uncertainty.30 Yet there is an important difference between asking how good we are at assessing uncertainty on the whole and determining how to assess uncertainty as effectively as possible. Indeed, the worse our performance in this area becomes, the more priority we should place on preserving and exploiting whatever insight we actually possess. The book documents how a series of harmful practices interfere with that goal; it demonstrates that these practices reflect misplaced skepticism about the logic, psychology, and politics of probabilistic reasoning; and it shows how it is possible to improve the quality of these judgments in nearly any area of foreign policy discourse.

Chapter Outline

The book contains seven chapters. Chapter 1 describes how foreign policy analysts often avoid assessing uncertainty in a manner that supports sound decision making. This concern dates back to a famous 1964 essay by Sherman Kent, which remains one of the seminal works in intelligence studies.31 But chapter 1 explains that aversion to probabilistic reasoning is not just a problem for intelligence analysts and that the issue runs much deeper than semantics. We will see how scholars, practitioners, and pundits often debate international politics without assessing the most important probabilities at all, particularly by analyzing which policies offer the best prospects of success or by debating whether actions are necessary to achieve their objectives, without carefully assessing the chances that high-stakes decisions will actually work. Chapter 1 shows how this behavior is ingrained throughout a broad range of foreign policy discourse, and it describes how these problematic practices shaped the highest levels of U.S. decision making during the Vietnam War.

Chapter 2 explores the theoretical foundations of probabilistic reasoning in international politics. It explains that, even though the most important assessments of uncertainty in international politics are inherently subjective, foreign policy analysts always possess a coherent conceptual basis for debating these judgments in clear and structured ways. Chapter 3 then examines the

30 See, for example, Jack Snyder, Myths of Empire: Domestic Politics and International Ambition (Ithaca, N.Y.: Cornell University Press, 1991); John Lewis Gaddis, We Now Know: Rethinking Cold War History (New York: Oxford University Press, 1997); Dominic D. P. Johnson, Overconfidence and War (Cambridge, Mass.: Harvard University Press, 2004); and John Mueller, Overblown: How Politicians and the Terrorism Industry Inflate National Security Threats, and Why We Believe Them (New York: Free Press, 2006).

31 Sherman Kent, “Words of Estimative Probability,” Studies in Intelligence, Vol. 8, No. 4 (1964), pp. 49–65.

empirical value of assessing uncertainty in international politics. By analyzing a database containing nearly one million geopolitical forecasts, it shows that foreign policy analysts can reliably estimate subjective probabilities with numeric precision. Together, chapters 2 and 3 refute the idea that there is some threshold of “allowable exactness” that constrains assessments of uncertainty in international politics. Avoiding these judgments or leaving them vague should not be seen as displaying appropriate analytic humility, but rather as a practice that sells analysts’ capabilities short and diminishes the quality of foreign policy discourse.

Chapter 4 examines the psychology of assessing uncertainty in international politics, focusing on the concern that clear probabilistic reasoning could confuse decision makers or create harmful “illusions of rigor.” By presenting a series of survey experiments that involved more than six hundred national security professionals, the chapter shows that foreign policy decision makers’ choices are sensitive to subtle variations in probability assessments, and that making these assessments more explicit encourages decision makers to be more cautious when they are placing lives and resources at risk.32 Chapter 5 then explores the argument that assessing uncertainty in clear and structured ways would expose foreign policy analysts to excessive criticism. By combining experimental evidence with a historical review of perceived intelligence failures, chapter 5 suggests that the conventional wisdom about the “politics of uncertainty and blame” may actually have the matter exactly backward: by leaving their assessments of uncertainty vague, foreign policy analysts end up providing their critics with an opportunity to make key judgments seem worse than they really were.

Chapter 6 takes a closer look at how foreign policy decision makers can use assessments of uncertainty to evaluate high-stakes choices. It explains why transparent probabilistic reasoning is especially important when leaders are struggling to assess strategic progress. In some cases, it can actually be impossible to make rigorous judgments about the extent to which foreign policies are making acceptable progress without assessing subjective probabilities in detail. Chapter 7 concludes by exploring the book’s practical implications for improving foreign policy debates. It focuses on the importance of creating norms that place assessments of uncertainty front and center in foreign policy analysis, and explains how the practice of multiple advocacy can help to generate those norms.

32 One irony of these findings is that the national security officials who participated in the experiments often insisted that fine-grained probabilistic distinctions would not shape their decisions, even as the experimental data unambiguously demonstrated that this information influenced their views. The notion that decision makers may not always be aware of how they arrive at their own beliefs is one of the central motivations for conducting experimental research in political psychology. Yet, unlike areas of political psychology that show how decision makers’ views are susceptible to unconscious biases, the book’s empirical analysis suggests that national security officials are more sophisticated than they give themselves credit for in handling subjective probabilities.

This argument further highlights that the goal of improving assessments of uncertainty in international politics is not just an issue for government officials, but that it is also a matter of how scholars, journalists, and pundits can raise the standards of foreign policy discourse.

Methods and Approach

If presidents are always dealing with probabilities, then how can there be so much confusion about handling that subject? And if this topic is so important, then why have other scholars not written a book like this one already?

One answer to these questions is that the study of probabilistic reasoning requires combining disciplinary approaches that scholars tend to pursue separately. Understanding what subjective probability assessments mean and how they shape foreign policy decisions (chapters 2 and 6) requires adapting general principles from decision theory to specific problems of international politics. Understanding the extent to which real people can employ these concepts (chapters 3 and 4) requires studying the psychological dimensions of foreign policy analysis and decision making. Understanding how the prospect of criticism shapes foreign policy analysts’ incentives (chapter 5) requires merging insights from intelligence studies and organizational management.

In this sense, no one academic discipline is well-suited to addressing the full range of claims that skeptics direct toward assessing uncertainty in foreign policy discourse. And though the book’s interdisciplinary approach involves an inevitable trade-off of depth for breadth, it is crucial to examine these topics together and not in isolation. As the rejectionists point out, well-intentioned efforts to mitigate one set of flaws with probabilistic reasoning could plausibly backfire by amplifying others. Addressing these concerns requires taking a comprehensive view of the logic, psychology, and politics of assessing uncertainty in international affairs. To my knowledge, War and Chance is the first book to do so.

A second reason why scholars and practitioners lack consensus about the value of probabilistic reasoning in international politics is that this subject is notoriously difficult to study empirically. Uncertainty is an abstract concept that no one can directly observe. Since analysts and decision makers tend to be vague when they assess uncertainty, it is usually hard to say what their judgments actually mean. And even when analysts make their judgments explicit, it can still be difficult to evaluate them. For instance, if you say that an event has a thirty percent chance of taking place and then it happens, how can we tell whether you were wrong or just unlucky? Chapters 3 through 5 will show that navigating these issues requires gathering large volumes of well-structured data. Most areas

of foreign policy do not lend themselves to this kind of data collection. Scholars have therefore tended to treat the assessment of uncertainty as a topic better suited to philosophical debate than to empirical analysis.33
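To see why a single forecast cannot settle the "wrong or just unlucky" question while a large set of forecasts can, consider the following minimal sketch. It is an illustration under stated assumptions, not the method used in chapters 3 through 5: the data are invented, the function names are hypothetical, and the Brier score (the mean squared difference between stated probabilities and outcomes) is used here only as one common scoring rule.

```python
from collections import defaultdict


def brier_score(forecasts):
    """Mean squared difference between stated probabilities and 0/1 outcomes."""
    return sum((p - outcome) ** 2 for p, outcome in forecasts) / len(forecasts)


def calibration_table(forecasts):
    """Map each stated probability to the share of those events that occurred."""
    buckets = defaultdict(list)
    for p, outcome in forecasts:
        buckets[p].append(outcome)
    return {p: sum(o) / len(o) for p, o in sorted(buckets.items())}


# Hypothetical data: each pair is (stated probability, 1 if the event occurred).
# One forecast of thirty percent, and the event happened.
single = [(0.3, 1)]

# Ten forecasts of thirty percent, of which three events happened.
many = [(0.3, 1)] * 3 + [(0.3, 0)] * 7

single_score = brier_score(single)  # looks poor, but one case proves little
many_score = brier_score(many)      # lower (better) across the full set
observed = calibration_table(many)  # observed frequency for each stated value
```

In this invented example the lone forecast scores badly because the event occurred, yet across ten such forecasts the events occur at the stated thirty percent rate. The single case says almost nothing about the forecaster's skill; the pattern only becomes visible once many comparable judgments are recorded in explicit form.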

In recent years, however, social scientists have developed new methods to study probabilistic reasoning, and governmental organizations have become increasingly receptive to supporting empirical research on the subject. Chapter 3’s analysis of the value of precision in probability assessment would not have been possible without the U.S. Intelligence Community’s decision to sponsor the collection of nearly one million geopolitical forecasts.34 Similarly, chapter 4’s analysis of how decision makers respond to assessments of uncertainty depended on the support of the National War College and the willingness of more than six hundred national security professionals to participate in experimental research. Thus, even if none of the following chapters represents the final word on its subject, one of the book’s main contributions is simply to demonstrate that it is possible to conduct rigorous empirical analysis of issues that many scholars and practitioners have previously considered intractable.

This book also differs from previous scholarship in how it treats the relationship between explanation and prescription. Academic studies of international politics typically prioritize the explanatory function of social science, in which scholars focus on building a descriptive model of the world that helps readers to understand why states and leaders act in puzzling ways. Most scholars therefore orient their analyses around theoretical and empirical questions that are important for explaining observed behavior. Although such studies can generate policy-relevant insights, those insights are often secondary to scholars’ descriptive aims. Indeed, one of the most salient insights that these studies produce is that there is relatively little we can do to improve problematic behavior, either because foreign policy officials have strong incentives to act in a harmful fashion or because their choices are shaped by structural forces outside of their control.35

This book, by contrast, prioritizes the prescriptive value of social science. It aims to understand what sound decision making entails in principle and how close we can get to that standard in practice. To serve these objectives, the following chapters focus on theoretical and empirical questions that are important for understanding how to improve foreign policy analysis and decision making,

33 Mandeep K. Dhami, “Towards an Evidence-Based Approach to Communicating Uncertainty in Intelligence Analysis,” Intelligence and National Security, Vol. 33, No. 2 (2018), pp. 257–272.

34 Philip E. Tetlock and Daniel Gardner, Superforecasting: The Art and Science of Prediction (New York: Crown, 2015).

35 For a critique of how international relations scholars often privilege descriptive aims over prescriptive insights, see Alexander George, Bridging the Gap: Theory and Practice in Foreign Policy (Washington, D.C.: U.S. Institute of Peace, 1993); and Bruce W. Jentleson, “The Need for Praxis: Bringing Policy Relevance Back In,” International Security, Vol. 26, No. 4 (2002), pp. 169–183.