PREFACE

A theory is not to be considered complete until you have made it so clear that you can explain it to the first man whom you meet on the street.

Joseph-Diez Gergonne1

The most complicated skill is to be simple.

Dejan Stojanovic2

Success is the ability to go from one failure to another with no loss of enthusiasm.

Winston Churchill3

As far as I remember, there was only one rule. “Be home before it gets dark.” The definition of darkness was, of course, negotiable. My childhood included many animals: turtles, a family of hedgehogs, fish in a toilet tank, pigeons, and barn owls, in addition to our family’s cats and chickens. Our pig, Rüszü, and I were good friends. He was always eager to get out from his sty and follow me to our favorite destination, a small, shallow bay of Lake Balaton, in Hungary. A short walk across the street from our house, Lake Balaton was the source of many happy moments of my early life. It provided everything I needed: swimming during the summer, skating in the winter, and fishing most of the year around. I lived in complete freedom, growing up in the streets with other kids from the neighborhood. We made up rules, invented games, and built fortresses from rocks and abandoned building material to defend our territory against imagined invaders. We wandered around the reeds, losing ourselves only to find our way out, developing a sense of direction and self-reliance. I grew up in a child’s paradise, even if those years were the worst times of the communist dictatorship for my parents’ generation.

1. As quoted in Barrow-Green and Siegmund-Schultze (2016).

2. https://www.poemhunter.com/poem/simplicity-30/

3. https://philosiblog.com/

Summers were special. My parents rented out our two bedrooms, bathroom, and kitchen to vacationers from Budapest, and we temporarily moved up to the attic. Once my father told me that one of the vacationers was a “scientist-philosopher” who knew everything. I wondered how it would be possible to know everything. I took every opportunity to follow him around to figure out whether I could discover something special about his head or eyes. But he appeared to be a regular guy with a good sense of humor. I asked him what Rüszü thought about me and why he could not talk to me. He gave me a long answer with many words that I did not understand, and, at the end, he victoriously announced, “Now you know.” Yet I did not, and I kept wondering whether my pig friend’s seemingly affectionate love was the same as my feelings for him. Perhaps my scientist knew the answer, but I did not understand the words he used. This was the beginning of my problem with words used in scientific explanations.

My childhood curiosity has never evaporated. I became a scientist as a consequence of striving to understand the true meaning behind explanations. Too often, what my peers understood to be a logical and straightforward answer remained a mystery to me. I had difficulty comprehending gravity in high school. OK, it is an “action at a distance” or a force that attracts a body having mass toward another physical body. But are these statements not just another way of saying the same thing? My physics teacher’s answer about gravity reminded me of the explanation of Rüszü’s abilities given by “my” scientist. My troubles with explanatory terms only deepened during my medical student and postdoctoral years after I realized that my dear mentor, Endre Grastyán, and my postdoctoral advisor, Cornelius (Case) Vanderwolf, shared my frustration. Too often, when we do not understand something, we make up a word or two and pretend that those words solve the mystery.4

Scientists started the twenty-first century with a new goal: understanding ourselves and the complexity of the hardware that supports our individual minds. All of a sudden, neuroscience emerged from long obscurity into everyday language. New programs have sprung up around the world. The BRAIN Initiative in the United States put big money into public–private collaborations aimed at developing powerful new tools to peek into the workings of the brain. In Europe, the Human Brain Project promises to construct a model of the human brain (perhaps an overambitious goal) in the next decade. The main targets of the China Brain Project are the mechanisms of cognition and brain diseases, as well as advancing information technology and artificial intelligence projects.

4. These may be called “filler terms,” which may not explain anything; when used often enough in scientific writing, the innocent reader may believe that they actually refer to a mechanism (e.g., Krakauer et al., 2017).

Strikingly, none of these programs makes a priority of understanding the general principles of brain function. That decision may be tactically wise because discoveries of novel principles of the brain require decades of maturation and distillation of ideas. Unlike physics, which has great theories and is constantly in search of new tools to test them, neuroscience is still in its infancy, searching for the right questions. It is a bit like the Wild West, full of unknowns, where a single individual has the same chance to find the gold of knowledge as an industry-scale institution. Yet big ideas and guiding frameworks are much needed, especially when such large programs are outlined. Large-scale, top-down, coordinated mega projects should carefully explore whether their resources are being spent in the most efficient manner. When the BRAIN Initiative is finished, we may end up with extraordinary tools that will be used to make increasingly precise measurements of the same problems if we fail to train the new generation of neuroscientists to think big and synthesize.

Science is not just the art of measuring the world and converting it into equations. It is not simply a body of facts but a gloriously imperfect interpretation of their relationships. Facts and observations become scientific knowledge when their widest implications are explored with great breadth and force of thinking. While we all acknowledge that empirical research stands on a foundation of measurement, these observations need to be organized into coherent theories to allow further progress. Major scientific insights are typically declared to be important discoveries only later in history, progressively acquiring credibility after scrutiny by the community and after novel experiments support the theory and refute competing ones. Science is an iterative and recursive endeavor, a human activity. Recognizing and synthesizing critical insights takes time and effort. This is as true today as it has always been. A fundamental goal in neuroscience is identifying the principles of neuronal circuit organization. My conviction about the importance of this goal was my major motivation for writing this volume.

Writing a book requires an internal urge, an itching feeling that can be suppressed temporarily with distraction but always returns. That itch for me began a while ago. Upon receiving the Cortical Discoverer Prize from the Cajal Club (American Association of Anatomists) in 2001, I was invited to write a review for a prominent journal. I thought that the best way to exploit this opportunity was to write an essay about my problems with scientific terms and argue that our current framework in neuroscience may not be on the right track. A month later arrived the rejection letter: “Dear Gyuri, . . . I hope you understand that for the sake of the journal we cannot publish your manuscript” [emphasis added]. I did not understand the connection between the content of my essay and the reputation of the journal. What was at stake? I called up my great supporter and crisis advisor, Theodore (Ted) Bullock at the University of California at San Diego, who listened carefully and told me to take a deep breath, put the issue on the back burner, and go back to the lab. I complied.

Yet, the issues kept bugging me. Over the years, I read as much as I could find on the connection between language and scientific thinking. I learned that many of “my original ideas” had been considered already, often in great detail and depth, by numerous scientists and philosophers, although those ideas have not effectively penetrated psychology or neuroscience. Today’s neuroscience is full of subjective explanations that often rephrase but do not really expound the roots of a problem. As I tried to uncover the origins of widely used neuroscience terms, I traveled deeper and deeper into the history of thinking about the mind and the brain. Most of the terms that form the basis of today’s cognitive neuroscience were constructed long before we knew anything about the brain, yet we somehow have never questioned their validity. As a result, human-concocted terms continue to influence modern research on brain mechanisms. I have not sought disagreement for its own sake; instead, I came slowly and reluctantly to the realization that the general practice in large areas of neuroscience follows a misguided philosophy. Recognizing this problem is important because the narratives we use to describe the world shape the way we design our experiments and interpret what we find. Yet another reason I spent so many hours thinking about the contents of this book is because I believe that observations held privately by small groups of specialists, no matter how remarkable, are not really scientific knowledge. Ideas become real only when they are explained to educated people free of preconceived notions who can question and dispute those ideas. Gergonne’s definition, cited at the beginning of this Preface, is a high bar. Neuroscience is a complex subject. Scientists involved in everyday research are extremely cautious when it comes to simplification— and for good reasons. 
Simplification often comes at the expense of glossing over depth and the crucial details that make one scientific theory different from another. In research papers, scientists write to other scientists in language that is comprehensible to perhaps a handful of readers. But experimental findings from the laboratory gain their power only when they are understood by people outside the trade.

Why is it difficult for scientists to write in simple language? One reason is that we are part of a community where every statement and idea should be credited to fellow scientists. Professional science writers have the luxury of borrowing ideas from anyone, combining them in unexpected ways, simplifying and illuminating them with attractive metaphors, and packaging them in a mesmerizing narrative. They can do this without hesitation because the audience is aware that the author is a smart storyteller and not the maker of the discoveries. However, when scientists follow such a path, it is hard to distinguish, both for them and the audience, whether the beautiful insights and earthshaking ideas were sparked from their own brains or from other hard-working colleagues. We cannot easily change hats for convenience and be storytellers, arbitrators, and involved, opinionated players at the same time because we may mislead the audience. This tension is likely reflected by the material presented in this book. The topics I understand best are inevitably written more densely, despite my best efforts. Several chapters, on the other hand, discuss topics that I do not directly study. I had to read extensively in those areas, think about them hard, simplify the ideas, and weave them into a coherent picture. I hope that, despite the unavoidable but perhaps excusable complexity here and there, most ideas are graspable.

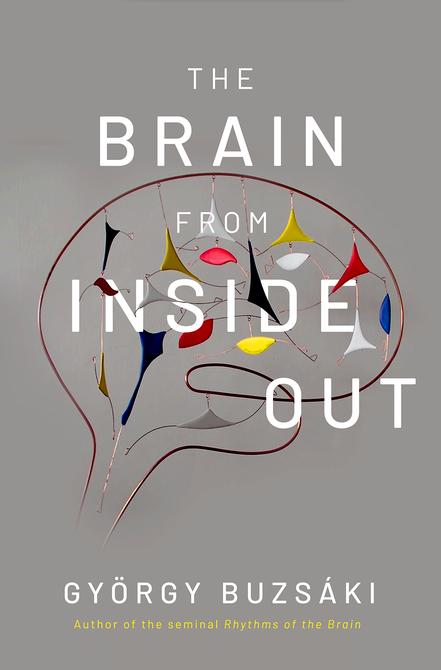

The core argument of this book is that the brain is a self-organized system with preexisting connectivity and dynamics whose main job is to generate actions and to examine and predict the consequences of those actions. This view—I refer to it as the “inside-out” strategy—is a departure from the dominant framework of mainstream neuroscience, which suggests that the brain’s task is to perceive and represent the world, process information, and decide how to respond to it in an “outside-in” manner. In the pages ahead, I highlight the fundamental differences between these two frameworks. Many arguments that I present have been around for quite some time and have been discussed by outstanding thinkers, although not in the context of contemporary neuroscience. My goal is to combine these ideas in one place, dedicating several chapters to discussing the merits of my recommended inside-out treatment of the brain.

Many extraordinary findings have surfaced in neuroscience over the past few decades. Synthesizing these discoveries so that we can see the forest beyond the trees and presenting them to readers is a challenge that requires skills most scientists do not have. To help meet that challenge, I took advantage of a dual format in this volume. The main text is meant to convey the cardinal message to an intelligent and curious person with a passion or at least respect for science. Expecting that the expert reader may want more, I expand on these topics in footnotes. I also use the footnotes to link to the relevant literature and occasionally for clarification. In keeping with the gold standards of scientific writing, I cite the first relevant paper on the topic and a comprehensive review whenever possible. When different aspects of the same problems are discussed by multiple papers, I attempt to list the most relevant ones.

Obviously, a lot of subjectivity and unwarranted ignorance goes into such a choice. Although I attempted to reach a balance between summarizing large chunks of work by many and crediting the deserving researchers, I am aware that I did not always succeed. I apologize to those whose work I may have ignored or missed. My goals were to find simplicity amid complexity and create a readable narrative without appearing oversimplistic. I hope that I reached this goal at least in a few places, although I am aware that I often failed. The latter outcome is not tragic, as failure is what scientists experience every day in the lab. Resilience to failure, humiliation, and rejection are the most important ingredients of a scientific career.

ACKNOWLEDGMENTS

No story takes place in a vacuum. My intellectual development owes a great deal to my mentor Endre Grastyán and to my postdoctoral advisor Cornelius (Case) Vanderwolf. I am also indebted to the many students and postdoctoral fellows who have worked with me and inspired me throughout the years.5 Their success in science and life is my constant source of happiness. Without their dedication, hard work, and creativity, The Brain from Inside Out would not exist simply because there would not have been much to write about. Many fun discussions I had with them found their way into my writing.

I thank the outstanding colleagues who collaborated with me on various projects relating to the topic of this book, especially Costas Anastassiou, László Acsády, Yehezkel Ben-Ari, Antal Berényi, Reginald Bickford, Anders Björklund, Anatol Bragin, Ted Bullock, Carina Curto, Gábor Czéh, János Czopf, Orrin Devinsky, Werner Doyle, Andreas Draguhn, Eduardo Eidelberg, Jerome (Pete) Engel, Bruce McEwen, Tamás Freund, Karl Friston, Fred (Rusty) Gage, Ferenc Gallyas, Helmut Haas, Vladimir Itskov, Kai Kaila, Anita Kamondi, Eric Kandel, Lóránd Kellényi, Richard Kempter, Rustem Khazipov, Thomas Klausberger, Christof Koch, John Kubie, Stan Leung, John Lisman, Michael Long, Nikos Logothetis, András Lörincz, Anli Liu, Attila Losonczy, Jeff Magee, Drew Maurer, Hannah Monyer, Bruce McNaughton, Richard Miles, István Mody, Edvard Moser, Lucas Parra, David Redish, Marc Raichle, John Rinzel, Mark Schnitzer, Fernando Lopes da Silva, Wolf Singer, Ivan Soltész, Fritz Sommer, Péter Somogyi, Mircea Steriade, Imre Szirmai, Jim Tepper, Roger Traub, Richard Tsien, Ken Wise, Xiao-Jing Wang, Euisik Yoon, László Záborszky, Hongkui Zeng, and Michaël Zugaro.

5. See them here https://neurotree.org/beta/tree.php?pid=5038

Although I read extensively in various fields of science, books were not my only source of ideas and inspiration. Monthly lunches with Rodolfo Llinás, my admired colleague at New York University, over the past five years have enabled pleasurable exchanges of our respective views on everything from world politics to consciousness. Although our debates were occasionally fierce, we always departed in a good mood, ready for the next round.

I would also like to thank my outstanding previous and present colleagues at Rutgers University and New York University for their support. More generally, I would like to express my gratitude to a number of people whose examples, encouragement, and criticism have served as constant reminders of the wonderful collegiality of our profession: David Amaral, Per Andersen, Albert-László Barabási, Carol Barnes, April Benasich, Alain Berthoz, Brian Bland, Alex Borbely, Jan Born, Michael Brecht, Jan Bures, Patricia Churchland, Chiara Cirelli, Francis Crick, Winfried Denk, Gerry Edelman, Howard Eichenbaum, Andre Fenton, Steve Fox, Loren Frank, Mel Goodale, Katalin Gothard, Charlie Gray, Ann Graybiel, Michale Fee, Gord Fishell, Mark Gluck, Michael Häusser, Walter Heiligenberg, Bob Isaacson, Michael Kahana, George Karmos, James Knierim, Bob Knight, Nancy Kopell, Gilles Laurent, Joe LeDoux, Joe Martinez, Helen Mayberg, David McCormick, Jim McGaugh, Mayank Mehta, Sherry Mizumori, May-Britt Moser, Tony Movshon, Robert Muller, Lynn Nadel, Zoltán Nusser, John O’Keefe, Denis Paré, Liset de la Prida, Alain Prochiantz, Marc Raichle, Pasko Rakic, Jim Ranck, Chuck Ribak, Dima Rinberg, Helen Scharfmann, Menahem Segal, Terry Sejnowski, László Seress, Alcino Silva, Bob Sloviter, David Smith, Larry Squire, Wendy Suzuki, Karel Svoboda, Gábor Tamás, David Tank, Jim Tepper, Alex Thomson, Susumu Tonegawa, Giulio Tononi, Matt Wilson, and Menno Witter. There are many more people who are important to me, and I wish I had the space to thank them all. Not all of these outstanding colleagues agree with my views, but we all agree that debate is the engine of progress. Novel truths are foes to old ones.

Without the freedom provided by my friend and colleague Dick Tsien, I would never have gotten started with this enterprise. Tamás Freund generously hosted me in his laboratory in Budapest, where I could peacefully focus on the problem of space and time as related to the brain. Going to scientific events, especially in far-away places, has a hidden advantage: long flights and waiting for connections in airports, with no worries about telephone calls, emails, review solicitations, or other distractions. Just read, think, and write. I wrote the bulk of this book on such trips. On two occasions, I missed my plane because I got deeply immersed in a difficult topic and neglected to respect the cruelty of time.

But my real thanks go to the generous souls who, at the expense of their own time, read and improved the various chapters, offered an invaluable mix of encouragement and criticism, saved me from error, or provided crucial insights and pointers to papers and books that I was not aware of. I am deeply appreciative of their support: László Acsády, László Barabási, Jimena Canales, George Dragoi, Janina Ferbinteanu, Tibor Koos, Dan Levenstein, Joe LeDoux, Andrew Maurer, Sam McKenzie, Lynn Nadel, Liset Menendez de la Prida, Adrien Peyrache, Marcus Raichle, Dima Rinberg, David Schneider, Jean-Jacques Slotine, Alcino Silva, and Ivan Soltész.

Sandra Aamodt was an extremely efficient and helpful supervisor of earlier versions of the text. Thanks to her experienced eyes and language skills, the readability of the manuscript improved tremendously. I would also like to acknowledge the able assistance of Elena Nikaronova for her artistic and expert help with figures and Aimee Chow for compiling the reference list.

Craig Panner, my editor at Oxford University Press, has been wonderful; I am grateful to him for shepherding me through this process. It was rewarding to have a pro like him on my side. I owe him a large debt of thanks.

Finally, and above all, I would like to express my eternal love and gratitude to my wife, Veronika Solt, for her constant support and encouragement and to my lovely daughters, Lili and Hanna, whose existence continues to make my life worthwhile.

The Problem

The confusions which occupy us arise when language is like an engine idling, not when it is doing work.

Ludwig Wittgenstein1

There is nothing so absurd that it cannot be believed as truth if repeated often enough.

William James2

A dream we dream together is reality.

Burning Man 2017

The mystery is always in the middle. I learned this wisdom early as a course instructor in the Medical School of the University at Pécs, Hungary. In my neurophysiology seminars, I enthusiastically explained how the brain interacts with the body and the surrounding world. Sensory stimuli are transduced to electricity in the peripheral sensors, which then transmit impulses to the midbrain and primary sensory cortices and subsequently induce sensation. Conversely, on the motor arc, the direct cortical

1. “Philosophy is a battle against the bewitchment of our intelligence by means of our language” (Wittgenstein: Philosophical Investigations, 1973). See also Quine et al. (2013). “NeuroNotes” throughout this book aim to remind us how creativity and mental disease are intertwined (Andreasen, 1987; Kéri, 2009; Power et al., 2015; Oscoz-Irurozqui and Ortuño, 2016). [NeuroNote: Wittgenstein, a son of one of Austria’s wealthiest families, was severely depressed. Three of his four brothers committed suicide; Gottlieb, 2009].

2. http://libertytree.ca/quotes/William.James.Quote.7EE1.

pathway from the large pyramidal cells of the primary motor cortex and multiple indirect pathways converge on the anterior horn motor neurons of the spinal cord, whose firing induces muscular contraction. There was a long list of anatomical details and biophysical properties of neurons that the curriculum demanded the students to memorize and the instructors to explain them. I was good at entertaining my students with the details, preparing them to answer the exam questions, and engaging them in solving mini-problems. Yet a minority of them—I should say the clever ones—were rarely satisfied with my textbook stories. “Where in the brain does perception occur?” and “What initiates my finger movement, you know, before the large pyramidal cells get fired?”—were the typical questions. “In the prefrontal cortex” was my usual answer, before I skillfully changed the subject or used a few Latin terms that nobody really understood but that sounded scientific enough so that my authoritative-appearing explanations temporarily satisfied them. My inability to give mechanistic and logical answers to these legitimate questions has haunted me ever since—as it likely does every self-respecting neuroscientist.3 How do I explain something that I do not understand? Over the years, I realized that the problem is not unique to me. Many of my colleagues— whether they admit it or not—feel the same way. One reason is that the brain is complicated stuff and our science is still in its young phase, facing many unknowns. And most of the unknowns, the true mysteries of the brain, are hidden in the middle, far from the brain’s sensory analyzers and motor effectors. Historically, research on the brain has been working its way in from the outside, hoping that such systematic exploration will take us some day to the middle and on through the middle to the output. I often wondered whether this is the right or the only way to succeed, and I wrote this book to offer a complementary strategy.

In this introductory chapter, I attempt to explain where I see the stumbling blocks and briefly summarize my alternative views. As will be evident throughout the following chapters, I do believe in the framework I propose. Some of my colleagues will side with me; others won’t. This is, of course, expected whenever the frontiers of science are discussed and challenged, and I want to state this clearly up front. This book is not to explain the understood but is instead an invitation to think about the most fascinating problems humankind can address. An adventure into the unknown: us.

3. The term “neuroscientist” was introduced in 1969 when the Society for Neuroscience was founded in the United States.

ORIGIN OF TODAY’S FRAMEWORK IN NEUROSCIENCE

Scientific interest in the brain began with the epistemological problem of how the mind learns the “truth” and understands the veridical, objective world. Historically, investigations of the brain have moved from introspection to experimentation, and, along this journey, investigators have created numerous terms to express individual views. Philosophers and psychologists started this detective investigation by asking how our sensors—eyes, ears, and nose—sense the world "out there," and how they convey its features to our minds. The crux of the problem lies right here. Early thinkers, such as Aristotle, unintentionally assumed a dual role for the mind: making up both the explanandum (the thing to-be-explained) and providing the explanans (the things that explain).4 They imagined things, gave them names, and now, millennia later, we search for neural mechanisms that might relate to their dreamed-up ideas.5

As new ideas about the mind were conceived, the list of things to be explained kept increasing, resulting in a progressive redivision of the brain’s real estate. As a first attempt, or misstep, Franz Joseph Gall and his nineteenth-century followers claimed that our various mental faculties are localized in distinct brain areas and that these areas could be identified by the bumps and uneven geography of the skull, a practice that became known as phrenology (Figure 1.1). Gall suggested that the brain can be divided into separate “organs,” which we would call “regions” today. Nineteen of the arbitrarily divided regions were responsible for faculties shared with other animals, such as reproduction, memory of things, and time. The remaining eight regions were specific to humans, like the sense of metaphysics, poetry, satire, and religion.6 Today, phrenology is ridiculed as pseudoscience because we know that bumps on the skull have very little to do with the shape and regionalization of the brain. Gall represented to neuroscience what Jean-Baptiste Lamarck represented to

4. Aristotle (1908). We often commit similar mistakes of duality in neuroscience. To explain our results, we build a “realistic” computational model to convince ourselves and others that the model represents closely and reliably the “thing-to-be-explained.” At the same time, the model also serves to explain the biological problem.

5. A concise introduction to this topic is by Vanderwolf (2007). See also Bullock (1970).

6. Gall’s attempt to find homes for alleged functions in the body was not the first one. In Buddhism, especially in Kundalini yoga, “psychological centers” have been distributed throughout the entire body, known as chakras or “wheels.” These levels are the genitals (energy), the navel (fire, insatiable power), heart (imaginary of art, dreams), the larynx (purification), mystic inwardly looking eye (authority), and the crown of the head (thoughts and feelings). The different levels are coordinated by the spine, representing a coiled serpent, in a harmonious rhythmic fashion. See also Jones et al. (2018).

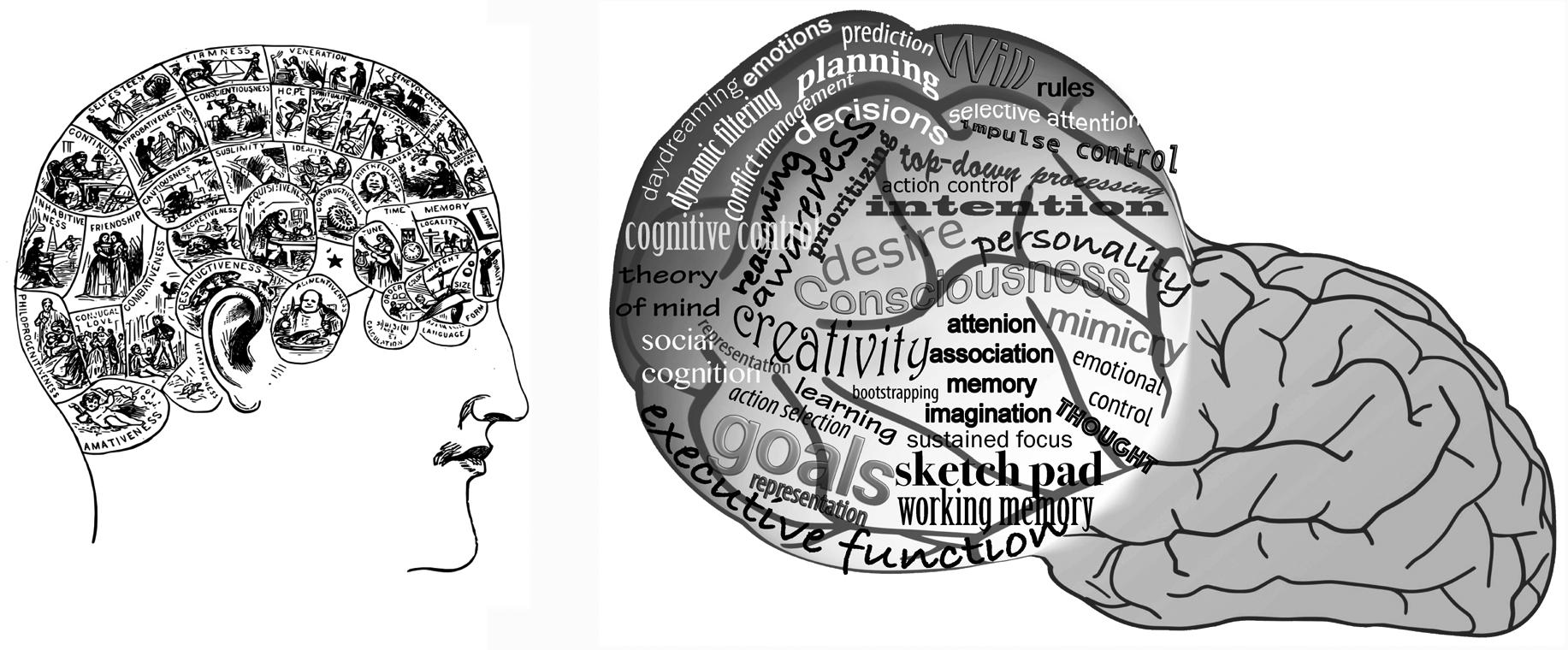

Figure 1.1. A: Franz Joseph Gall and his phrenologist followers believed that our various mental faculties are localized in distinct brain areas that could be identified by the bumps and uneven geography of the skull. Phrenology (or cranioscopy) is ridiculed as pseudoscience today. B: Imaging-based localization of our alleged cognitive faculties today. I found more than 100 cognitive terms associated with the prefrontal cortex alone, some of which are shown here.

evolution. A reminder that being wrong does not mean being useless in science. Surprisingly, very few have complained about the more serious nonsense, which is trying to find “boxes” in the brain for human-invented terms and concepts. This strategy itself is a bigger crime than its poor implementation—the failure to find the right regions.

There Are Too Many Notes

“There are simply too many notes. Just cut a few and it will be perfect,” said the emperor to the young Mozart. While this was a ludicrous line in the movie Amadeus, it may be a useful message today in cognitive neuroscience jargon. There is simply not enough space in the brain for the many terms that have accumulated about the mind prior to brain research (Figure 1.1). Anyone versed in neuroanatomy can tell you that Korbinian Brodmann’s monumental work on the cytoarchitectural organization of the cerebral cortex distinguished 52 areas in the human brain. Many investigators inferred that differences in intrinsic anatomical patterns amount to functional specialization. Contemporary methods using multimodal magnetic resonance imaging (MRI) have identified 180 cortical areas bounded by relatively sharp changes in cortical architecture, connectivity, and/or topography. Does this mean that now we have many more potential boxes in the brain to be equated with our preconceived ideas?7 But even with this recent expansion of brain regions, there are still many more human-concocted terms than there are cortical areas. As I discuss later in the book, cognitive functions usually arise from a relationship between regions rather than from local activity in isolated areas. But even if we accept that interregional interactions are more important than computation in individual areas, searching for correspondences between a dreamed-up term and brain activity cannot be the right strategy for understanding the brain.

THE PHILOSOPHICAL ROOTS

If cognitive psychology has a birthdate, it would be 1890, when The Principles of Psychology, written by the American philosopher and psychologist William James, was published.8 His treatment of the mind–world connection (the "stream of consciousness") had a huge impact on avant garde art and literature, and the influence of his magnum opus on cognitive science and today’s neuroscience is hard to overestimate. While each of its chapters was an extraordinary contribution at the time, topics discussed therein are now familiar and acceptable to us. Just look at the table of contents of that 1890 work:

Volume 1

Chapter IV—Habit

Chapter VI—The mind–stuff theory

Chapter IX—The stream of thought

Chapter X—The consciousness of self

Chapter XI—Attention

Chapter XII—Conception

Chapter XIII—Discrimination and comparison

Chapter XIV—Association

Chapter XV—Perception of time

Chapter XVI—Memory

Volume 2

Chapter XVII—Sensation

Chapter XVIII—Imagination

7. Brodmann (1909); Glasser et al. (2016). For light reading about a recent quantitative cranioscopy analysis, see Parker Jones et al. (2018).

8. James (1890).

Chapter XIX—The perception of “things”

Chapter XX—The perception of space

Chapter XXI—The perception of reality

Chapter XXII—Reasoning

Chapter XXIII—The production of movement

Chapter XXIV—Instinct

Chapter XXV—The emotions

Chapter XXVI—Will

Over the years, these terms and concepts assumed their own lives, began to appear real, and became the de facto terminology of cognitive psychology and, subsequently, cognitive neuroscience.

Our Inherited Neuroscience Vocabulary

When neuroscience entered the scene in the twentieth century, it unconditionally adopted James’s terms and formulated a program to find a home in the brain for each of them (e.g., in human imaging experiments) and to identify their underlying neuronal mechanisms (e.g., in neurophysiology). This strategy has continued to this day. The overwhelming majority of neuroscientists can pick one of the items from James’s table of contents and declare, “this is the problem I am interested in, and I am trying to figure out its brain mechanisms.” Notice, though, that this research program—finding correspondences between assumed mental constructs and the physical brain areas that are “responsible” for them—is not fundamentally different from phrenology. The difference is that instead of searching for correlations between mind-related terms and the skull landscape, today we deploy high-tech methods to collect information on the firing patterns of single neurons, neuronal connections, population interactions, gene expression, changes in functional MRI (fMRI), and other sophisticated variables. Yet the basic philosophy has remained the same: to explain how human-constructed ideas relate to brain activity.

Let’s pause a bit here. How on earth do we expect that the terms in our dreamed-up vocabulary, concocted by enthusiastic predecessors hundreds (or even thousands) of years before we knew anything about the brain, map onto brain mechanisms with similar boundaries? Neuroscience, especially its cognitive branch, has become a victim of this inherited framework, a captive of its ancient nomenclature. We continue to refer to made-up words and concepts and look for their places in the brain by lesion studies, imaging, and other methods. The alleged boundaries between predetermined concepts guide the search, rather than the relationships between interactive brain processes. We identify hotspots of activity in the brain by some criteria, correlate them with James’s and others’ codified categories, and mark those places in the brain as their residences. To drive home my point with some sarcasm, I call this approach “neo-phrenology.”9 In both phrenology and its contemporary version, we take ideas and look for brain mechanisms that can explain those ideas. Throughout this book, I refer to this practice as the “outside-in” strategy, as opposed to my alternative “inside-out” framework (Chapters 3, 5 and 13).

That brings me to the second needed correction in the direction of modern neuroscience: the thing-to-be-explained should be the activities of the brain, not the invented terms. After all, the brain gives rise to behavior and cognition. The brain should be treated as an independent variable because behavior and cognition depend on brain activity, not the other way around. Yet, in our current research, we take terms like emotions, memory, and planning as the to-be-explained independent categories. We will address these issues head-on when we discuss the role of correlations and causation in neuroscience in Chapter 2.

The Outside-In Program

How has the outside-in program come to dominate modern neuroscientific thought? We can find clues in James’s Table of Contents. Most of the titles have something to do with sensation and perception, which we can call the inputs to the brain coming from the outside. This is not by chance; William James and most early psychologists were strongly influenced by British empiricism, which in turn is based on Christian philosophies. Under the empiricist framework, knowledge derives from the (human) brain’s ability to perceive and interpret the objective world. According to the empiricist philosopher David Hume,10 all our knowledge arises from perceptual associations and inductive reasoning to reveal cause-and-effect relationships.11 Nothing can exist in the mind without first being detected by the senses because the mind is built out of sensations of the physical world. He listed three principles of association: resemblance, contiguity in time and place, and causation. For example, reading a poem can make you think of a related poem. Recalling one thing (cause) leads another thing to

9. Poldrack (2010) also sees a parallel between phrenology and the new fMRI mapping approach. However, his suggested program for a “search for selective associations” still remains within the outside-in framework.

10. [NeuroNote: Hume had several “nervous breakdown” episodes in his life.]

11. Associational theories assume that sequential events, such as our memories and action plans, are built by chaining associated pairs of events (a with b, b with c, etc.). However, neuronal sequences (Chapter 7) contain important higher-order links as well.