From prediction to precision

When machines start reasoning

Intelligence on the work surface

Designing the intelligent facility

Managing Director Adam Soroka

Editor Mark Venables

Artificial intelligence has moved from the margins of innovation to the centre of industrial strategy. In oil and gas, it is no longer a futuristic promise but a working reality, one that touches every aspect of exploration, production, and maintenance. What distinguishes today’s transformation from previous digital revolutions is the depth of integration. AI is not simply analysing operations; it is beginning to participate in them.

This issue of Future Digital Twin & AI explores that evolution through in-depth features that trace how intelligence is being engineered into the industry’s fabric. From data federation and contextualisation to agentic reasoning and immersive remote operations, each article examines a different stage in the journey toward a connected, autonomous, and human-centred energy enterprise.

The series begins with a look at how federated data infrastructures and interoperable platforms are transforming the way information flows across engineering and operational domains. It shows that the path to intelligence starts with context, with uniting data that has long been fragmented across silos and systems. The second feature moves into the realm of design, examining how digital twins and AI are redefining facility planning and introducing a new vocabulary of responsibility, security, and openness.

From there, the narrative expands into organisational transformation. The third article introduces agentic AI and retrieval-augmented generation, technologies that enable machines to reason and collaborate with humans through dynamic digital twins. The fourth turns the lens to the operational front line, exploring how AI-infused models are changing exploration, drilling, and predictive maintenance while highlighting the legal, cultural, and ethical frameworks needed to keep innovation grounded.

The final feature looks toward the horizon, where remote operations, immersive technologies, and AI converge within the industrial metaverse. It portrays a future where digital twins act as command hubs of distributed intelligence, enabling safer, more efficient, and more connected ways of working across global energy networks.

Together, these articles capture a defining moment for the sector. Intelligence is no longer confined to the control room or the data centre; it is being woven into every process, asset, and decision. The challenge now is to ensure that this new intelligence serves not only machines but the people who operate, design, and depend on them.

The future of oil and gas will not be written by algorithms alone. It will be shaped by the collaboration between human insight and digital reasoning, by the engineers who understand that the next great resource is not data itself, but the intelligence we create from it.

Mark Venables Editor

Future Digital Twin & AI

Turning data into context in the connected oilfield

Data is now the most valuable asset in oil and gas, but its true potential lies not in collection but in context. Transforming isolated engineering and operational data into an intelligent, connected fabric demands federation, interoperability, and a commitment to common standards that bridge silos and disciplines.

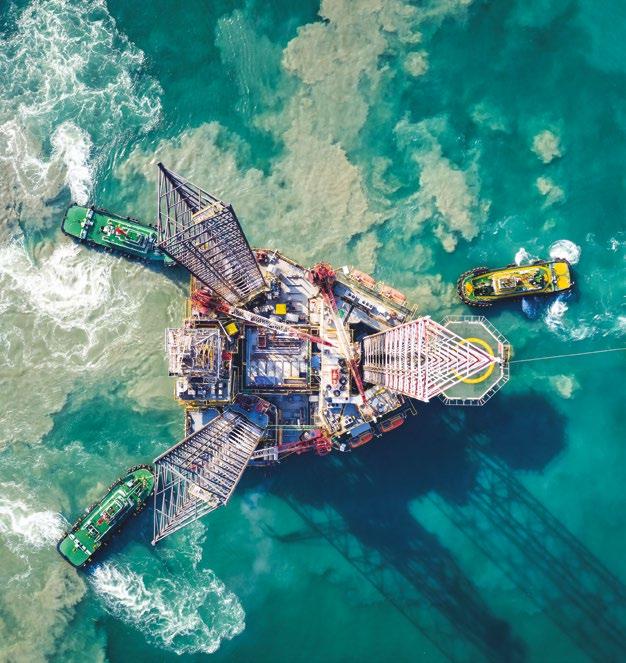

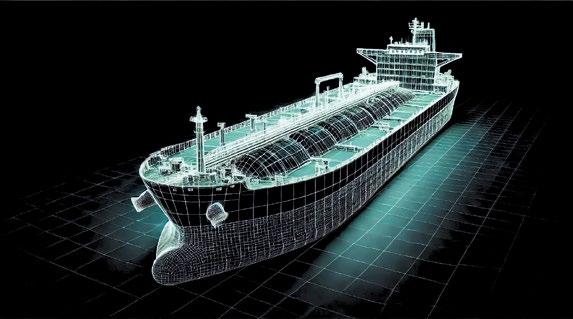

When prediction becomes precision in the digital oilfield

Machine learning and AI-infused models are reshaping how oil and gas companies explore, drill, and maintain their assets. The shift from reactive analysis to predictive intelligence is transforming operations, but its success depends as much on people and process as it does on algorithms.

Wassim Ghadban, SVP Digital and AI at Kent, explores how artificial intelligence and digital twins are converging to transform the oil and gas industry. By combining human expertise with AI-driven intelligence, this new era of automation promises to accelerate decarbonisation, enhance safety, and create truly autonomous operations across the energy value chain.

When machines start reasoning in the energy enterprise

Agentic AI and retrieval-augmented generation are beginning to change how oil and gas organisations think, plan, and act. Their integration with digital twins is creating intelligent systems that no longer just describe operations but interpret, reason, and decide across the entire value chain.

Intelligence on the industrial work surface

Artificial intelligence is transforming the digital twin from a passive representation into an operational command layer. By merging physics, data, and human expertise, this new generation of twins is becoming the decision surface for the modern energy enterprise.

Intelligence by design in the next generation oil facility

The oil and gas industry is entering a new era where artificial intelligence and digital twins are reshaping how facilities are conceived, built, and run. This convergence promises greater efficiency and resilience, but it also demands new thinking about cybersecurity, governance, and the responsible use of machine intelligence.

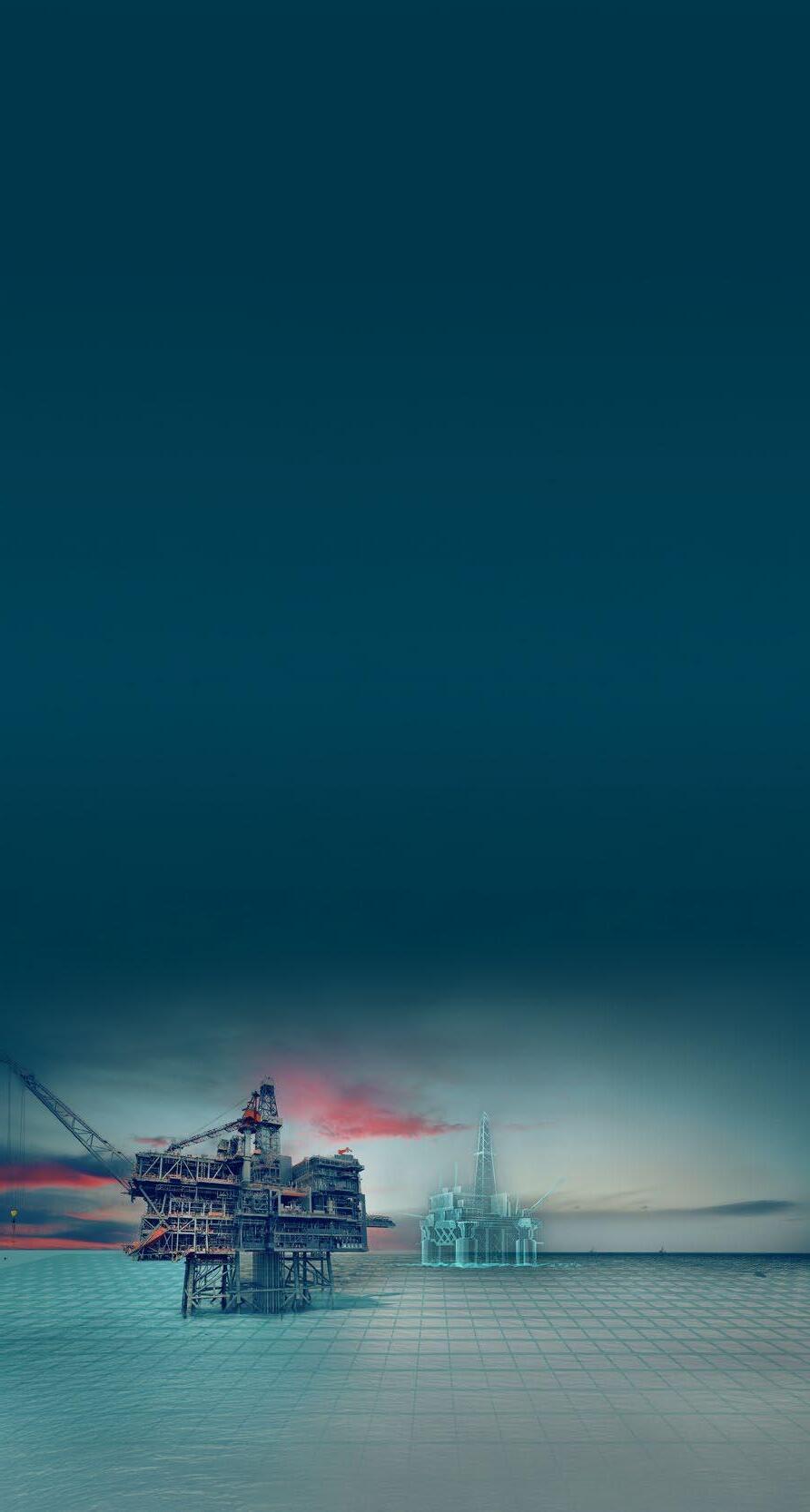

When distance becomes intelligence in the connected oilfield

The convergence of artificial intelligence, digital twins, and immersive technologies is redefining remote operations in oil and gas. What was once a matter of monitoring from afar is becoming a dynamic, data-rich environment where assets think, learn, and collaborate with people in real time.

Human intelligence at the heart of the digital twin revolution

The next phase of digital transformation in offshore asset management will not be defined by autonomy alone. It will depend on how effectively digital twins augment, rather than replace, the judgement of engineers tasked with safeguarding ageing infrastructure.

Digital twins and large language models redefine intelligence in the energy enterprise

Arvin Singh, Partner and Head of Digital Advisory MEA and SE-Asia at Wood, explains how the fusion of large language models and digital twins marks a decisive shift in how the energy industry creates and applies intelligence. By enabling natural-language interaction with complex operational data, this integration transforms digital twins from static systems of record into dynamic ecosystems of reasoning that drive efficiency, agility, and sustainable value across the entire energy value chain.

Final Word: When data learns to think

Artificial intelligence has been described as the next industrial revolution, but revolutions are rarely tidy. They disrupt assumptions, expose weaknesses, and redefine what expertise means. In oil and gas, the arrival of AI has done something even more profound: it has changed the rhythm of decision-making.


Data is now the most valuable asset in oil and gas, but its true potential lies not in collection but in context. Transforming isolated engineering and operational data into an intelligent, connected fabric demands federation, interoperability, and a commitment to common standards that bridge silos and disciplines.

Every major oil and gas operator has amassed vast quantities of data over decades of exploration, drilling, production, and maintenance. Yet most of it remains trapped in silos, locked away in incompatible formats, or confined to specific assets and teams. As digital transformation accelerates, the challenge is no longer gathering data but connecting and contextualising it. A federated data infrastructure that unites engineering, process, and operational information can turn this fragmented landscape into a coherent system of insight, enabling the industry to model, predict, and optimise with new precision.

At the heart of data federation lies the ambition to create a single, logical view of all operational and engineering data without physically centralising it. The approach recognises that most organisations operate across complex technology stacks, legacy systems, and varying data ownership models. Rather than forcing migration to a single repository, a federated platform connects data sources where they reside, harmonising their meaning and structure so they can be used seamlessly across applications.
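The idea of a single logical view over data that stays in place can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `FederatedView` class, the two in-memory "sources", and the tag and field names are hypothetical and do not represent any vendor's API. The point is only that the view stores how to reach and translate each source, not a copy of its data.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]


class FederatedView:
    """A single logical view over sources that stay where they are."""

    def __init__(self) -> None:
        self._sources: Dict[str, Tuple[Callable, Callable]] = {}

    def register(self, name: str,
                 fetch: Callable[[], Iterable[Record]],
                 to_common: Callable[[Record], Record]) -> None:
        # Keep only a way to reach the source and a mapping to the
        # common schema; no data is copied at registration time.
        self._sources[name] = (fetch, to_common)

    def query(self, asset_id: str) -> List[Record]:
        # Read each source at query time and harmonise on the fly.
        results = []
        for name, (fetch, to_common) in self._sources.items():
            for raw in fetch():
                rec = to_common(raw)
                if rec["asset_id"] == asset_id:
                    results.append({**rec, "source": name})
        return results


# Two illustrative sources with incompatible native formats (made-up data).
historian = [{"tag": "P-101.VIB", "val": 4.2},
             {"tag": "C-200.TEMP", "val": 88.0}]
maintenance = [{"equipment": "P-101", "last_service": "2024-03-01"}]

view = FederatedView()
view.register(
    "historian",
    lambda: historian,
    lambda r: {"asset_id": r["tag"].split(".")[0],
               "attribute": r["tag"].split(".")[1].lower(),
               "value": r["val"]},
)
view.register(
    "maintenance",
    lambda: maintenance,
    lambda r: {"asset_id": r["equipment"],
               "attribute": "last_service",
               "value": r["last_service"]},
)

pump = view.query("P-101")
for record in pump:
    print(record)
```

A production platform would add access control, caching, and a far richer semantic model, but the division of labour is the same: sources keep ownership of their data while the federation layer harmonises meaning.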

Companies such as Cognite have demonstrated the potential of this approach through Cognite Data Fusion, which contextualises information from multiple systems, from historians and control networks to maintenance logs and 3D models, to build a live digital representation of assets. AVEVA’s Unified Operations Centre applies a similar logic, integrating engineering and operational data within a single environment to allow operators to visualise performance in real time while maintaining traceability back to the source. These platforms illustrate how federation can enable interoperability without compromising data integrity or security.

Security and latency remain major considerations. Federation requires distributed access to data across operational technology (OT) and information technology (IT) networks, often spanning offshore installations, refineries, and global headquarters. Ensuring that access is governed, encrypted, and compliant with cybersecurity frameworks such as IEC 62443 is essential. Edge computing has become a critical enabler, allowing raw data to be processed locally before being synchronised with the enterprise layer. This minimises latency, reduces network load, and protects sensitive control information while still feeding contextualised insights into the cloud for analysis.

A strong foundation also depends on robust metadata management and governance. Without consistent frameworks to define ownership, access rights, and quality standards, even the most sophisticated platforms become fragmented. The most successful federations embed governance rules within the architecture itself, enforcing consistency while preserving flexibility. This balance is essential in oil and gas, where data is drawn from an extraordinary range of sources: seismic surveys, drilling logs, well completions, compressor stations, and control systems. When integrated through federation, these datasets form a single contextual layer that underpins everything from safety assurance to energy optimisation.

Creating a federation is as much a cultural transformation as it is a technical one. Engineering and operational data originate from systems built for specific purposes, each with its own conventions and interfaces. Achieving true interoperability demands standardisation and automation, the ability to link data sources in a way that is logical, repeatable, and scalable.

Industry initiatives such as the Open Group’s OSDU Data Platform have advanced common standards for subsurface and well data, while OPC UA provides a reliable framework for process automation interoperability. Combined with emerging metadata and ontology models, these initiatives make it possible to describe assets, measurements, and relationships consistently across an enterprise. Once that foundation exists, automation can handle the heavy lifting of mapping, transforming, and synchronising data.

Emerson’s DeltaV and Rockwell Automation’s FactoryTalk exemplify how automation frameworks can integrate contextual data at scale. By linking control systems, historians, and analytics engines, they allow operators to automate workflows such as condition monitoring and predictive maintenance. The benefit is twofold: operational efficiency improves, and engineers spend less time reconciling data. The goal is an environment where new sources can be connected with minimal manual effort and insights flow freely between engineering, operations, and business functions.

Automation also underpins trust. Algorithms detect anomalies in data streams, correct outliers, and even infer missing contextual information by identifying patterns across similar assets. What was once a manual and error-prone process is now reliable, traceable, and repeatable. Combined with AI-driven semantic search, this automation enables engineers to ask complex questions about asset health, throughput, or emissions performance and obtain answers derived from millions of interlinked data points rather than isolated systems.
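As a rough illustration of automated anomaly detection and outlier correction in a data stream, the sketch below flags readings that sit far from the median of their neighbours and replaces them with that median. The technique (a median-absolute-deviation test over a sliding window), the function name, the window size, the threshold, and the sample readings are all assumptions chosen for the example, not a description of any particular product.

```python
from statistics import median
from typing import List, Tuple


def flag_and_correct(values: List[float], window: int = 5,
                     threshold: float = 3.5) -> Tuple[List[float], List[int]]:
    """Return (cleaned series, indices of flagged outliers).

    Assumes at least two readings so every point has a neighbour.
    """
    cleaned = list(values)
    flagged = []
    half = window // 2
    for i, x in enumerate(values):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        # Compare each point with its neighbours, excluding itself.
        neighbours = values[lo:i] + values[i + 1:hi]
        med = median(neighbours)
        mad = median(abs(v - med) for v in neighbours)
        # 1.4826 makes MAD comparable to a standard deviation for
        # normally distributed data; the floor avoids division by zero.
        scale = max(1.4826 * mad, 1e-9)
        if abs(x - med) / scale > threshold:
            flagged.append(i)
            cleaned[i] = med  # correct the outlier, keep the index on record
    return cleaned, flagged


# Made-up pressure readings with one obvious spike.
readings = [50.1, 50.3, 49.9, 120.0, 50.2, 50.0, 50.4]
cleaned, flagged = flag_and_correct(readings)
print(flagged)
print(cleaned)
```

Returning the flagged indices alongside the cleaned series keeps the correction traceable: an engineer can always see which values were altered and why.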

Neutralising process and physical models

Once data is contextualised, it becomes possible to bridge the gap between simulation and reality. Oil and gas operations rely heavily on process and physical models, from reservoir simulations to compressor dynamics, but these models are often constrained by their assumptions and isolated from live data. Through federation, these models can be neutralised, decoupled from proprietary formats, and enriched with continuous feedback from the field.

This concept supports what many now call the ‘living model’, where simulations evolve as the asset operates. Schneider Electric’s EcoStruxure and Siemens’ Xcelerator ecosystems enable virtual models that adapt in real time as new data flows in from sensors, control systems, and enterprise databases. The result is not just better optimisation but a transformation in how engineers work. They can test strategies virtually, assess the impact of maintenance interventions, and understand energy performance before changes are implemented in the field. This loop between physical operations and digital insight closes the gap between planning and execution.

A neutral model also drives collaboration. Design teams, operations personnel, and maintenance engineers can share the same digital representation of an asset without duplicating data. A modification to a process parameter, pump curve, or control loop can instantly appear across the model. This visibility accelerates decision-making, improves accuracy, and reduces rework. It also instils confidence that digital decisions correspond precisely to physical behaviour, an essential condition for autonomous operations and future AI-driven optimisation.

The next step is coupling these living models with optimisation algorithms. By linking federated datasets to reinforcement learning systems or advanced analytics, engineers can explore operating conditions that balance output, cost, and emissions. The combination of simulation, data context, and autonomy has the potential to redefine asset performance management, turning every platform, rig, and refinery into a self-improving system.

A digital twin comes to life only when static and dynamic data coexist in harmony. Static data defines the structure (the equipment, topology, and design intent), while dynamic data adds movement through live process measurements, alarms, and events. Integrating these two within a common data model remains one of the most demanding challenges in industrial digitalisation.

Achieving it requires absolute clarity over data lineage and governance. Each data point must be traceable to its source, transformation rules must be visible, and underlying assumptions must be auditable. Without this transparency, trust erodes, and decision-makers revert to spreadsheets and intuition. Bentley Systems and Hexagon have made substantial progress by linking design and operational data, enabling engineers to move seamlessly from a digital P&ID to live asset performance data. This evolution transforms the twin from a visualisation tool into a strategic decision platform.
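One way to picture traceable lineage is a value that carries its source and the list of transformation rules applied to it. The sketch below is purely illustrative: the `TracedValue` class, the tag name `PT-3001`, and the reading are hypothetical, and real platforms record lineage in far richer metadata stores.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class TracedValue:
    value: float
    unit: str
    source: str                      # where the raw reading came from
    lineage: Tuple[str, ...] = ()    # ordered, human-readable rules applied

    def transform(self, rule: str, fn: Callable[[float], float],
                  unit: str) -> "TracedValue":
        # Every transformation returns a new value and appends its rule,
        # so the original reading and the full history stay auditable.
        return TracedValue(fn(self.value), unit, self.source,
                           self.lineage + (rule,))


# Hypothetical pressure reading converted from psi to bar.
raw = TracedValue(145.0, "psi", "historian:PT-3001")
bar = raw.transform("psi_to_bar: x * 0.0689476",
                    lambda x: x * 0.0689476, "bar")

print(bar.value, bar.unit)
print(bar.source, bar.lineage)
```

Because the record is immutable, the raw reading is never overwritten; anyone reviewing a dashboard figure can walk back through the rules to the original source.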

When contextual data models are unified, analytics becomes more powerful. Machine learning thrives on relationships: temperature to vibration, pressure to flow, age to probability of failure. Federation exposes these relationships across entire networks, allowing AI to identify subtle patterns that human intuition might overlook. Predictive maintenance, energy efficiency, and emissions reduction then evolve from discrete projects into continuous, embedded capabilities.

Shell, for example, has deployed digital twins that merge static design information with real-time process data, enabling early detection of deviations and continuous integrity monitoring. Engineers can now simulate maintenance strategies virtually, testing alternatives before acting. This fusion of context and computation marks a new phase in digital operations, one where data no longer just records the past but actively shapes the present.

For oil and gas executives, the implications of data federation and contextualisation stretch far beyond technology. The ability to unify data across disciplines redefines how organisations collaborate, innovate, and make strategic choices. It enables safer, more efficient, and more sustainable operations. Yet it also demands governance, cultural alignment, and long-term commitment. Data must be recognised as a strategic resource, not an IT project.

Progressive operators such as Equinor and BP demonstrate what this transformation looks like in practice. Equinor’s Omnia platform federates data from across the enterprise, supporting cross-domain analytics that improve both production and carbon management. BP’s digital twin programmes blend live data with engineering models to optimise uptime and reduce maintenance costs. Chevron, ADNOC, and Saudi Aramco are pursuing similar initiatives to create operational intelligence layers capable of connecting predictive maintenance, emissions management, and production optimisation into unified digital ecosystems.

Beyond the operational benefits lies a more strategic imperative. Contextualised data provides the foundation for decarbonisation and energy efficiency at scale. When emissions data is integrated with production and supply chain models, companies can measure, verify, and reduce carbon intensity with far greater accuracy. It also supports workforce transformation: engineers gain access to trusted, contextual information regardless of location, enabling remote collaboration and faster decision-making. As experienced personnel retire, this knowledge transfer becomes essential to maintaining performance across global operations.

As the industry continues to adapt to the energy transition, the capacity to turn data into context will define competitive advantage. Federation and interoperability are not abstract technical goals; they are the mechanisms by which organisations achieve agility in a volatile world. Those who master this capability will not merely automate operations but reshape how the oilfield thinks, turning data into the common language of decision-making.

Machine learning and AI-infused models are reshaping how oil and gas companies explore, drill, and maintain their assets. The shift from reactive analysis to predictive intelligence is transforming operations, but its success depends as much on people and process as it does on algorithms.

The digital transformation of oil and gas has entered a decisive stage. After years of experimentation, machine learning and artificial intelligence are now embedded in day-to-day workflows, influencing how decisions are made from the subsurface to the refinery floor. What began as an exercise in automation has evolved into a discipline of precision prediction, enabling operators to anticipate problems before they occur, adapt drilling parameters on the fly, and optimise maintenance schedules across global fleets.

Yet as the technology matures, it raises new questions about trust, governance, and the human role in a world increasingly guided by models. The challenge is no longer just about developing better algorithms but about integrating them responsibly, aligning human expertise, legal frameworks, and corporate culture with the pace of innovation.

Predictive maintenance remains one of the most tangible success stories for artificial intelligence in oil and gas. Using sensors, data historians, and physics-based simulations, operators can now detect patterns that signal early signs of equipment degradation. Machine learning models trained on years of operational data can predict when a compressor bearing will fail, when a pump’s vibration pattern deviates from normal behaviour, or when a subsea valve begins to drift out of tolerance.

The impact on safety and cost is measurable. Predictive analytics reduces unplanned shutdowns, lowers maintenance costs, and extends the life of critical assets. A study by Baker Hughes indicated that AI-driven maintenance strategies can reduce downtime by up to 30 per cent and cut inspection costs by nearly half. For offshore platforms, where every hour of production loss carries a heavy financial penalty, these savings quickly compound into millions of dollars.

Machine learning is also reshaping the maintenance culture itself. Instead of following rigid schedules, teams can prioritise interventions based on risk profiles generated by AI. Halliburton’s Digital Well Operations and SLB’s Delfi platform both incorporate predictive algorithms that learn continuously from field data, improving the precision of maintenance forecasts. Over time, these systems evolve from advisory tools into trusted operational partners that understand not only the physical condition of assets but also the context in which they operate.

However, the real advance lies in how predictive maintenance integrates with wider safety systems. By combining machine learning with digital twin technology, operators can simulate potential failure scenarios, test contingency plans, and validate emergency responses before they are needed. In effect, AI transforms maintenance from a reactive function into a proactive layer of risk management.

The rapid adoption of AI has forced the oil and gas sector to confront questions that reach far beyond technology. Data privacy, intellectual property, and regulatory compliance have become strategic considerations for executives deploying machine learning and generative models at scale.

At the heart of this debate lies the balance between innovation and control. AI systems thrive on large datasets, but those datasets often contain proprietary information (geological surveys, equipment specifications, or operational records) that cannot be shared freely. To protect sensitive material while still benefiting from AI insights, companies are adopting layered governance frameworks that define how data is accessed, anonymised, and audited.

BP and Shell, for instance, have both implemented internal ‘AI assurance’ programmes that evaluate algorithms for transparency, fairness, and security before deployment. These frameworks mirror emerging international standards such as ISO/IEC 23894 on AI risk management and the EU AI Act, which classify industrial AI as high-risk technology requiring continuous oversight.

Privacy concerns extend to digital twins, which are increasingly used as integration points for AI models. A twin may consolidate data from design, operations, and supply chain systems, creating a comprehensive digital replica of the facility. If governance is weak, that replica can inadvertently expose trade secrets or sensitive operational data. The use of retrieval-based AI architectures, where models query secure data stores rather than ingesting raw data, is becoming an important safeguard against such leaks.

Legal accountability is another emerging frontier. If an AI model makes a recommendation that leads to a costly error or safety incident, determining liability is complex. The industry is therefore beginning to adopt shared responsibility models in which both human operators and algorithm developers maintain joint accountability. This collaborative approach ensures that human oversight remains integral to every AI-driven decision.

Technology can only deliver its full potential when people understand, trust, and engage with it. The human dimension of AI adoption is often underestimated, yet it is crucial in an industry where safety culture and domain expertise remain paramount.

The introduction of AI-infused models is transforming job roles across the energy value chain. Geoscientists are becoming data interpreters, drilling engineers are learning to work with reinforcement learning algorithms, and maintenance teams are using predictive dashboards instead of manual logs. This shift requires not only technical upskilling but cultural adaptation. Employees must move from deterministic thinking to probabilistic reasoning, from expecting precise answers to interpreting patterns and probabilities.

Progressive operators are investing in training programmes that blend engineering fundamentals with data science. Chevron’s digital academy and Equinor’s machine learning centre of excellence are examples of initiatives that aim to bridge the gap between domain knowledge and analytical capability. These efforts are also reshaping workforce demographics. The influx of data specialists, software developers, and AI ethicists into traditionally mechanical environments is creating a more interdisciplinary culture.

Diversity itself has become an asset in the AI era. Teams that mix operational experience with computational expertise tend to produce more resilient models and challenge assumptions that homogeneous groups might overlook. In times of rapid change, psychological safety, the ability to question algorithms and challenge outputs without fear of reprisal, becomes as important as cybersecurity.

The goal is alignment: a workforce confident enough to use AI insights, sceptical enough to validate them, and empowered enough to integrate them into safe, productive workflows.

The digital twin is evolving into something far more sophisticated than a static 3D model. Dynamic twins, enriched by machine learning and advanced visualisation, are now forming the backbone of autonomous operations. They integrate process data, simulation models, and predictive analytics into immersive environments where engineers can observe, test, and interact with live systems in real time.

In drilling, this approach is particularly powerful. AI-infused twins can simulate downhole conditions with extraordinary accuracy, allowing engineers to adjust parameters such as mud weight, bit pressure, and rotation speed on the fly. Predictive models trained on historical drilling data can identify signs of kick, stuck pipe, or borehole instability before they escalate. When linked to automated control systems, these insights enable real-time optimisation with minimal human intervention.

Companies such as Nabors Industries, SLB, and Halliburton are already using AI-enhanced digital twins in their rig control platforms. Nabors’ SmartROS and Halliburton’s iCruise system, for example, combine sensor analytics and machine learning to refine drilling trajectories and improve rate of penetration. In production, dynamic twins help operators optimise energy consumption, monitor corrosion in pipelines, and simulate process changes before they are implemented.

The next frontier is the integration of digital twin operator training simulators (OTS) with AI. These immersive environments use 3D visualisation, augmented reality, and natural-language interaction to train crews for complex scenarios such as blowouts or equipment malfunctions. AI agents can evaluate trainee decisions, adapt scenarios in real time, and provide personalised feedback. The result is a continuous learning loop between human and machine, a digital rehearsal space for the autonomous oilfield.

Autonomy, however, must be approached with caution. As decision-making shifts from human intuition to algorithmic reasoning, maintaining transparency becomes vital. Engineers must always understand how and why an AI system has reached a conclusion. Responsible autonomy means embedding explainability, validation, and override mechanisms at every level of the control hierarchy.

LLMs and generative AI applications

Large language models (LLMs) and generative AI are the newest entrants to the oil and gas technology stack. While early applications focused on administrative tasks such as document summarisation, report generation, and technical writing, the latest wave of development is targeting more specialised industrial use cases.

Engineers can now query digital twins using natural language, asking complex questions such as “What is the estimated corrosion rate in the third separator under current conditions?” or “Show all compressors operating above 90 per cent capacity for more than 12 hours.” Generative models retrieve the relevant data, interpret it within context, and present clear visual answers. This human-AI interface dramatically lowers the barrier between data and decision.
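Behind a natural-language question of this kind there still has to be a precise, checkable filter. As a hedged sketch, the second example question could resolve to something like the function below. The function name, the compressor identifiers, and the load figures are invented for illustration, and the code assumes one load sample per hour, so a run of more than 12 consecutive samples above the limit is treated as "more than 12 hours".

```python
from typing import Dict, List


def sustained_above(loads: Dict[str, List[float]],
                    limit: float = 90.0,
                    min_hours: int = 12) -> List[str]:
    """Names of units whose load stayed above `limit` for more than
    `min_hours` consecutive hourly samples."""
    hits = []
    for name, series in loads.items():
        run = longest = 0
        for pct in series:
            # Extend the current run, or reset it when load drops.
            run = run + 1 if pct > limit else 0
            longest = max(longest, run)
        if longest > min_hours:
            hits.append(name)
    return hits


# Made-up hourly load percentages for three hypothetical compressors.
hourly = {
    "K-101": [95.0] * 13 + [70.0] * 11,                      # one 13-hour run
    "K-102": [95.0] * 6 + [80.0] + [95.0] * 6 + [60.0] * 11,  # two 6-hour runs
    "K-103": [85.0] * 24,                                     # never above 90
}

result = sustained_above(hourly)
print(result)
```

Keeping the translated query explicit like this is what allows an engineer to verify that the system answered the question that was actually asked.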

Microsoft, NVIDIA, and OpenAI are collaborating with major energy firms to develop domain-specific language models trained on engineering data and safety documentation. BP has tested natural-language interfaces for asset management, while Shell has piloted generative AI to streamline engineering design reviews. These tools accelerate workflows, reduce cognitive load, and free engineers to focus on strategic analysis rather than routine information retrieval.

However, generative AI introduces new risks. LLMs are prone to hallucination, producing plausible but incorrect information when confronted with ambiguous queries or incomplete data. In an industrial context, such errors can have serious consequences. To mitigate this, companies are adopting retrieval-augmented generation (RAG) techniques that anchor model responses to verified data sources. Each answer is supported by traceable references, ensuring that generative tools remain grounded in fact rather than probability.
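The retrieval half of RAG can be sketched without any language model at all. In the example below, passages are ranked by simple word overlap with the question, the best matches are placed in the prompt with their document identifiers, and no prompt is built when nothing relevant is found. The scoring method, function names, document identifiers, and document text are all assumptions made for illustration; production systems typically use vector embeddings and a governed document store, and the generation step itself is omitted here.

```python
import re
from typing import Dict, List, Optional, Tuple


def tokens(text: str) -> set:
    # Lower-case alphanumeric words; deliberately crude.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: Dict[str, str],
             top_k: int = 2, min_overlap: int = 2) -> List[Tuple[str, str]]:
    q = tokens(question)
    scored = []
    for doc_id, text in docs.items():
        overlap = len(q & tokens(text))
        if overlap >= min_overlap:
            scored.append((overlap, doc_id, text))
    # Highest overlap first; document id breaks ties deterministically.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]


def build_prompt(question: str, docs: Dict[str, str]) -> Optional[str]:
    passages = retrieve(question, docs)
    if not passages:
        return None  # nothing verified to ground an answer on
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return ("Answer using only the sources below and cite their ids.\n"
            f"{context}\nQuestion: {question}")


# Made-up verified documents.
verified = {
    "INSP-0042": "Separator V-300 wall thickness loss measured at "
                 "0.2 mm per year during inspection.",
    "MAINT-0007": "Compressor K-101 bearing replaced after vibration alarm.",
}

grounded = build_prompt("What is the wall thickness loss on separator V-300?",
                        verified)
ungrounded = build_prompt("Who won the football match?", verified)
print(grounded)
print(ungrounded)
```

Returning nothing when retrieval fails is the important design choice: the model is only ever asked to answer from sources an engineer can open and check.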

Security is another concern. Because LLMs require vast computational resources, many organisations rely on cloud-based inference services. Ensuring that confidential data is not exposed during this process demands robust encryption and private model deployment. The use of air-gapped AI systems, models trained and run entirely within secure corporate infrastructure, is becoming increasingly common in critical operations.

Despite these challenges, generative AI is opening new possibilities for creativity and collaboration. Engineers can co-create process documentation, maintenance schedules, or even control logic with AI systems that understand the language of engineering. The key is to maintain a balance between automation and human oversight, using AI as an assistant rather than an authority.

The convergence of machine learning, AI-infused models, and human expertise is redefining the operational rhythm of oil and gas. Predictive maintenance, intelligent drilling, and generative design are not isolated innovations but interconnected capabilities that rely on trust, governance, and adaptability.

The future of AI in this sector will depend on how effectively companies align people with process. Technology can provide foresight, but it is human discipline that ensures that foresight translates into value. Legal frameworks, data privacy, and ethical standards will continue to shape what is possible, while workforce diversity and continuous learning will determine how well organisations adapt.

AI has already proved that it can predict failure and optimise production. The next test is whether it can help build organisations that learn as fast as their machines. In that sense, the most important transformation is not technological but cultural, a shift from managing assets to managing intelligence.

Wassim Ghadban, SVP Digital and AI at Kent, explores how artificial intelligence and digital twins are converging to transform the oil and gas industry. By combining human expertise with AI-driven intelligence, this new era of automation promises to accelerate decarbonisation, enhance safety, and create truly autonomous operations across the energy value chain.

Digital technology alone is no longer seen as disruptive unless it incorporates AI. Automation is evolving beyond mere efficiency to true autonomy. AI is emerging as the secret weapon to accelerate emission reduction and enhance safety in the oil and gas industry. From generating insightful analyses to autonomously operating plants and providing intelligent recommendations, AI is set to redefine the future of this sector.

In the energy sector, there is no point in nice-to-have technologies and applications; they need to build value. With a holistic approach to building the digital twin, companies can realize improvements in project execution, operational efficiency, sustainability, and revenue.

Generative AI sets itself apart from other forms of machine learning and artificial intelligence in its generative nature. Rather than merely analysing data, generative AI produces new data across all forms of media – text, code, images, audio, video, and more. This ability to generate new content opens up a world of possibilities in the oil and gas industry. One of the most remarkable capabilities of AI in oil and gas is its potential to autonomously generate comprehensive analyses and operational recommendations. Imagine AI systems creating detailed operational reports, predictive maintenance schedules, and even safety protocols, all based on real-time data and historical trends. Imagine a scenario where an AI system continuously monitors plant operations, identifies inefficiencies, and provides real-time optimisation suggestions. This level of intelligence not only boosts productivity but also ensures higher safety standards and significant reductions in downtime and emissions. The potential for innovation is immense.

The Covid-19 pandemic highlighted many gaps in project operations in the energy industry. We quickly saw the many challenges of remotely operating plants and being efficient from any location at any time. It became clear that we had to find a way to unify the operations of assets, reduce repetitive tasks, and improve efficiencies. It became apparent that digital technology was key.

However, while technology is everywhere today, applying it in the right way isn’t nearly as common. We see frequent failures related to digital transformation and the implementation of new technologies because the people working on them don’t understand the challenges of the industry. Meanwhile, those in the industry who have the knowledge of the systems, processes, and challenges do not necessarily understand emerging technologies.

Ghadban goes on to discuss the changes in practices and ways of working required to embrace AI, and the importance of collaboration. “Let us embrace and thrive in this revolution, augmenting our capabilities with AI rather than replacing them. By maintaining our core values and leveraging AI, we can shape a sustainable and innovative future for the oil and gas industry,” he concludes.

Having great technology alone is never enough if companies are unable or unwilling to apply it effectively. In the energy sector, the digital twin is known as the digital plant. It is the correlation of data coming from different systems and timelines to create a system of systems.

There are many definitions of “digital twin” related to different industries. The first concept was developed by Michael Grieves in 2002 and was aimed at using the digital environment to run simulations. The first application was by NASA in 2010, but since then, there have been many others: Google Maps, smart cities, autonomous vehicles, health-care simulations, construction planning, and more. Every sector has its own definition and use cases. Many of these uses focus on processed data. In the energy sector, we have both static and dynamic data. With the operations phase accounting for about 80% of a project life cycle, real-time information is fundamental. The progressive handover of information during a project starts with the development of a class library: the classification of data and information for every piece of equipment, asset, and project.

Digital twin—the correlation of data from different systems to create one single source of truth; a system of systems where correlated data is represented in digital context, i.e., “the digital asset.” A digital twin is about how to display that information in context.

Since its inception, we have extended the concept of a digital twin to create maturity levels. Digital twin technology can be implemented at a very early stage of the project life cycle. While at first, it can be as simple as a point cloud, a 3D model can be enriched with data and improved by emerging technology to create increasing dimensions of information and complexity.

Once information is correlated and represented, you can do more with it: Analysis, intelligence, and applications can sit on top of this correlation of data. AI can be applied to create insights and embed additional functionalities. The brain of data, the digital twin, can be used for remote monitoring and autonomous operations—the goal of oil and gas operators. Once there is a digital twin to represent information in context, it can help inform the way designs are reviewed and create immersive tools to help visualize plants before they are even built.

The energy sector has invested in automation since the 1990s, but while it innovated in digital technologies, its processes changed more slowly. After all, technology is just 10% of innovation; the rest is about people. That said, there has been a great acceleration in technology in the energy sector recently, both in conventional and renewable energy.

Recently, we have seen many applications of AI in IIoT data to create insights and improve the safety of workers. Digital twins are undoubtedly the key to reducing costs, downtime, and emissions and improving efficiency and safety, which are all vital to a sustainable future.

What we really need to achieve is an integrated life cycle in a digital environment where people, processes, assets, and systems are connected for efficient project execution and unified operations using AI and advanced technologies. In this way, the combination of AI and digital twin technology is set to power the future of the oil and gas industry.

Agentic AI and retrieval-augmented generation are beginning to change how oil and gas organisations think, plan, and act. Their integration with digital twins is creating intelligent systems that no longer just describe operations but interpret, reason, and decide across the entire value chain.

The oil and gas industry has always been defined by scale and complexity. From deep-water platforms to LNG liquefaction trains and downstream refining, each operation produces a torrent of data that has traditionally been monitored, analysed, and acted upon by humans. Today, that model is shifting. Artificial intelligence is moving beyond pattern recognition and automation into a new phase of reasoning and collaboration.

Agentic AI and retrieval-augmented generation (RAG) represent a fundamental step in that evolution. Rather than generating text or analysis in isolation, these systems actively retrieve relevant, verified information from trusted sources, integrate it with live operational data, and produce contextual recommendations that humans can test and implement. When connected to a digital twin, this intelligence becomes operational: a self-updating model that interprets conditions, proposes actions, and can even trigger automated responses under controlled parameters.

The combination of agentic AI and digital twins is redefining the structure of control in oil and gas operations. Where traditional automation relied on fixed logic and human intervention, an operational twin enhanced with agentic AI can evaluate multiple variables simultaneously and adapt in real time.

In distributed control systems, this means a shift from reactive control loops to proactive orchestration. An AI agent can continuously analyse plant data, recognise deviations, and recommend corrective actions before performance deteriorates. In advanced process control, it can test those recommendations against simulation data inside the twin, verifying the likely outcome before suggesting implementation in the live system. The result is an operating model that blends the rigour of engineering with the agility of AI reasoning.
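The idea of testing a recommendation inside the twin before it reaches the live system can be sketched in a few lines. The example below is illustrative only: the `Recommendation` record, the toy energy-balance function `simulate_outlet_temp`, the tag name, and the temperature limit are all assumptions standing in for a real twin simulator and real operating limits.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    tag: str                 # hypothetical setpoint name
    heater_duty_kw: float    # proposed new heater duty


def simulate_outlet_temp(feed_kg_s: float, heater_duty_kw: float) -> float:
    """Toy stand-in for the twin's simulator: steady-state energy balance."""
    inlet_temp_c = 25.0      # assumed inlet temperature
    cp_kj_per_kg_k = 2.0     # assumed specific heat of the feed
    return inlet_temp_c + heater_duty_kw / (feed_kg_s * cp_kj_per_kg_k)


def vet_recommendation(rec: Recommendation, feed_kg_s: float, limit_c: float) -> dict:
    """Run the proposal through the simulator and report whether it stays within limits."""
    predicted = simulate_outlet_temp(feed_kg_s, rec.heater_duty_kw)
    return {
        "tag": rec.tag,
        "predicted_outlet_c": predicted,
        "within_limit": predicted <= limit_c,
    }


safe = vet_recommendation(Recommendation("H-101.duty", 3000.0), feed_kg_s=10.0, limit_c=180.0)
unsafe = vet_recommendation(Recommendation("H-101.duty", 3400.0), feed_kg_s=10.0, limit_c=180.0)
```

Only a proposal that passes this kind of check would be surfaced to the operator; one that fails is rejected before anyone has to act on it.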

Companies such as Honeywell, AspenTech, and Yokogawa are already embedding generative and agentic intelligence into their control and optimisation platforms. AspenTech’s industrial AI suite, for instance, uses hybrid models that combine first-principles engineering with data-driven inference, enabling predictive control that learns and refines itself over time. Honeywell’s Experion platform and Yokogawa’s OpreX suite are evolving towards architectures where AI agents support operators with real-time decision insights rather than static dashboards.

This transformation also extends to organisational workflows. Process engineers, control specialists, and data scientists are beginning to work together in cross-functional environments where the digital twin becomes a shared decision interface. The agentic AI acts as an intermediary, retrieving contextual information from design databases, maintenance histories, and live sensors to support each discipline’s reasoning. The model becomes not just a mirror of operations but a collaborative workspace for continuous optimisation.

The integration of agentic AI and RAG into digital twin ecosystems is influencing every segment of the oil and gas value chain. In upstream exploration and production, AI agents trained on geological and production data can assist reservoir engineers in evaluating well performance and predicting decline curves with greater precision. When connected to a live operational twin, these agents can simulate the impact of different drilling parameters or injection strategies, enabling faster and more confident decision-making.

In midstream operations, the technology enhances the optimisation of LNG plants and gas networks. AI models can balance throughput against energy consumption, analyse heat exchanger fouling patterns, or predict compressor performance under variable ambient conditions. Digital twins feed this intelligence back into planning systems, reducing downtime and improving energy efficiency. For downstream refiners, agentic AI offers an even broader opportunity: to integrate market data, energy costs, and maintenance schedules into production planning, allowing refineries to adapt more dynamically to fluctuations in demand and feedstock quality.

The cumulative impact is a redefinition of the enterprise itself. Agentic AI transforms the flow of value across functions by turning information into an operational resource. Instead of separate silos for design, operations, and maintenance, the digital twin becomes a living value chain, one that learns continuously from every action, transaction, and condition.

BP, Shell, and ADNOC are among the operators experimenting with such frameworks. BP has explored AI-driven process optimisation within its global refining network, while ADNOC has piloted digital twin applications that integrate AI reasoning to optimise flare performance and energy intensity. These initiatives illustrate how AI-augmented twins can support both profitability and sustainability by aligning real-time data with strategic business objectives.

Limitations and controls of AI models

Despite the promise of agentic intelligence, the technology introduces new forms of risk. AI systems require access to large volumes of data to generate useful insights, but in an industry built on proprietary knowledge and intellectual property, data exposure can be as dangerous as data loss.

Retrieval-augmented generation offers partial mitigation by separating data storage from model logic. In a RAG framework, the AI model queries a secure knowledge base rather than retaining information internally. This prevents sensitive or proprietary data from being permanently encoded within the model’s parameters, reducing the risk of leakage through unintended outputs. Vendors such as Microsoft, IBM, and OpenAI are refining this approach for industrial use, introducing private retrieval layers that ensure all data remains within the operator’s environment.
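The separation between knowledge store and model can be illustrated with a minimal retrieval sketch. Everything here is an assumption made for illustration: the in-memory `KNOWLEDGE_BASE`, its document identifiers and contents, and the simple bag-of-words cosine similarity, which stands in for the embedding search a production system would more likely use. No language model is called; the sketch stops at assembling the prompt that would be passed to one.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base held inside the operator's environment.
KNOWLEDGE_BASE = {
    "doc-001": "Compressor C-101 vibration alarm limit is 7 mm/s per maintenance procedure.",
    "doc-002": "Heat exchanger E-205 was cleaned in March after fouling reduced duty.",
    "doc-003": "Flare header pressure must stay below the design limit during startup.",
}


def tokenize(text: str) -> Counter:
    """Lower-case bag of words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list:
    """Return the k passages most similar to the query, with their source ids."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE.items(), key=lambda kv: cosine(q, tokenize(kv[1])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 1) -> str:
    """Assemble a prompt that carries the retrieved text and its source ids."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, k))
    return f"Answer using only the context below.\n{context}\nQuestion: {query}"


prompt = build_prompt("What is the vibration alarm limit for compressor C-101?")
```

Because the documents are fetched at query time and cited by identifier, access to them can be revoked or audited without retraining the model.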

However, technical safeguards are only part of the solution. Oil and gas companies must establish governance frameworks that define what data models can access, how results are validated, and who holds accountability for their recommendations. Model drift, the gradual divergence of an AI system’s behaviour from its intended design, remains a critical concern. Continuous monitoring, retraining, and validation are necessary to ensure that AI systems remain aligned with operational goals and regulatory requirements.
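One simple way to make drift monitoring concrete is to compare a model's recent prediction error with the error recorded when it was last validated. The sketch below assumes a mean-absolute-error metric and a tolerance factor of 1.5; both are illustrative choices rather than recommended values, and the sample readings are invented.

```python
from statistics import mean


def mean_abs_error(predicted, observed) -> float:
    """Average absolute gap between model output and plant measurement."""
    return mean(abs(p - o) for p, o in zip(predicted, observed))


def drift_detected(baseline_mae: float, recent_predicted, recent_observed, tolerance: float = 1.5):
    """Flag drift when recent error exceeds the validated baseline by the tolerance factor."""
    recent_mae = mean_abs_error(recent_predicted, recent_observed)
    return recent_mae > tolerance * baseline_mae, recent_mae


# Invented readings: the model was validated with a baseline error of 0.5 units.
stable, stable_mae = drift_detected(0.5, [10, 10, 10], [10.5, 9.5, 10.5])
drifting, drifting_mae = drift_detected(0.5, [10, 10, 10], [11, 12, 11.5])
```

A flag of this kind would normally trigger review and revalidation rather than automatic retraining, which keeps accountability with the people named in the governance framework.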

There are also limits to what AI can interpret. Complex physical systems still require human intuition, particularly when cause-and-effect relationships are poorly defined. An AI may predict a pressure anomaly, but understanding whether that anomaly stems from instrumentation error, corrosion, or a process upset often requires engineering judgement. Recognising these boundaries is essential to maintaining safety and reliability in hybrid human-machine environments.

Practising secure and responsible AI

Responsible AI in oil and gas extends beyond compliance; it is a matter of operational safety and social licence. As AI agents increasingly influence decision-making in safety-critical contexts, from pressure management to emergency response, operators must ensure that these systems behave predictably and transparently.

Explainability is therefore becoming a design requirement. AI models must provide not just answers but rationales that can be scrutinised by engineers and regulators alike. This is especially important in environments governed by strict process safety standards such as IEC 61511 or API 554. Agentic AI can support compliance by documenting the reasoning behind each recommendation, linking every output to the data sources and assumptions that informed it.

Cybersecurity must evolve in parallel. Each AI component, from inference engines to retrieval databases, expands the attack surface of the digital twin ecosystem. Techniques such as differential privacy, homomorphic encryption, and secure multiparty computation are being explored to protect data while still allowing AI to learn from it. Meanwhile, operators are developing AI assurance programmes, systematic assessments that evaluate models for bias, robustness, and resilience under adversarial conditions.

The cultural dimension of responsibility is equally important. Engineers and managers need to understand not only how AI works but how it fails. Training programmes are emerging that combine process safety with AI literacy, helping personnel interpret probabilistic outputs and respond appropriately. In this respect, AI becomes not a black box but a partner in decision-making, one whose authority is tempered by transparency and human oversight.

End-to-end digital twin verification and validation

One of the most promising applications of agentic AI is the automation of verification and validation within digital twin environments. Complex twins require continuous calibration to remain accurate, yet manual validation is labour-intensive and prone to error. AI can streamline this process by comparing predicted and observed data across thousands of parameters, identifying discrepancies, and recommending recalibration where necessary.

Uncertainty quantification, understanding the confidence bounds of predictions, is an area where AI provides particular value. Machine learning algorithms can analyse historical deviations to estimate error margins and assign probability scores to model outputs. When combined with RAG-enabled retrieval of design and maintenance data, this allows engineers to understand not only what the model predicts but how reliable that prediction is.
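As a rough illustration of estimating error margins from historical deviations, the sketch below derives a bias and a two-standard-deviation band from past residuals and attaches them to a new prediction. It assumes the residuals are roughly normally distributed and stationary, which would need checking on real data; the function names and sample values are hypothetical.

```python
from statistics import mean, stdev


def error_band(historical_predicted, historical_observed, z: float = 2.0):
    """Return (bias, half_width) estimated from past prediction residuals."""
    residuals = [o - p for p, o in zip(historical_predicted, historical_observed)]
    return mean(residuals), z * stdev(residuals)


def predict_with_bounds(prediction: float, bias: float, half_width: float):
    """Shift the prediction by the historical bias and attach lower and upper bounds."""
    centre = prediction + bias
    return centre - half_width, centre + half_width


def needs_recalibration(observed: float, lower: float, upper: float) -> bool:
    """An observation outside the band suggests the twin should be recalibrated."""
    return not (lower <= observed <= upper)


# Invented history: the twin predicted 100 each time; the plant read slightly differently.
bias, half_width = error_band([100, 100, 100, 100], [101, 99, 102, 98])
lower, upper = predict_with_bounds(100.0, bias, half_width)
```

The same band can be reported alongside each model output, so an engineer sees a range rather than a single number.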

NVIDIA, working with partners such as Schlumberger and Rockwell Automation, is advancing real-time simulation frameworks that integrate AI validation loops directly into industrial twins. These systems use GPU-accelerated computation to test multiple operating scenarios simultaneously, comparing outcomes to live sensor data to maintain alignment between the virtual and physical asset. The result is a self-correcting model that can evolve as the plant ages or as new data sources become available.

Verification extends beyond the twin itself. AI can evaluate the entire digital supply chain, from sensor calibration and network latency to data pipeline consistency, ensuring that every layer of the system contributes reliably to the final insight. By embedding these verification routines, the digital twin becomes a continuously audited environment where trust is measurable rather than assumed.

The intelligence continuum

The convergence of agentic AI, RAG, and digital twins marks a shift from automation to cognition. Oil and gas companies are no longer building systems that merely execute tasks; they are creating environments capable of reasoning about those tasks, drawing from a constant flow of structured and unstructured data.

The implications reach across the organisation. For engineers, this means faster problem-solving and greater confidence in decision-making. For executives, it offers a transparent link between operational performance and strategic outcomes. And for the industry as a whole, it opens the door to new forms of collaboration, where companies, suppliers, and regulators can interact through shared data models without sacrificing confidentiality or control.

Challenges remain. The integration of AI with legacy infrastructure requires patience and precision, and regulatory frameworks are still catching up with the pace of innovation. Yet the direction is unmistakable. As agentic AI becomes embedded within the digital backbone of oil and gas enterprises, it will redefine how intelligence is organised, distributed, and applied.

The next generation of energy companies will operate less like hierarchies of departments and more like networks of reasoning systems, human and digital, cooperating to anticipate, adapt, and act. Digital twins will no longer be passive mirrors of reality but active participants in shaping it. In that future, the measure of success will not be how much data a company possesses, but how effectively it turns that data into thought.

Artificial intelligence is transforming the digital twin from a passive representation into an operational command layer. By merging physics, data, and human expertise, this new generation of twins is becoming the decision surface for the modern energy enterprise.

Digital transformation in oil and gas has entered its most consequential phase. The focus has shifted from connecting systems to connecting decisions, from visualising data to acting upon it. For Øystein Hole, Chief Product Officer at Kongsberg Digital, this change marks the beginning of what he calls the “industrial work surface”, where engineers, AI agents, and live operational data coexist in a unified environment to drive better decisions.

The role of the digital twin has evolved. It is no longer a mirror of the plant but an active participant in daily operations, combining operational technology (OT) and information technology (IT) to support prediction, simulation, and execution. In Hole’s view, the introduction of AI has elevated the twin from a static model into an intelligent decision-support system that transforms how people interact with data.

“The twin has become an operational decision layer,” he explains. “It unifies data, orchestrates workflows, and provides agentic copilots for industrial intelligence that keeps humans in the loop.”

Thousands of users across the globe now collaborate on shared twin environments, accessing real-time insights from remote assets and interacting with systems through natural-language interfaces. Engineers can explore the digital plant as though walking through it, observing performance, diagnosing issues, and simulating outcomes without leaving the control room. This, Hole argues, is the future of work.

Digitalisation in oil and gas has often stumbled at the point of adoption. The technology is powerful, but the tools are frequently designed for experts rather than the people who use them every day. Hole believes that true transformation begins when frontline workers find technology intuitive, accessible, and immediately useful.

“The goal is to transform how people execute their daily tasks by empowering them to make better decisions,” he says. User experience, in this context, is not a matter of aesthetics but of operational effectiveness. At sites from Equinor to Shell and LNG Canada, the company’s digital twin deployments have been measured by one test: whether they make a technician’s or production coordinator’s day easier.

Feedback from the field suggests they do. One coordinator described having “information at my fingertips” that previously required multiple systems and manual effort. That accessibility has become a decisive factor in scaling adoption.

The twin, Hole notes, connects people and workflows across locations, giving everyone a common understanding of operational reality. A production planner in one region and a maintenance supervisor in another can collaborate through the same digital interface, viewing the same data in context. It removes ambiguity, accelerates response, and encourages consistency across the organisation.

Scaling intelligence across assets

One of the challenges facing digital twin adoption has been scale. Many organisations succeed with a single-site deployment but struggle to expand. The problem is rarely technical; it is architectural. To scale effectively, the twin must be designed for repeatability while preserving the individuality of each asset.

Hole describes this as a “portfolio approach”. The core data model and services are engineered to be transferable, allowing customers to onboard asset after asset without reinventing the foundation. Once patterns of operation are established, they can be replicated across regions and facilities, accelerating transformation across the enterprise.

This approach is paying dividends. Kongsberg Digital now delivers digital twin deployments to major operators globally, including multi-asset programmes in the Middle East. By establishing consistent data standards and collaborative workflows, the twin becomes a bridge between geographies, a single digital language for industrial performance.

Scaling, however, is not simply a question of duplication. Each deployment enriches the collective intelligence of the system. The more assets connected, the more patterns can be recognised, and the faster AI models can learn from the variability inherent in real-world operations. Over time, this creates an organisational feedback loop, a continuously improving body of operational knowledge shared across sites.

The question often raised by engineers is how AI can be trusted to make recommendations in environments governed by physical law. Hole’s answer lies in hybrid modelling, where machine learning is constrained by physics.

He quotes NVIDIA’s Jensen Huang: “For AI to go beyond the screen and function in the physical world, we need to understand real-world forces better.” Hole agrees, adding that the real world obeys physics, not statistics.

Hybrid machine learning, combining data-driven models with physics-based simulations, anchors AI in reality. In this framework, data-driven models are trained using synthetic data from simulators that capture the physics of heat transfer, friction, and flow. This ensures that the algorithms learn from valid physical scenarios rather than abstract patterns. “The next wave of intelligence comes from combining the physical world, simulation, AI, and human expertise,” Hole says.
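The pattern of training a data-driven model on simulator output can be shown with a deliberately small example. Here a toy physics function generates synthetic pressure-drop data, and a one-parameter surrogate is fitted to it by least squares. The quadratic flow relationship, the friction coefficient, and all function names are illustrative assumptions, not details of any vendor's simulator.

```python
def simulate_pressure_drop(flow_m3_h: float, friction_coeff: float = 0.02) -> float:
    """Toy physics simulator: turbulent pressure drop scales with flow squared."""
    return friction_coeff * flow_m3_h ** 2


def make_synthetic_dataset(flows):
    """Generate (flow, pressure drop) training pairs from the simulator."""
    return [(q, simulate_pressure_drop(q)) for q in flows]


def fit_surrogate(dataset) -> float:
    """Least-squares fit of dp = k * q^2 through the origin; returns k."""
    numerator = sum((q ** 2) * dp for q, dp in dataset)
    denominator = sum((q ** 2) ** 2 for q, _ in dataset)
    return numerator / denominator


training_flows = [10.0, 20.0, 30.0, 40.0, 50.0]
k_hat = fit_surrogate(make_synthetic_dataset(training_flows))

# The surrogate can then be queried at a flow rate absent from the training set.
predicted_dp_at_60 = k_hat * 60.0 ** 2
```

Because every training point comes from a physically valid scenario, the fitted model inherits the simulator's behaviour outside the sampled range, at least to the extent that the simulator itself is accurate there.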

This approach eliminates one of AI’s greatest risks: the convincing but wrong answer. It also generates high volumes of accurate training data without resorting to potentially flawed historical sets. By grounding predictions in physics, digital twins become capable of anticipating behaviour under unseen conditions, a capability that rules-based systems could never achieve.

Trust remains the ultimate barrier to AI adoption in operations. Oil and gas is an industry where every decision carries weight, and recommendations that cannot be explained are quickly dismissed. Hole is clear that validation, governance, and transparency are the bedrock of industrial intelligence.

To ensure accuracy, every digital twin output undergoes twin–plant parity checks: comparing simulator results with live plant data and flagging discrepancies through outlier detection. Human oversight remains integral, supported by role-based access controls and bounded autonomy that defines which decisions the AI can make independently and which require human confirmation.
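A parity check of this kind, together with a bounded-autonomy rule, might look like the following sketch. The outlier test uses a modified z-score based on the median absolute deviation, which is one common choice and not necessarily the method Kongsberg Digital uses; the threshold of 3.5, the action names, and the sample readings are all assumptions.

```python
from statistics import median


def parity_outliers(simulated, measured, threshold: float = 3.5) -> list:
    """Return indices where the plant-minus-simulator residual is an outlier."""
    residuals = [m - s for s, m in zip(simulated, measured)]
    centre = median(residuals)
    mad = median(abs(r - centre) for r in residuals)
    if mad == 0:
        return []  # no spread to judge against
    return [
        i for i, r in enumerate(residuals)
        if 0.6745 * abs(r - centre) / mad > threshold
    ]


# Hypothetical policy: actions the agent may take without human confirmation.
AUTONOMOUS_ACTIONS = {"raise_alert", "adjust_setpoint_within_band"}


def requires_human_confirmation(action: str) -> bool:
    """Anything outside the approved set is routed to a person."""
    return action not in AUTONOMOUS_ACTIONS


simulated = [50.0, 50.0, 50.0, 50.0, 50.0, 50.0]
measured = [50.1, 49.9, 50.2, 49.8, 50.0, 58.0]
flagged = parity_outliers(simulated, measured)
```

In this invented data set only the final reading is flagged, and any response beyond raising an alert would wait for an operator's approval.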

Security is equally stringent. AI-enhanced twins are developed within hardened software lifecycles that include threat modelling, static code analysis, and 24-hour monitoring by security operations centres. Compliance frameworks span ISO standards for quality, environment, and information security, alongside GDPR adherence and independent audits.

Hole summarises the philosophy simply: “Building trust requires lineage and traceability. Every recommendation must be explainable.”

Trust also extends to quantifiable results. Operators using AI-augmented twins have reported measurable improvements within months of deployment. Shell Norway, for instance, achieved a three-million-dollar operating-cost reduction in its first year, with greater uptime and more accurate energy forecasting. Eighty-five per cent of users reported higher efficiency within three months of onboarding.

Regional momentum and the human factor

The Middle East has become a proving ground for large-scale digital transformation. Hole sees the region moving from pilot projects to enterprise-wide deployments across asset operations, drilling, and LNG. A proof-of-concept with Aramco for drilling and well operations demonstrates how cloud-based platforms like SiteCom can unify data and empower frontline teams.

Oman’s Petroleum Development Oman (PDO) is taking a similar path, deploying six enterprise twins within a single year to unify operations and maintenance. Their goals, safer operations, higher production, and a competitive cost base, reflect a regional focus on operational integrity and performance optimisation.

Success, however, still depends on people. “It is essential to keep humans at the centre,” Hole stresses. Building data literacy, providing copilot support, and developing intuitive interfaces help users move from reactive to predictive ways of working. Change management, in his view, is as much about empathy as it is about training. The technology will only succeed if it amplifies, rather than alienates, the human workforce.

A new era of collaboration

The next phase of AI in oil and gas will be defined by coordination, not of isolated systems but of entire value chains. Hole foresees a shift towards closed-loop optimisation, multi-asset orchestration, and agentic maintenance planning, where autonomous agents collaborate to manage complex operations with human oversight.

“Any system with sufficient complexity will be augmented or replaced by agentic systems,” he says. “Coupled with a digital twin, this allows people to bypass complexity and express their intentions directly — how they want to work and what they want to achieve.”

This concept forms the basis of Kongsberg Digital’s long-term development: an Industrial Work Surface where live data, engineering knowledge, and AI converge. It represents a new interface for human–machine collaboration, one where intention replaces command and knowledge becomes collective.

Hole sees the destination clearly: real-time, AI-supported decisions embedded in end-to-end workflows that directly impact business objectives. The convergence of physics-informed AI, transparent governance, and human creativity marks not just a technological shift but a cultural one.

In this vision of the future, intelligence is not confined to algorithms or individuals but distributed across systems, teams, and disciplines. The twin is no longer a digital model; it is the medium through which the industry thinks, acts, and learns together.

The oil and gas industry is entering a new era where artificial intelligence and digital twins are reshaping how facilities are conceived, built, and run. This convergence promises greater efficiency and resilience, but it also demands new thinking about cybersecurity, governance, and the responsible use of machine intelligence.

Oil and gas facilities have always represented the pinnacle of engineering complexity. Every refinery, LNG plant, and offshore platform is an intricate ecosystem of processes designed to balance safety, performance, and reliability. What is changing today is not the ambition, but the means. Facility planning has evolved from a static exercise in engineering to a dynamic, continuously adaptive process fuelled by simulation, analytics, and machine learning. Artificial intelligence and digital twins are central to this transformation, blending physical modelling with computational reasoning to design assets that learn and improve over their lifetime.

The shift is profound. Planning no longer ends once construction begins. With digital twins acting as living models, every design choice and operational adjustment feeds back into the loop. The facility becomes a self-referencing organism, one that can analyse its own efficiency, anticipate maintenance needs, and test new strategies in a safe virtual environment. Yet the path towards this level of intelligence is not without barriers. Scaling AI across such complex, safety-critical systems raises serious questions about cybersecurity, data integrity, and human oversight.

Artificial intelligence has already changed the rules of facility design. AI-driven optimisation tools can evaluate thousands of layout configurations for a refinery or gas plant in hours, weighing variables such as cost, safety margins, and energy efficiency. Digital twins provide the canvas for these simulations, allowing engineers to validate choices before construction begins and to understand how future operational changes will ripple through the system.

Companies including Bentley Systems, AVEVA, and Hexagon are integrating AI directly into their design platforms. Their software can detect conflicts across disciplines, generate design options automatically, and predict the implications of specific materials or configurations under fluctuating conditions. The result is faster project delivery and fewer surprises downstream. But the deeper value lies in knowledge retention. Once the model learns from each iteration, the intelligence embedded in one project becomes the foundation for the next.

AI is also changing attitudes to the asset life cycle. Rather than planning facilities around fixed production assumptions, engineers are now designing for flexibility. Digital twins, constantly updated with live data, can forecast when process technologies will need upgrading or when changing feedstock composition might affect output. This visibility allows planners to schedule interventions well in advance, aligning maintenance with production goals and sustainability targets.

As the boundary between virtual and physical operations disappears, cybersecurity becomes mission critical. A modern refinery or offshore platform produces vast streams of real-time data: temperatures, pressures, flow rates, maintenance records, and thousands of control signals. When that data feeds into AI models, it becomes both a strategic asset and a potential vulnerability.

The danger is not theoretical. A corrupted or incomplete dataset can distort how an AI model learns, resulting in false insights or unsafe recommendations. Hallucinations, where AI generates seemingly valid but inaccurate information, present another challenge. In a safety-critical environment, even small deviations can have serious consequences.

To address these risks, companies are embedding cybersecurity controls deep within their twin architectures. Zero-trust network principles, end-to-end encryption, and continuous anomaly detection are now standard features of industrial AI ecosystems. Siemens’ Xcelerator and Rockwell Automation’s FactoryTalk Hub, for example, incorporate real-time integrity monitoring that validates the accuracy of every data exchange. Cloud providers such as Microsoft and AWS have expanded their industrial AI offerings to include compliance layers that meet IEC 62443 and NIST 800-82 standards.
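
To make the idea of validating a data exchange concrete, the sketch below signs a sensor reading with an HMAC and checks it on receipt. It is a generic illustration only, not how any of the platforms named above work; the key, the sensor tag `PT-101`, and the field names are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real deployment would load this from a key vault.
SECRET_KEY = b"example-shared-secret"


def sign_reading(reading: dict, key: bytes = SECRET_KEY) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of the reading."""
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_reading(reading: dict, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Compare tags in constant time; False means the reading was altered in transit."""
    return hmac.compare_digest(sign_reading(reading, key), tag)


# Illustrative reading from an assumed pressure transmitter.
reading = {"sensor": "PT-101", "pressure_bar": 42.7, "timestamp": "2025-01-01T00:00:00Z"}
tag = sign_reading(reading)

# A copy with one value changed should fail verification.
tampered = dict(reading, pressure_bar=12.0)
```

A receiver that gets `reading` and `tag` would call `verify_reading` before passing the value to a model, so that a modified value is rejected rather than learned from.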

Data privacy is equally critical. AI models need large volumes of information, yet not all data can or should be shared across systems. Federated learning has emerged as an elegant solution. Instead of centralising sensitive data, AI models are trained locally on distributed nodes, and only the insights, not the raw data, are exchanged. This allows multiple operators to collaborate and improve their algorithms without exposing commercially or operationally sensitive information.
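
The exchange described above can be sketched with federated averaging, one common way of combining locally trained models. This is a minimal illustration under stated assumptions: two hypothetical sites, a toy linear model, and made-up data points. The function names are not from any particular library.

```python
from typing import List, Tuple

Weights = List[float]
Sample = Tuple[float, float]  # (x, y)


def local_update(weights: Weights, data: List[Sample], lr: float = 0.1) -> Weights:
    """Run one pass of gradient descent for y = w0 + w1 * x on a site's private data."""
    w0, w1 = weights
    for x, y in data:
        err = (w0 + w1 * x) - y
        w0 -= lr * err
        w1 -= lr * err * x
    return [w0, w1]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average site weights, weighting each site by its number of samples."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Hypothetical private datasets; both follow y = 1 + 2x and never leave their site.
site_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
site_b = [(3.0, 7.0), (4.0, 9.0)]

global_weights = [0.0, 0.0]
for _ in range(200):
    # Each site trains locally; only the resulting weights are shared.
    updates = [(local_update(global_weights, d), len(d)) for d in (site_a, site_b)]
    global_weights = federated_average(updates)
```

Only the two weight values cross the site boundary in each round. Production systems typically add secure aggregation or differential privacy on top, which this sketch omits.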

The human dimension remains the weakest link and the strongest defence. Engineers and analysts must understand how to interpret AI outputs and validate their reasoning. The industry is now building cross-functional teams that combine process knowledge, data science, and ethical governance, a skill set that will become as vital as traditional control engineering once was.

The next leap in facility intelligence lies in the creation of custom AI agents, specialised digital entities designed to carry out specific engineering or operational tasks within the twin environment. These agents act independently but coordinate with humans and other systems, making decisions within defined parameters.

Unlike general-purpose AI models, these agents are trained on domain-specific data: process diagrams, equipment specifications, and years of operational history. A planning agent might evaluate pipeline configurations to minimise energy losses, while an operational agent could analyse equipment behaviour to prevent pump cavitation. Over time, these agents build domain memory, a contextual awareness that allows them to recommend improvements before problems occur.

Vendors are already moving in this direction. Cognite is developing orchestration tools within its Data Fusion platform to manage multiple AI agents simultaneously. IBM’s watsonx Orchestrate and Microsoft’s Copilot Studio are evolving toward similar architectures, enabling coordination between predictive models, optimisation engines, and human operators. Within a digital twin, these agents form a cooperative ecosystem where humans define the strategy and AI manages the execution.

However, autonomy introduces accountability. The industry cannot rely on black-box algorithms where the decision logic is invisible. Explainability must be a design feature, not an afterthought. Standards bodies are now working on frameworks for responsible AI deployment in industrial environments, establishing principles for auditability, transparency, and ethical compliance. The goal is clear: AI must enhance, not replace, human responsibility.

The boundary between AI reasoning and robotic execution is narrowing. Robotic process automation (RPA), once confined to digital back-office tasks, is now moving into the physical world of pumps, valves, and sensors. In the oil and gas sector, RPA combined with IoT and AI is giving rise to autonomous operational platforms that can perform inspections, data collection, and even corrective actions without direct supervision.

Digital twins provide the contextual intelligence these systems rely on. They act as both a testbed and a command hub, simulating actions before they are executed in the field. ABB, Emerson, and Honeywell are integrating robotic automation within their process control systems, enabling operators to model and validate entire maintenance routines virtually before implementation.

The impact on safety and cost is significant. Remote assets such as unmanned gas compression stations or subsea facilities can be managed with reduced on-site personnel and faster response times. RPA systems, guided by AI, can coordinate logistics across multiple sites, dispatching autonomous inspection drones or maintenance robots where needed. Each improvement reduces human exposure to risk while maintaining full operational visibility.

Nevertheless, autonomy requires discipline. Every automated sequence introduces dependencies between code, sensors, and machinery. Continuous monitoring and layered fail-safes are essential to prevent cascading faults. The industry’s long experience with control automation provides a valuable foundation, but the addition of AI adds both power and complexity. True autonomy will only be achieved when these systems can learn responsibly within boundaries set by human oversight.

Open architectures for a connected future

Interoperability has long been a stumbling block in oil and gas digitalisation. Proprietary data formats and incompatible systems hinder collaboration and slow innovation. Open architecture represents the next stage of maturity, an environment where data can move freely across applications and vendors without losing meaning or security.

The Digital Twin Consortium and The Open Group’s OSDU Forum are driving this vision. By defining common data models and standard APIs, they aim to make digital twins interoperable across the entire energy ecosystem. This means information generated in one platform, for instance a 3D layout from AVEVA or a process optimisation from AspenTech, can flow seamlessly into another without costly integration work.

Open standards such as ISO 23247, the digital twin framework for manufacturing, and the OPC UA companion specifications for energy form the technical backbone of this interoperability. When applied to oil and gas, they allow data from engineering, procurement, construction, and operations to be combined into a coherent digital narrative that spans the facility’s lifetime.

The benefits extend far beyond technical convenience. Open architectures reduce vendor lock-in, encourage competition, and lower barriers for smaller innovators to contribute specialised tools. They also support more sophisticated AI by providing larger, cleaner datasets. Over time, a globally interoperable network of twins could emerge, a living ecosystem of shared intelligence where operators benchmark performance, exchange insights, and accelerate progress across the sector.

Responsible AI and the human dimension

Technology alone cannot guarantee progress. The integration of AI into critical infrastructure raises ethical and organisational questions that reach beyond the control room. Responsible AI is no longer a theoretical debate; it is an operational necessity.

Transparency is the foundation. Engineers and executives must understand how models reach conclusions, what data informs their outputs, and where uncertainties lie. Explainability becomes essential when AI recommendations influence safety-critical decisions. Bias, too, must be addressed, ensuring that historical data does not reinforce outdated assumptions about efficiency, cost, or safety.

Governance mechanisms are emerging to manage this new responsibility. Several major operators are establishing AI assurance boards and ethics committees to review algorithms before deployment. Others are experimenting with AI passports, digital certificates that document how each model was trained, validated, and approved. These systems provide a verifiable chain of trust and align with global regulatory trends toward accountability and auditability in AI.
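
One way to picture such a passport is as a small, tamper-evident record. The sketch below is an assumption about what one might contain, not a description of any operator's scheme. The field names, model name, and reviewer labels are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ModelPassport:
    """Hypothetical record of how a model was trained, validated, and approved."""
    model_name: str
    version: str
    training_data_sha256: str
    validated_by: str
    approved_by: str

    def fingerprint(self) -> str:
        """Hash the record's fields so any later change to them is detectable."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


# Illustrative values only.
passport = ModelPassport(
    model_name="pump-cavitation-detector",
    version="1.2.0",
    training_data_sha256=hashlib.sha256(b"example training set").hexdigest(),
    validated_by="assurance-board",
    approved_by="ethics-committee",
)
recorded = passport.fingerprint()

# Changing any field, such as the approver, yields a different fingerprint.
altered = replace(passport, approved_by="unknown")
```

Storing `recorded` alongside the deployed model lets an auditor recompute the fingerprint later and confirm that the documented training and approval details have not been changed.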

Human expertise remains the anchor. Engineers bring the intuition and contextual awareness that no algorithm can replicate. The most advanced digital ecosystems will therefore combine human judgement with AI insight in a balanced, symbiotic partnership. The twin may detect anomalies or inefficiencies, but it is human experience that determines how to act upon them.

The convergence of AI, digital twins, and robotics represents more than a technological milestone; it marks a philosophical shift in how oil and gas companies conceive intelligence itself. Facilities are no longer passive constructs but dynamic systems that think, adapt, and evolve. Planning becomes predictive, operations become collaborative, and improvement becomes continuous.

Yet the goal is not automation for its own sake. It is the creation of a sustainable, transparent, and resilient energy infrastructure, one where insight flows as freely as hydrocarbons once did. Achieving that vision will require discipline, open collaboration, and a culture that treats data not as an output but as the fabric of decision-making.

AI will not replace the engineer. It will extend their reach, accelerate their analysis, and amplify their creativity. When combined with robust digital twins, open architectures, and responsible governance, it has the potential to redefine how energy infrastructure is conceived and managed.

The connected oilfield of the future will not simply be more digital; it will be more intelligent, contextual, and humane. Intelligence by design will no longer describe technology; it will describe the industry itself.

The convergence of artificial intelligence, digital twins, and immersive technologies is redefining remote operations in oil and gas. What was once a matter of monitoring from afar is becoming a dynamic, data-rich environment where assets think, learn, and collaborate with people in real time.

Oil and gas has always been a distributed enterprise. Exploration happens in one region, production in another, refining in a third. For decades, distance was a logistical challenge: equipment, data, and expertise had to move constantly between field and headquarters. The advent of digital transformation has turned that challenge into an opportunity. Through advanced connectivity, AI, and digital twins, the industry is beginning to operate without boundaries, where physical assets are mirrored by living digital systems that enable continuous optimisation, even from thousands of kilometres away.

Remote operations are not new, but their meaning is changing. The next generation of systems does not simply transmit information to a control room. It interprets, predicts, and acts. The digital twin is no longer a passive replica but the command centre of an intelligent network — the central nervous system of the industrial metaverse.

The foundation of remote operations lies in visibility. Digital twins provide that visibility not only through data integration but through context. They combine design blueprints, sensor readings, and operational data to create a single, coherent representation of the asset. With AI embedded at their core, these twins can analyse performance trends, detect anomalies, and recommend corrective actions long before issues reach the threshold of concern.

Operators such as Equinor, ExxonMobil, and BP are already leveraging AI-driven twins to monitor offshore platforms and subsea installations in real time. Equinor’s operations centre in Bergen, for example, manages production assets across the North Sea with minimal on-site personnel. Engineers can view a virtual replica of the installation, drill into component data, and adjust control parameters directly through the twin interface. The impact is significant: fewer helicopter trips, lower safety risk, and more efficient allocation of expertise.

AI adds a predictive layer to this visibility. By training on historical and live data, algorithms can forecast equipment wear, predict energy usage, or simulate the outcome of specific process adjustments. When combined with distributed control systems (DCS) and advanced process control (APC), these insights can be used to optimise performance autonomously. The system learns continuously, refining its models as conditions evolve.
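
As a simple illustration of forecasting equipment wear, the sketch below fits a straight line to a vibration trend and estimates when it would cross an alarm limit. Real predictive models are far richer. The readings, the limit of 6.0, and the function names here are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_limit(hours: Sequence[float], vibration: Sequence[float],
                      limit: float) -> Optional[float]:
    """Estimate running hours left before the fitted trend reaches the limit."""
    slope, intercept = fit_line(hours, vibration)
    if slope <= 0:
        return None  # no upward trend, so no crossing is forecast
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - hours[-1])


# Hypothetical vibration readings (mm/s) logged every 100 running hours.
hours = [0, 100, 200, 300, 400]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
remaining = hours_until_limit(hours, vibration, limit=6.0)
```

With these made-up readings the trend rises by 0.5 mm/s per 100 hours, so the estimate is roughly 400 further running hours before the limit is reached.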

This evolution from monitoring to mastery marks a fundamental shift in how remote operations are conceived. The twin is not a dashboard; it is a living ecosystem. Engineers interact with it as they would with a colleague, querying performance metrics or scenario outcomes in natural language. Over time, this human–machine collaboration is transforming operations from a centralised command structure to a distributed intelligence network, where expertise and analytics flow seamlessly across geographies and disciplines.

Leveraging new technologies for AI maintenance

Maintenance has always been one of the costliest and riskiest aspects of oil and gas operations, particularly in remote environments. AI is now addressing that burden through predictive, prescriptive, and even autonomous maintenance models that extend equipment life while reducing human exposure.